Problem

I am trying to use the pretrained ResNet model provided in torchvision.models, which is pre-trained on ImageNet, where images are RGB. However, my dataset consists of CT scan images, which are grayscale. I am wondering how to feed my data into this model to fine-tune its parameters.

There are several thoughts:

- Fill the other two channels with all 0’s or 1’s.

- Copy the single channel images three times.
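To make the two ideas concrete, here is a minimal sketch in PyTorch. The random batch and the names `gray`, `padded`, and `copied` are only for illustration and are not from my actual pipeline.

```python
import torch

# Hypothetical batch of grayscale images: (batch, 1 channel, height, width).
gray = torch.rand(4, 1, 28, 28)

# Option 1: fill the other two channels with a constant
# (zeros here; torch.ones_like would give the all-1's variant).
zeros = torch.zeros_like(gray)
padded = torch.cat([gray, zeros, zeros], dim=1)

# Option 2: copy the single channel three times along the channel axis.
copied = gray.repeat(1, 3, 1, 1)

print(padded.shape, copied.shape)
```

Both results have three channels, so either one matches the input shape an RGB model expects.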

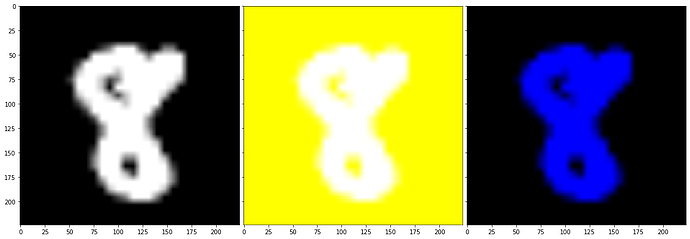

I tried these with the MNIST dataset and got the following, where the first one corresponds to copying the channel three times, while the other two correspond to padding with all 1's and padding with all 0's, respectively.

From this, it seems that copying the single channel three times makes more sense, since the result looks essentially the same as the original image. But I am not sure whether anyone has encountered this problem and found an empirically reasonable solution.

Thank you in advance!