Hi,

I want to train a network in such a way that forward pass happens on one physical device and backprop happens on a physically different device only loss from forward prop will be transmitted to different device to carry out backprop. Both devices will have same architecture. How can I accomplish this funtionality?

I might be wrong, but I don’t think that’s easily doable at the moment, since the forward pass on the first device would also create all intermediate tensors on this device.

While you could move the complete model to a different device, the computation graph would still be on the first device.

Maybe there are some 3rd party libs or I’m missing something, so let’s wait for some experts to chime in.

I looked into how autograd works. The graph tracks which all operations led to the loss calculation and then when we do loss.backprop() the gradients for all the parameters that influenced loss calculation are calculated. I don’t exactly know how the operation history is stored under the hood. If I can share the operation history with other device then may be it might work. Right!?

Yes, the explanation is right from the high-level perspective, I think.

That is exactly what I’m not sure how one could achieve this.

Hi,

I tried to find a way around this problem but I am not sure why it did not work. I know that this is a “hack” and not recommended way.

I calculated the loss from network 1 which is present in device 1 with torch.no_grad(). Now the loss from network 1 (L1) and I am going to use it to backprop on Network 2 (same architecture) since the backward graph is not constructed I cannot directly do backprop on Network 2 and grad will be None. What I did was to generate a random tensor of the size required by Network 2 and some random numbers as labels. Then I ran forward prop with those randomly generated tensors and labels so that the backward graph is established. Now I calculate the loss of random label and model output (of course this does not make sense) but after the calculation of loss, I replace “data” (loss.data) with loss from network 1 assuming that the backprop will update weights based on replaced value. I wanted to know if this should work technically or not.

No, I don’t think this will work correctly.

The forward pass in Network2 used the fake inputs, which will create intermediate activations (outputs of layers) and store them. Replacing the loss.data is also quite hacky, but let’s skip this part.

Assuming you are able to replace the loss.data and call backward() on it, the intermediates using the fake input would be used to calculate the backward activations, which will be wrong.

Hi,

Thanks for your reply. “the intermediates using the fake input would be used to calculate the backward activations, which will be wrong”. What exactly are “backward activations” and are they created and stored during forward prop? Can I access and replace these intermediate activations mentioned in this sentence “which will create intermediate activations (outputs of layers) and store them” ???

By “backward activation” I meant the data gradient. In particular the weight gradient would need the stored forward activations as described in this tutorial by Michael Nielsen.

That is exactly what I think is not easily possible, since you cannot directly access these tensors (at least I don’t know how it would work with native PyTorch methods).

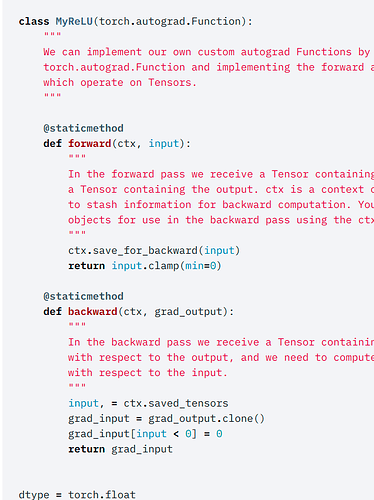

You might be able to use a hacky way of defining custom autograd.Functions, store the forward activations in the context, and move it to another device, but I don’t know how much work this would be.

Yes I was thinking of defining my own Autograd function like this and access the info stored for during forward prop that will be used during backprop

Yes, this is what I thought about in the last sentence.

I’m not completely sure at the moment, if this could be used in a nice and “automatic” way, but it might be the cleanest approach.

So if I have to write ResNet 18 then how should I go about it. Please let me know if you have some suggestions

I used simple neural network present in this tutorial Neural Networks — PyTorch Tutorials 1.7.1 documentation. And the I ran following code to traverse through backward graph.

def print_graph(g, level=0):

if g == None: return

print('*'*level*4, g)

try:

if hasattr(g, 'variable'):

if g.variable != None:

print(g.variable.shape)

except AttributeError:

print("Att Error")

for subg in g.next_functions:

print_graph(subg[0], level+1)

print_graph(loss.grad_fn, 0)

Then I print the attribute “variable” of the resulting function references. I got the following output.

<MseLossBackward object at 0x7f19050ca450>

**** <AddmmBackward object at 0x7f19050ca050>

******** <AccumulateGrad object at 0x7f1952175c90>

Parameter containing:

tensor([-0.0592, -0.0064, -0.0859, 0.0137, -0.0122, -0.0408, -0.0129, 0.0420,

0.0408, -0.0777], requires_grad=True)

******** <ReluBackward0 object at 0x7f190505b5d0>

************ <AddmmBackward object at 0x7f19529da3d0>

**************** <AccumulateGrad object at 0x7f1905049fd0>

Parameter containing:

tensor([-0.0852, 0.0711, -0.0529, 0.0253, -0.0687, -0.0191, 0.0109, -0.0637,

-0.0835, 0.0307, -0.0588, 0.0702, 0.0028, 0.0560, 0.0864, 0.0302,

0.0669, 0.0851, -0.0052, -0.0498, -0.0722, 0.0502, 0.0811, 0.0148,

-0.0395, 0.0683, -0.0101, -0.0277, 0.0355, -0.0374, 0.0797, 0.0109,

0.0004, -0.0398, 0.0050, -0.0326, 0.0070, -0.0093, -0.0353, 0.0369,

0.0107, 0.0800, -0.0273, 0.0206, 0.0458, -0.0124, 0.0437, 0.0592,

-0.0872, -0.0605, -0.0092, 0.0007, 0.0509, 0.0232, -0.0645, 0.0424,

0.0121, -0.0880, -0.0848, -0.0260, 0.0208, 0.0830, 0.0748, 0.0704,

-0.0264, -0.0332, -0.0344, 0.0117, -0.0481, -0.0593, 0.0569, -0.0851,

-0.0383, -0.0719, 0.0456, 0.0741, -0.0249, 0.0299, -0.0028, -0.0546,

-0.0836, 0.0771, -0.0716, 0.0334], requires_grad=True)

**************** <ReluBackward0 object at 0x7f1905049bd0>

******************** <AddmmBackward object at 0x7f190505b4d0>

************************ <AccumulateGrad object at 0x7f1905b4b050>

Parameter containing:

tensor([-0.0314, 0.0057, -0.0292, -0.0389, -0.0282, -0.0348, -0.0059, -0.0182,

0.0184, 0.0365, 0.0276, 0.0292, 0.0299, -0.0124, -0.0321, 0.0153,

-0.0116, 0.0002, -0.0154, 0.0284, 0.0350, 0.0179, -0.0235, -0.0189,

-0.0319, -0.0368, -0.0340, -0.0106, 0.0393, 0.0163, 0.0355, 0.0277,

-0.0204, 0.0361, 0.0399, 0.0074, 0.0073, 0.0337, 0.0009, -0.0266,

0.0328, 0.0182, 0.0235, 0.0150, 0.0174, -0.0252, 0.0327, -0.0085,

0.0032, -0.0318, -0.0034, 0.0003, -0.0363, 0.0283, -0.0210, 0.0122,

-0.0046, -0.0274, 0.0087, -0.0262, 0.0136, -0.0126, -0.0226, 0.0299,

0.0074, -0.0110, -0.0212, -0.0156, -0.0380, 0.0293, 0.0273, 0.0044,

-0.0208, -0.0056, 0.0083, 0.0263, 0.0098, -0.0169, 0.0315, -0.0224,

0.0150, -0.0282, 0.0058, 0.0238, -0.0277, 0.0146, -0.0050, 0.0312,

-0.0211, -0.0325, -0.0003, 0.0095, 0.0216, 0.0156, -0.0403, -0.0391,

0.0288, 0.0258, 0.0215, 0.0164, -0.0062, -0.0193, -0.0072, -0.0009,

0.0365, 0.0005, -0.0054, -0.0097, 0.0273, 0.0113, 0.0381, 0.0212,

-0.0156, -0.0144, 0.0042, 0.0195, 0.0251, 0.0311, -0.0224, -0.0157],

requires_grad=True)

************************ <ViewBackward object at 0x7f19541e04d0>

**************************** <MaxPool2DWithIndicesBackward object at 0x7f1904ffeb50>

******************************** <ReluBackward0 object at 0x7f1904ffe950>

************************************ <ThnnConv2DBackward object at 0x7f1904ffee90>

**************************************** <MaxPool2DWithIndicesBackward object at 0x7f1904ffef10>

******************************************** <ReluBackward0 object at 0x7f1904ffe990>

************************************************ <ThnnConv2DBackward object at 0x7f190505c8d0>

**************************************************** <AccumulateGrad object at 0x7f190505c910>

Parameter containing:

tensor([[[[-0.0876, 0.0887, 0.2264],

[-0.1105, -0.2922, -0.0815],

[-0.3276, 0.2306, -0.2369]]],

[[[ 0.2339, -0.1458, -0.1523],

[ 0.1509, 0.1113, -0.2124],

[ 0.0690, -0.0764, 0.0996]]],

[[[-0.0988, 0.2070, -0.0587],

[ 0.1129, 0.1283, -0.3057],

[-0.0881, -0.0724, 0.1704]]],

[[[-0.2162, -0.3303, 0.0373],

[-0.2590, 0.2704, 0.2639],

[ 0.1568, -0.1274, -0.2560]]],

[[[-0.1211, 0.0606, 0.3259],

[-0.2867, -0.3028, 0.2560],

[-0.0520, 0.2823, 0.2699]]],

[[[ 0.2249, -0.3091, 0.0165],

[ 0.2286, 0.1231, -0.0838],

[ 0.2699, 0.2960, 0.3096]]]], requires_grad=True)

**************************************************** <AccumulateGrad object at 0x7f190505c990>

This is partial output. Are those printed tensors the “backward activation” that are used during backprop ??

It could be, but I really don’t know, as I’ve never tried to traverse through the backward graph from the Python frontend.

Okay. These are actually the weights initialized by the network.