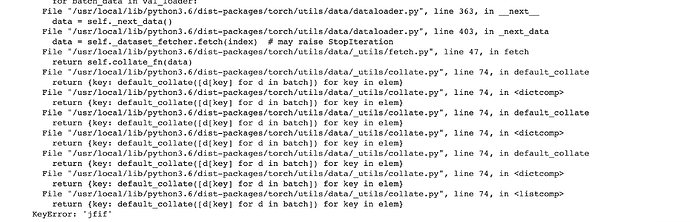

I am getting this error while using a DataLoader for the validation dataset. What could be causing this?

Can you please add the code that's causing the error?

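It seems like the error is caused by the default collate function, which takes the list of samples your Dataset returns and batches them into torch tensors. For dictionary samples, it uses the keys of the first sample and looks each one up in every other sample of the batch. I would presume that 'jfif' is a key in the dictionaries your Dataset returns that is missing from some of the samples, and the DataLoader fails when it tries to collate them.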
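To illustrate the mechanism, here is a simplified pure-Python stand-in for the dictionary branch of PyTorch's `default_collate`. It is a sketch, not the real function: `toy_collate` is a hypothetical name, it gathers leaves into plain lists instead of tensors, and the sample dicts (including the nested `image_meta_dict` layout and its values) are made up for the example.

```python
from collections.abc import Mapping


def toy_collate(batch):
    """Simplified stand-in for the mapping branch of default_collate.

    Like the real function, it takes the keys of the FIRST sample and looks
    each one up in every sample, so a key missing from a later sample raises
    KeyError. Non-dict leaves are gathered into plain lists, not tensors.
    """
    elem = batch[0]
    if isinstance(elem, Mapping):
        return {key: toy_collate([sample[key] for sample in batch]) for key in elem}
    return list(batch)


# Hypothetical samples with placeholder values: the second image's
# metadata lacks the 'jfif' entry that the first one has.
samples = [
    {"image": 1, "image_meta_dict": {"filename": "a.jpg", "jfif": 257}},
    {"image": 2, "image_meta_dict": {"filename": "b.jpg"}},
]

try:
    toy_collate(samples)
except KeyError as err:
    print("KeyError:", err)  # prints: KeyError: 'jfif'
```

Note the asymmetry: a key that only a later sample has is silently ignored, while a key the first sample has but a later one lacks raises the error. So whether the batch fails can depend on which sample happens to come first.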

So the code that takes your Dataset class and constructs a DataLoader from it would be very helpful.

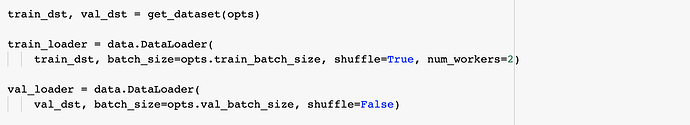

This is the place where the dataset is transformed:

```python
import glob
import os

import monai
# Transform names as used in the original code; LoadPNGd and AsChannelFirstd
# have been removed from newer MONAI releases.
from monai.transforms import (
    AsChannelFirstd, Lambdad, LoadPNGd, NormalizeIntensityd, Rand2DElasticd,
    RandAdjustContrastd, RandAffined, RandFlipd, RandGaussianNoised,
    RandRotate90d, RandRotated, RandZoomd, Resized, ScaleIntensityd, ToTensord,
)


def get_dataset(opts):
    """ Dataset And Augmentation
    """
    train_path = os.path.join(opts.data_root, 'new_data/trainset')
    val_path = os.path.join(opts.data_root, 'new_data/valset')

    # preprocess dataset: pair images and masks by sorted filename order
    train_images = sorted(glob.glob(os.path.join(train_path, "images", "*.jpg")))
    train_segs = sorted(glob.glob(os.path.join(train_path, "masks", "*.jpg")))
    val_images = sorted(glob.glob(os.path.join(val_path, "images", "*.jpg")))
    val_segs = sorted(glob.glob(os.path.join(val_path, "masks", "*.jpg")))

    train_files = [{"image": img, "label": seg} for img, seg in zip(train_images, train_segs)]
    val_files = [{"image": img, "label": seg} for img, seg in zip(val_images, val_segs)]
    print("train_files", len(train_files))
    print("val_files", len(val_files))

    # for normalizing (get_imagenet_mean_std is defined elsewhere, not shown)
    mean, std = get_imagenet_mean_std()

    train_transform = monai.transforms.Compose(
        [
            LoadPNGd(keys=["image", "label"]),
            AsChannelFirstd(keys=["image", "label"], channel_dim=-1),
            # collapse the RGB mask to a single luminance channel
            Lambdad(keys=['label'], func=lambda x: x[0:1] * 0.2125 + x[1:2] * 0.7154 + x[2:3] * 0.0721),
            Resized(["image", "label"], spatial_size=(256, 256)),
            ScaleIntensityd(keys=["image", "label"], minv=0.0, maxv=1.0),
            RandAdjustContrastd(keys=["image"], prob=0.3),
            RandGaussianNoised(keys=["image"], prob=0.5),
            RandRotate90d(keys=["image", "label"], prob=0.5),
            RandFlipd(keys=["image", "label"], prob=0.5),
            # note: MONAI rotation ranges are given in radians
            RandRotated(keys=["image", "label"], range_x=180, range_y=180, prob=0.5),
            RandZoomd(keys=["image", "label"], prob=0.2, min_zoom=1, max_zoom=2),
            RandAffined(keys=["image", "label"], prob=0.5),
            Rand2DElasticd(keys=["image", "label"], magnitude_range=(0, 1), spacing=(0.3, 0.3), prob=0.5),
            NormalizeIntensityd(keys=["image"], subtrahend=mean, divisor=std),
            ToTensord(keys=["image", "label"]),
            # binarize the mask
            Lambdad(keys=['label'], func=lambda x: (x > 0).float()),
        ]
    )

    val_transform = monai.transforms.Compose(
        [
            LoadPNGd(keys=["image", "label"]),
            AsChannelFirstd(keys=["image", "label"], channel_dim=-1),
            Lambdad(keys=['label'], func=lambda x: x[0:1] * 0.2125 + x[1:2] * 0.7154 + x[2:3] * 0.0721),
            Resized(["image", "label"], spatial_size=(256, 256)),
            ScaleIntensityd(keys=["image", "label"], minv=0.0, maxv=1.0),
            NormalizeIntensityd(keys=["image"], subtrahend=mean, divisor=std),
            ToTensord(keys=["image", "label"]),
        ]
    )

    # datasets
    training_dataset = monai.data.PersistentDataset(train_files, transform=train_transform)
    val_dataset = monai.data.Dataset(val_files, transform=val_transform)
    return training_dataset, val_dataset
```
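One way to test the missing-key hypothesis is to load the validation samples and compare each one's keys, including keys of nested metadata dicts, against the first sample's. The sketch below assumes each sample is a (possibly nested) dict; `key_paths` and `find_key_mismatches` are hypothetical helper names, and the demo samples are made up.

```python
from collections.abc import Mapping


def key_paths(sample, prefix=()):
    """Return every key path in a (possibly nested) dict, as tuples."""
    paths = set()
    for key, value in sample.items():
        path = prefix + (key,)
        paths.add(path)
        if isinstance(value, Mapping):
            paths |= key_paths(value, path)
    return paths


def find_key_mismatches(samples):
    """Compare each sample's key paths with those of the first sample.

    Returns (index, missing, extra) for every sample that differs. 'missing'
    paths are the ones a collate step driven by the first sample's keys
    would fail on.
    """
    reference = key_paths(samples[0])
    report = []
    for index, sample in enumerate(samples[1:], start=1):
        paths = key_paths(sample)
        missing = reference - paths
        extra = paths - reference
        if missing or extra:
            report.append((index, sorted(missing), sorted(extra)))
    return report


# Made-up samples: only the first image's metadata carries 'jfif'.
samples = [
    {"image": 0, "image_meta_dict": {"filename": "a.jpg", "jfif": 257}},
    {"image": 0, "image_meta_dict": {"filename": "b.jpg"}},
]
print(find_key_mismatches(samples))
# prints: [(1, [('image_meta_dict', 'jfif')], [])]
```

Against the real data this would be something like `samples = [val_dataset[i] for i in range(len(val_dataset))]`, which applies `val_transform` to every file, so it may be slow on a large validation set.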

This is where the DataLoader takes the dataset: