Hello everyone,

For the last couple of days, I have been struggling with some code on how to train the french version of Bert (CamemBert) to classify some tweets (over 60.000) into 10 different classes. My biggest problem is that I don’t get any errors with the code below. It works fine and very well with other databases. With only 5 epochs, it gives higher results but with my database, it structures with a 0.5 F1 Score with even 10 epochs knowing that the 2 databases have the same structure which is even bizarre!

After mounting my drive and install all the needed packages, here is my code :

df = pd.read_csv('ALL.csv')

| commentaire | classement | |

|---|---|---|

| 0 | Nul à chier | Hate |

| 1 | VIDEO DE MERDE KFC EST TRES BIEN ET LE PDG VS … | Hate |

| 2 | il faut arreter de faire des videos clash roya… | Hate |

| 3 | 9 ans ou pas le branleur je me fous en slip ,j… | Hate |

| 4 | Nul | Hate |

#change all the label to int

possible_labels = df.classement.unique()

label_dict = {}

for index, possible_label in enumerate(possible_labels):

label_dict[possible_label] = index

label_dict

Neutral 50000

Hate 3000

Homophobia 3000

Mockery 3000

Racism 3000

Troll 3000

Moral Harassment 3000

Sexual Harassment 3000

Threat 3000

Insult 3000

Name: classement, dtype: int64

#split data : training \ test

from sklearn.model_selection import train_test_split

X_train, X_val, y_train, y_val = train_test_split(df.index.values,

df.label.values,

test_size=0.15,

stratify=df.label.values)

df['data_type'] = ['not_set']*df.shape[0]

df.loc[X_train, 'data_type'] = 'train'

df.loc[X_val, 'data_type'] = 'val'

df.groupby(['classement', 'label', 'data_type']).count()

{‘Hate’: 0,

‘Homophobia’: 1,

‘Insult’: 2,

‘Mockery’: 3,

‘Moral Harassment’: 5,

‘Neutral’: 6,

‘Racism’: 7,

‘Sexual Harassment’: 8,

‘Threat’: 9,

‘Troll’: 10,

nan: 4}

#I think the problem cames from here

#I tied to convert the data into lists

msgTrain = df[df.data_type=='train'].commentaire.astype(str).values.tolist()

msgVal = df[df.data_type=='val'].commentaire.astype(str).values.tolist()

tokenizer = CamembertTokenizer.from_pretrained('camembert-base', do_lower_case=True)

encoded_data_train = tokenizer.batch_encode_plus(msgTrain,

add_special_tokens=True,

return_attention_mask = True,

max_length=50,

padding=True,

truncation=True,

return_tensors = 'pt'

)

encoded_data_val = tokenizer.batch_encode_plus(msgVal,

add_special_tokens=True,

return_attention_mask = True,

max_length=50,

padding=True,

truncation=True,

return_tensors = 'pt'

)

input_ids_train = encoded_data_train['input_ids']

attention_masks_train = encoded_data_train['attention_mask']

labels_train = torch.tensor(df[df.data_type=='train'].label.values)

input_ids_val = encoded_data_val['input_ids']

attention_masks_val = encoded_data_val['attention_mask']

labels_val = torch.tensor(df[df.data_type=='val'].label.values)

dataset_train = TensorDataset(input_ids_train, attention_masks_train, labels_train)

dataset_val = TensorDataset(input_ids_val, attention_masks_val, labels_val)

model = CamembertForSequenceClassification.from_pretrained("camembert-base",

num_labels=len(label_dict),

#output_attentions=False,

#output_hidden_states=False

)

from torch.utils.data import DataLoader, RandomSampler, SequentialSampler

batch_size = 32

dataloader_train = DataLoader(dataset_train,

sampler=RandomSampler(dataset_train),

batch_size=batch_size)

dataloader_validation = DataLoader(dataset_val,

sampler=SequentialSampler(dataset_val),

batch_size=batch_size)

from transformers import AdamW, get_linear_schedule_with_warmup

#optimizer

optimizer = AdamW(model.parameters(),

lr=1e-3,

eps=1e-6)

epochs = 10

scheduler = get_linear_schedule_with_warmup(optimizer,

num_warmup_steps=0,

num_training_steps=len(dataloader_train)*epochs)

from sklearn.metrics import f1_score

def f1_score_func(preds, labels):#F1

preds_flat = np.argmax(preds, axis=1).flatten()

labels_flat = labels.flatten()

return f1_score(labels_flat, preds_flat, average='weighted')

def accuracy_per_class(preds, labels):#accurancy

label_dict_inverse = {v: k for k, v in label_dict.items()}

preds_flat = np.argmax(preds, axis=1).flatten()

labels_flat = labels.flatten()#all class in one vec

for label in np.unique(labels_flat):

y_preds = preds_flat[labels_flat==label]

y_true = labels_flat[labels_flat==label]

print(f'Class: {label_dict_inverse[label]}')

print(f'Accuracy: {len(y_preds[y_preds==label])}/{len(y_true)}\n')

import random

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = model.to(device)

def evaluate(dataloader_val):

model.eval()

loss_val_total = 0

predictions, true_vals = [], []

for batch in dataloader_val:

batch = tuple(b.to(device) for b in batch)

inputs = {'input_ids': batch[0],

'attention_mask': batch[1],

'labels': batch[2],

}

with torch.no_grad():

outputs = model(**inputs)

loss = outputs[0]

logits = outputs[1]

loss_val_total += loss.item()

logits = logits.detach().cpu().numpy()

label_ids = inputs['labels'].cpu().numpy()

predictions.append(logits)#recupr

true_vals.append(label_ids)

loss_val_avg = loss_val_total/len(dataloader_val)

predictions = np.concatenate(predictions, axis=0)

true_vals = np.concatenate(true_vals, axis=0)

return loss_val_avg, predictions, true_vals

for epoch in tqdm(range(1, epochs+1)):

model.train()

loss_train_total = 0

progress_bar = tqdm(dataloader_train, desc='Epoch {:1d}'.format(epoch), leave=False, disable=False)

for batch in progress_bar:

model.zero_grad()

batch = tuple(b.to(device) for b in batch)

inputs = {'input_ids': batch[0],

'attention_mask': batch[1],

'labels': batch[2],

}

outputs = model(**inputs)

loss = outputs[0]

loss_train_total += loss.item()

loss.backward()

torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

optimizer.step()

scheduler.step()

progress_bar.set_postfix({'training_loss': '{:.3f}'.format(loss.item()/len(batch))})

torch.save(model.state_dict(), f'finetuned_BERT_epoch_{epoch}.model') #save epoch stat

tqdm.write(f'\nEpoch {epoch}')#num epoch

loss_train_avg = loss_train_total/len(dataloader_train)

tqdm.write(f'Training loss: {loss_train_avg}')

val_loss, predictions, true_vals = evaluate(dataloader_validation)

val_f1 = f1_score_func(predictions, true_vals)

tqdm.write(f'Validation loss: {val_loss}')

tqdm.write(f'F1 Score (Weighted): {val_f1}')

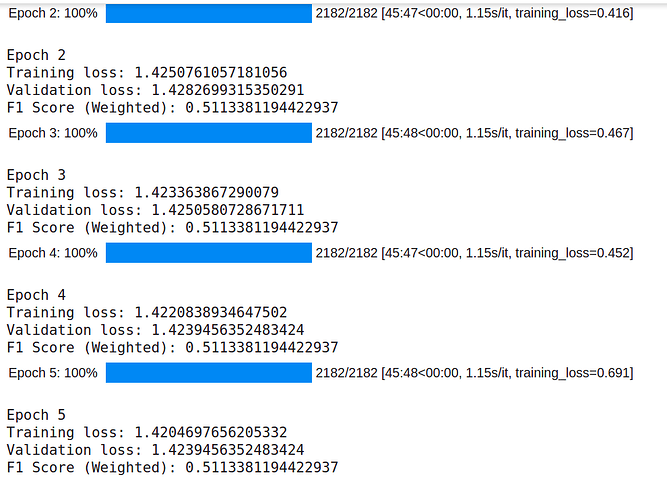

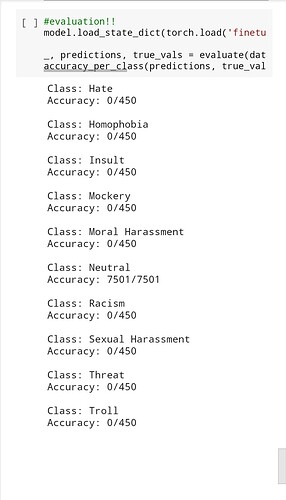

Results are all the same in every epoch like this :

That is all. Can anyone help me please ? maybe I did miss something important!