Hey there,

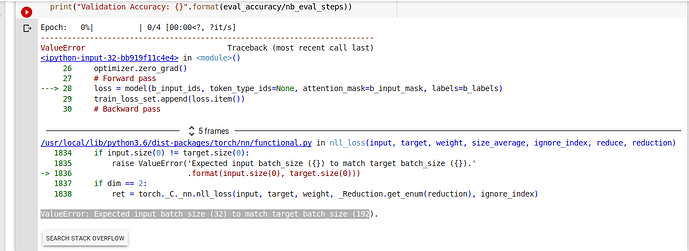

I am trying to use BERT for a multilabel classification task, but I am getting the unexpected error shown in the screenshot.

I set batch_size to 32 in both the training and validation data loaders.

Here is my code:

    import torch
    from tqdm import trange

    # model, optimizer, device, train_dataloader, validation_dataloader and
    # flat_accuracy are defined elsewhere (not shown here).
    train_loss_set = []  # per-batch training loss
    epochs = 4

    for _ in trange(epochs, desc="Epoch"):
        # ---- Training ----
        model.train()
        tr_loss = 0
        nb_tr_examples, nb_tr_steps = 0, 0

        for step, batch in enumerate(train_dataloader):
            # Move every tensor in the batch to the target device
            batch = tuple(t.to(device) for t in batch)
            b_input_ids, b_input_mask, b_labels = batch

            optimizer.zero_grad()
            # Passing labels makes the model return the loss
            loss = model(b_input_ids, token_type_ids=None,
                         attention_mask=b_input_mask, labels=b_labels)
            train_loss_set.append(loss.item())

            loss.backward()
            optimizer.step()

            tr_loss += loss.item()
            nb_tr_examples += b_input_ids.size(0)
            nb_tr_steps += 1

        print("Train loss: {}".format(tr_loss / nb_tr_steps))

        # ---- Validation ----
        model.eval()
        eval_loss, eval_accuracy = 0, 0
        nb_eval_steps, nb_eval_examples = 0, 0

        for batch in validation_dataloader:
            batch = tuple(t.to(device) for t in batch)
            b_input_ids, b_input_mask, b_labels = batch

            # No gradients are needed for evaluation
            with torch.no_grad():
                logits = model(b_input_ids, token_type_ids=None,
                               attention_mask=b_input_mask)

            logits = logits.detach().cpu().numpy()
            label_ids = b_labels.to('cpu').numpy()

            tmp_eval_accuracy = flat_accuracy(logits, label_ids)
            eval_accuracy += tmp_eval_accuracy
            nb_eval_steps += 1

        print("Validation Accuracy: {}".format(eval_accuracy / nb_eval_steps))
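
For reference, `flat_accuracy` is not shown in the code above. The following is only a minimal sketch of what a multilabel version could look like, not the helper actually used here. It assumes that `logits` and `label_ids` are NumPy arrays of shape `(batch_size, num_labels)`, that `label_ids` is multi-hot (0/1 per label), and that a fixed 0.5 threshold is acceptable. The `threshold` parameter and the sample arrays are made up for illustration.

```python
import numpy as np


def flat_accuracy(logits, label_ids, threshold=0.5):
    """Fraction of individual (example, label) decisions that match the targets."""
    # Sigmoid turns each logit into an independent per-label probability.
    probs = 1.0 / (1.0 + np.exp(-logits))
    # A label counts as predicted when its probability reaches the threshold.
    preds = (probs >= threshold).astype(int)
    # Compare element-wise and average over every (example, label) pair.
    return float(np.mean(preds == label_ids.astype(int)))


# Hypothetical batch: 2 examples, 3 labels each.
logits = np.array([[2.0, -1.0, 0.5],
                   [-3.0, 1.5, -0.2]])
label_ids = np.array([[1, 0, 0],
                      [0, 1, 0]])

print(flat_accuracy(logits, label_ids))
```

This scores each label independently, so one wrong label in an example lowers the score only slightly. A stricter variant would count an example as correct only when all of its labels match.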