Hi,

I’m using PyTorch to implement a CNN model for collaborative filtering, but I get the following error:

Given groups=1, weight of size 1 64 9 9, expected input[1, 1, 16, 16] to have 64 channels, but got 1 channels instead

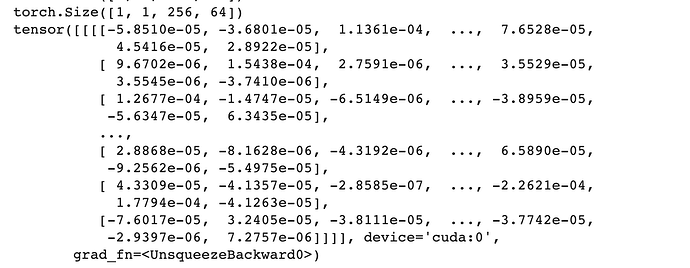

The input to the model is a tensor of shape torch.Size([1, 1, 256, 64]).

Code for the CNN:

    import torch.nn as nn

    CNN_modules = []
    CNN_modules.append(nn.Conv2d(1, 64, (225, 33)))
    CNN_modules.append(nn.ReLU())
    CNN_modules.append(nn.Conv2d(64, 1, (17, 17)))
    CNN_modules.append(nn.ReLU())
    CNN_modules.append(nn.Conv2d(64, 1, (9, 9)))
    CNN_modules.append(nn.ReLU())
    CNN_modules.append(nn.Conv2d(64, 1, (5, 5)))
    CNN_modules.append(nn.ReLU())
    CNN_modules.append(nn.Conv2d(64, 1, (3, 3)))
    CNN_modules.append(nn.ReLU())
    CNN_modules.append(nn.Conv2d(64, 1, (1, 1)))
    CNN_modules.append(nn.ReLU())

I want to keep 64 features for all hidden conv layers. I think the error may come from the data, because this tensor is not a grayscale image. Can anybody help me solve the problem? Thank you so much!
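For what it's worth, the shapes can be traced by hand without running PyTorch. The sketch below is plain Python and assumes the `nn.Conv2d` defaults (stride 1, no padding), so each spatial size shrinks by `kernel - 1`. `trace_shapes`, `posted` and `chained` are hypothetical names used only for illustration. `chained` is one possible way to keep 64 channels in the hidden layers, assuming only the last layer should reduce to 1 channel.

```python
# Each entry is (in_channels, out_channels, (kernel_h, kernel_w)),
# mirroring the arguments passed to nn.Conv2d with default stride/padding.

def trace_shapes(layers, shape):
    """Return the (C, H, W) shape after each layer; raise on a channel mismatch."""
    c, h, w = shape
    shapes = []
    for i, (c_in, c_out, (kh, kw)) in enumerate(layers):
        if c != c_in:
            raise ValueError(
                f"layer {i}: expected {c_in} channels, got {c} (input {c}x{h}x{w})"
            )
        # Unpadded, stride-1 convolution: out = in - kernel + 1
        c, h, w = c_out, h - kh + 1, w - kw + 1
        shapes.append((c, h, w))
    return shapes

# The layer list as posted: every layer after the first outputs 1 channel.
posted = [
    (1, 64, (225, 33)), (64, 1, (17, 17)), (64, 1, (9, 9)),
    (64, 1, (5, 5)), (64, 1, (3, 3)), (64, 1, (1, 1)),
]

# Assumed variant: hidden layers map 64 -> 64, only the last maps 64 -> 1.
chained = [
    (1, 64, (225, 33)), (64, 64, (17, 17)), (64, 64, (9, 9)),
    (64, 64, (5, 5)), (64, 64, (3, 3)), (64, 1, (1, 1)),
]

try:
    trace_shapes(posted, (1, 256, 64))
    mismatch = None
except ValueError as err:
    mismatch = str(err)  # reports the first layer whose input channels do not match

final_shapes = trace_shapes(chained, (1, 256, 64))
print(mismatch)
print(final_shapes)
```

In this trace the posted list fails at the third conv layer, which receives a 1x16x16 input while expecting 64 channels, the same shapes as in the error message. That suggests the mismatch comes from the layer definitions rather than from the data. If this reading is right, the corresponding PyTorch change would be to write the hidden layers as `nn.Conv2d(64, 64, ...)`.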

This is the data of the input tensor: