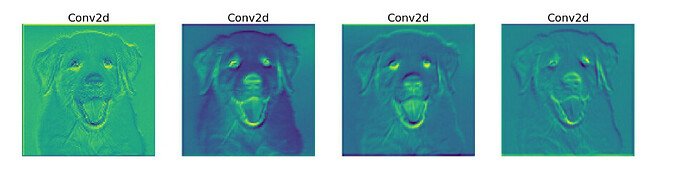

Hello, I am new to using pytorch, but I manage to get almost everything working, but now I am using Googlenet pretrained and the model works fine, the problem is that I need to see the visualization layers and verify that the model is considering the relevant aspects. , what I want is something like the following image

The problem is that when I try to pass the image through the convolutional layers one after another, I get this error:

Given groups=1, weight of size [96, 192, 1, 1], expected input[1, 64, 125, 125] to have 192 channels, but got 64 channels instead

This is a part of the model architecture.

[GoogLeNet(

(conv1): BasicConv2d(

(conv): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(maxpool1): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)

(conv2): BasicConv2d(

(conv): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(conv3): BasicConv2d(

(conv): Conv2d(64, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(maxpool2): MaxPool2d(kernel_size=3, stride=2, padding=0, dilation=1, ceil_mode=True)

(inception3a): Inception(

(branch1): BasicConv2d(

(conv): Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(branch2): Sequential(

(0): BasicConv2d(

(conv): Conv2d(192, 96, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(1): BasicConv2d(

(conv): Conv2d(96, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

)

(branch3): Sequential(

(0): BasicConv2d(

(conv): Conv2d(192, 16, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(16, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(1): BasicConv2d(

(conv): Conv2d(16, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

)

(branch4): Sequential(

(0): MaxPool2d(kernel_size=3, stride=1, padding=1, dilation=1, ceil_mode=True)

(1): BasicConv2d(

(conv): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

)

)

(inception3b): Inception(

(branch1): BasicConv2d(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(branch2): Sequential(

(0): BasicConv2d(

(conv): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(1): BasicConv2d(

(conv): Conv2d(128, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

)

(branch3): Sequential(

(0): BasicConv2d(

(conv): Conv2d(256, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn): BatchNorm2d(32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

(1): BasicConv2d(

(conv): Conv2d(32, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)

)

)

Iterating over the layers stops at the fifth convolution, Conv2d(192, 96, kernel_size=(1, 1), stride=(1, 1), bias=False).

I have a list of all the convolutional layers, like this:

[Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False), Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False), Conv2d(64, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False), Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1), bias=False), Conv2d(192, 96, kernel_size=(1, 1), stride=(1, 1), bias=False), …]

I iterate over them using this code:

outputs = []
names = []
for layer in conv_layers[0:]:
    print(layer)
    image = layer(image)
    outputs.append(image)
    names.append(str(layer))
print(len(outputs))
for i in outputs:
    print(i.shape)
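Because the loop feeds each layer's output straight into the next layer, the channel counts have to line up from one layer to the next. The following is only a rough sketch of that bookkeeping in plain Python, using the (in_channels, out_channels) pairs of the five layers listed above; the names `conv_shapes` and `first_mismatch` are made up for illustration and are not part of PyTorch.

```python
# (in_channels, out_channels) of the first five Conv2d layers from the list above.
conv_shapes = [(3, 64), (64, 64), (64, 192), (192, 64), (192, 96)]


def first_mismatch(shapes, input_channels=3):
    """Return (index, expected, got) for the first layer whose in_channels
    differs from what the previous layer produced, or None if all match."""
    channels = input_channels
    for index, (in_ch, out_ch) in enumerate(shapes):
        if in_ch != channels:
            return index, in_ch, channels
        channels = out_ch  # the next layer in the chain receives this output
    return None


mismatch = first_mismatch(conv_shapes)
print(mismatch)
```

In the printed architecture, Conv2d(192, 64, ...) is `branch1` of `inception3a` and Conv2d(192, 96, ...) is the first layer of `branch2`, and both declare 192 input channels, so the second one cannot take the 64-channel output of the first.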

Could somebody help me? I have no idea what the problem is. I can see that the convolution that is giving me trouble is part of a Sequential, but I don't know if that is the cause. This is the output:

Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)

Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

Conv2d(64, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

Conv2d(192, 96, kernel_size=(1, 1), stride=(1, 1), bias=False)

RuntimeError Traceback (most recent call last)
in
      3 for layer in conv_layers[0:]:
      4     print(layer)
----> 5     image = layer(image)
      6     outputs.append(image)
      7     names.append(str(layer))

2 frames

/usr/local/lib/python3.7/dist-packages/torch/nn/modules/conv.py in _conv_forward(self, input, weight, bias)
    452                         _pair(0), self.dilation, self.groups)
    453         return F.conv2d(input, weight, bias, self.stride,
--> 454                         self.padding, self.dilation, self.groups)
    455
    456     def forward(self, input: Tensor) -> Tensor:

RuntimeError: Given groups=1, weight of size [96, 192, 1, 1], expected input[1, 64, 125, 125] to have 192 channels, but got 64 channels instead
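One commonly used way to collect per-layer outputs without calling the layers by hand is to register forward hooks and then run the model's own forward pass once. Below is a minimal sketch of that idea on a made-up two-branch model, not on the GoogLeNet from this post; `TinyInception`, `save_output` and `feature_maps` are hypothetical names, and the channel sizes are arbitrary. It assumes the branches read the same input and are concatenated along the channel dimension, as the 256 input channels of `inception3b` suggest (64 + 128 + 32 + 32).

```python
import torch
import torch.nn as nn


class TinyInception(nn.Module):
    """Hypothetical stand-in for an Inception block: both branches read the same input."""

    def __init__(self):
        super().__init__()
        self.branch1 = nn.Conv2d(8, 4, kernel_size=1, bias=False)
        self.branch2 = nn.Sequential(
            nn.Conv2d(8, 6, kernel_size=1, bias=False),
            nn.Conv2d(6, 5, kernel_size=3, padding=1, bias=False),
        )

    def forward(self, x):
        # Branch outputs are concatenated along the channel dimension.
        return torch.cat([self.branch1(x), self.branch2(x)], dim=1)


model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1, bias=False),
    TinyInception(),
)
model.eval()

feature_maps = {}


def save_output(name):
    """Build a hook that stores the module's output under the given name."""
    def hook(module, inputs, output):
        feature_maps[name] = output.detach()
    return hook


# Attach one hook to every Conv2d, wherever it sits in the module tree.
handles = [
    module.register_forward_hook(save_output(name))
    for name, module in model.named_modules()
    if isinstance(module, nn.Conv2d)
]

with torch.no_grad():
    model(torch.randn(1, 3, 32, 32))  # the model routes the data itself

for handle in handles:
    handle.remove()  # detach the hooks once the maps are captured

for name, fmap in feature_maps.items():
    print(name, tuple(fmap.shape))
```

The same pattern should carry over to the pretrained model by swapping in the real network and image, though that is not tested here. Each entry in `feature_maps` can then be plotted channel by channel.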