Hey everyone,

I’m working on a project that deals with a huge amount of data. I created a custom Dataset for the DataLoader, but while GPU memory consumption is huge, GPU utilization stays at 0%.

This is the custom Dataset class:

```python
import re

import numpy as np
from torch.utils.data import Dataset

# `scaler` and `label_file` are globals defined elsewhere in my script:
# `scaler` is refitted on every file, `label_file` holds one column per label class.


class CustomDataset(Dataset):
    def __init__(self, filenames, batch_size, transform=None):
        self.filenames = filenames
        self.batch_size = batch_size
        self.transform = transform

    def __len__(self):
        return int(np.ceil(len(self.filenames) / float(self.batch_size)))  # Number of chunks.

    def __getitem__(self, idx):  # idx is the index of the chunk.
        # Raising IndexError ends plain `for x in dataset` iteration (the DataLoader's
        # default samplers only ask for indices below len(self)), so check before any work.
        if idx >= len(self):
            raise IndexError(idx)

        # Read the files of one chunk, preprocess them, extract the labels,
        # then return data and labels.
        batch_x = self.filenames[idx * self.batch_size:(idx + 1) * self.batch_size]  # One chunk of file-name lists.
        data = []
        labels = []
        for exp in batch_x:
            temp_data = []
            for file in exp:
                temp = np.loadtxt(file, delimiter=",")  # Change this line to read any other type of file.
                temp = scaler.fit_transform(temp)
                temp_data.append(temp)  # One (rows, cols) matrix per CSV file.
            pattern = exp[0][16:20]  # Pattern extracted from the file name.
            for column in label_file:
                if re.match(str(pattern), str(column)):  # Match the pattern against the label classes.
                    labels.append(np.asarray(label_file[column]))
            data.append(temp_data)

        # (chunk, files, rows, cols): the files act as channels (PyTorch's channel-first convention).
        data = np.asarray(data)
        if self.transform:
            data = self.transform(data)
        data = data.reshape(data.shape[0], -1)  # Flatten each sample to one vector.
        labels = np.asarray(labels)
        return data, labels
```

I decided to batch the data here already, but batching in the DataLoader instead doesn't change anything. `filenames` is a list of 50k lists, each containing the 48 CSV paths needed for one label, whose contents are then flattened.
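For comparison, here is a minimal sketch of the per-sample alternative, assuming each inner list holds the CSV paths of one sample and that exactly one label column matches each sample's pattern. `PerSampleDataset` and the `load_file` callable are hypothetical names; in real code the class would subclass `torch.utils.data.Dataset`, but it is kept as a plain map-style class (only `__len__` and `__getitem__`) so it runs without PyTorch. It resolves every label once in `__init__` instead of rescanning all label columns on every call, and leaves batching to the DataLoader. If the chunked dataset above is kept instead, passing `batch_size=None` to the `DataLoader` disables its automatic batching, so each chunk is not wrapped in an extra leading dimension.

```python
import re

import numpy as np


class PerSampleDataset:
    """Map-style dataset returning ONE sample; a DataLoader would do the batching.

    Hypothetical sketch: in real code this would subclass torch.utils.data.Dataset.
    """

    def __init__(self, filenames, label_table, load_file, transform=None):
        self.filenames = filenames  # list of lists of paths, one inner list per sample
        self.load_file = load_file  # assumed callable: path -> 2-D np.ndarray
        self.transform = transform
        # Resolve each sample's label once, up front, instead of in every __getitem__ call.
        self.labels = []
        for exp in filenames:
            pattern = str(exp[0][16:20])  # same file-name slice as in the original code
            matches = [c for c in label_table if re.match(pattern, str(c))]
            if len(matches) != 1:
                raise ValueError(f"expected one label column for {pattern!r}, got {matches}")
            self.labels.append(np.asarray(label_table[matches[0]], dtype=np.float32))

    def __len__(self):
        return len(self.filenames)  # number of samples, not number of chunks

    def __getitem__(self, idx):
        # Stack the per-file matrices into (files, rows, cols), then flatten to 1-D.
        # An out-of-range idx raises IndexError from the list lookup itself.
        sample = np.stack([self.load_file(path) for path in self.filenames[idx]])
        if self.transform:
            sample = self.transform(sample)
        return sample.reshape(-1).astype(np.float32), self.labels[idx]
```

The per-sample shape keeps each `__getitem__` call small, which lets several DataLoader workers prepare different samples of the same batch in parallel.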

Calling the DataLoader with `num_workers=os.cpu_count()` and `pin_memory=True` doesn't change anything either.
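Extra workers only spread the same per-sample cost over more processes; if each sample needs 48 `np.loadtxt` calls, text parsing may well dominate. A quick check, sketched here with made-up matrix sizes (`compare_csv_vs_npy` is a hypothetical helper), is to time reading the same matrix from a CSV file and from a binary `.npy` file:

```python
import os
import tempfile
import time

import numpy as np


def compare_csv_vs_npy(rows=2000, cols=8, repeats=5):
    """Time np.loadtxt on a CSV against np.load on the same data stored as .npy."""
    repeats = max(1, repeats)
    matrix = np.random.default_rng(0).standard_normal((rows, cols))
    with tempfile.TemporaryDirectory() as tmp:
        csv_path = os.path.join(tmp, "sample.csv")
        npy_path = os.path.join(tmp, "sample.npy")
        np.savetxt(csv_path, matrix, delimiter=",")
        np.save(npy_path, matrix)

        start = time.perf_counter()
        for _ in range(repeats):
            from_csv = np.loadtxt(csv_path, delimiter=",")  # text parsing
        csv_seconds = (time.perf_counter() - start) / repeats

        start = time.perf_counter()
        for _ in range(repeats):
            from_npy = np.load(npy_path)  # raw binary read
        npy_seconds = (time.perf_counter() - start) / repeats

    return from_csv, from_npy, csv_seconds, npy_seconds


if __name__ == "__main__":
    _, _, csv_s, npy_s = compare_csv_vs_npy()
    print(f"loadtxt: {csv_s * 1e3:.2f} ms per file, np.load: {npy_s * 1e3:.2f} ms per file")
```

Multiplying the per-file time by 48 gives a rough per-sample loading cost to compare against the time of one training step.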

When I use dummy variables instead, the GPU does work, and when I try to run that function on its own to save the data as .npy files, the kernel dies, so the bottleneck is definitely the data loading.
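The kernel dying during the `.npy` export may mean (this is a guess) that the whole converted array was built in RAM before saving. A minimal sketch of a one-time conversion that instead writes each sample straight into a preallocated, memory-mapped `.npy` file with `np.lib.format.open_memmap`, so only one sample is in memory at a time. `convert_to_memmap`, `read_sample`, and `load_file` are hypothetical names; every file is assumed to have the same shape, and the per-file scaling could happen inside `load_file`. Afterwards, `__getitem__` can open the file with `np.load(path, mmap_mode="r")` and slice out single samples without parsing any CSV.

```python
import os
import tempfile

import numpy as np


def convert_to_memmap(filenames, load_file, out_path):
    """Write every sample into one preallocated .npy file without holding it all in RAM.

    filenames: list of lists of paths (one inner list per sample).
    load_file: assumed callable path -> 2-D array (e.g. loadtxt plus per-file scaling).
    """
    first = np.stack([load_file(p) for p in filenames[0]])  # infer (files, rows, cols)
    out = np.lib.format.open_memmap(
        out_path, mode="w+", dtype=np.float32, shape=(len(filenames),) + first.shape
    )
    out[0] = first
    for i, exp in enumerate(filenames[1:], start=1):
        out[i] = np.stack([load_file(p) for p in exp])  # only one sample in memory
    out.flush()  # push the written pages to disk
    del out      # drop the reference so the memory map is closed
    return out_path


def read_sample(path, idx):
    """What __getitem__ could do afterwards: copy one flattened sample out of the memmap."""
    data = np.load(path, mmap_mode="r")
    return np.array(data[idx], dtype=np.float32).reshape(-1)


if __name__ == "__main__":
    # Tiny fake dataset: 4 samples x 3 "files", each a 2 x 5 matrix filled with the sample index.
    fake = [[f"s{i}_f{j}" for j in range(3)] for i in range(4)]
    with tempfile.TemporaryDirectory() as tmp:
        path = convert_to_memmap(fake, lambda p: np.full((2, 5), float(p[1])),
                                 os.path.join(tmp, "all.npy"))
        print(np.load(path).shape, read_sample(path, 3)[:5])
```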

When I try to time the data assignment:

```python
for i, data in enumerate(train_dataloader, 0):
    start_time = time.time()
    # Get and prepare inputs
    inputs, targets = data
    inputs, targets = inputs.to(dev), targets.to(dev)
    inputs, targets = inputs.float(), targets.float()
    print(time.time() - start_time)
```
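In the loop above, `start_time` is taken only after `enumerate` has already handed over the batch, so the printed number covers the transfer and the cast but not the loading itself. A minimal sketch that times both parts separately; `time_loader`, `step`, and `max_batches` are hypothetical names. With PyTorch on a GPU, `step` should end with `torch.cuda.synchronize()`, because CUDA work runs asynchronously and would otherwise not be counted in the step time.

```python
import time


def time_loader(loader, step, max_batches=20):
    """Return (wait_times, step_times): seconds spent waiting for each batch vs. using it."""
    wait_times, step_times = [], []
    batches = iter(loader)
    for _ in range(max_batches):
        start = time.perf_counter()
        try:
            batch = next(batches)  # time spent in the Dataset / DataLoader workers
        except StopIteration:
            break
        fetched = time.perf_counter()
        step(batch)  # e.g. .to(dev), .float(), forward and backward pass
        done = time.perf_counter()
        wait_times.append(fetched - start)
        step_times.append(done - fetched)
    return wait_times, step_times


if __name__ == "__main__":
    def slow_loader(n=5, delay=0.05):
        for i in range(n):
            time.sleep(delay)  # stand-in for reading 48 CSV files per sample
            yield i

    waits, steps = time_loader(slow_loader(), step=lambda batch: None)
    print(f"mean wait {sum(waits) / len(waits):.3f} s, mean step {sum(steps) / len(steps):.6f} s")
```

If the mean wait is much larger than the mean step, the GPU is idle most of the time waiting for data, which would match the 0% utilization.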

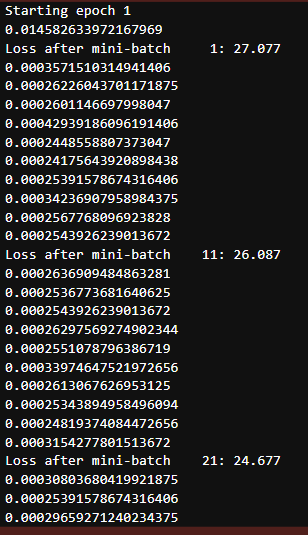

I get :

Does anyone have an idea how I can deal with this?

Thanks a lot!