class GraphConvolution(nn.Module):

    def __init__(self, in_features, out_features, dropout=0., act=F.relu):
        super(GraphConvolution, self).__init__()
        self.in_features = in_features
        self.out_features = out_features
        self.dropout = dropout
        self.act = act
        self.weight = glorot_init(in_features, out_features)
        self.reset_parameters()

    def reset_parameters(self):
        torch.nn.init.xavier_uniform_(self.weight)

    def forward(self, input, adj):
        input = F.dropout(input, self.dropout, self.training)
        support = torch.mm(input, self.weight)
        output = torch.spmm(adj, support)
        output = self.act(output)
        return output

    def __repr__(self):
        return self.__class__.__name__ + ' (' \
               + str(self.in_features) + ' -> ' \
               + str(self.out_features) + ')'

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

def collate(samples):
    g_list, f_list = zip(*samples)
    batched_graph = dgl.batch([g.to(device) for g in g_list])
    features = torch.cat([f.to(device) for f in f_list])
    return batched_graph, features

train_data_loader = DataLoader(training_graphs, batch_size=batch_size, shuffle=True, collate_fn=collate)

model = GVAE(input_feat_dim=156,
             hidden_dim1=vae_d1,
             hidden_dim2=vae_d2,
             emb_weights=embedding_vector,
             dropout=0.0)

model.to(device)

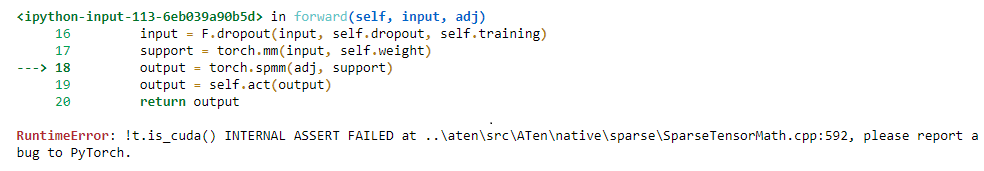

[quote="sungtae, post:1, topic:19965, full:true"]

Dear members,

When I run an autoencoder on the GPU using a GCN, I get this bug. Does anyone know how to resolve it?

Thanks