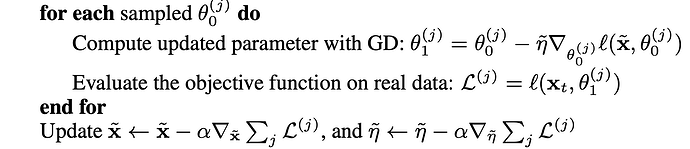

I’m trying to implement Dataset Distillation using basic optimizers and autograd. A crucial part of the algorithm is training the network on a small dataset, evaluating the loss of this network on another dataset, and calculating the gradient of this final loss wrt the training data

When I try to directly implement this, by using an optimizer to update weights and

torch.nn.loss for loss, autograd gives the following error:

One of the differentiated Tensors appears to not have been used in the graph

How can this be implemented using basic autograd and optimisers?