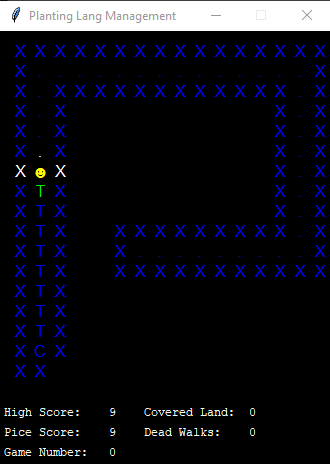

Hi, I am trying to make a simple AI that learns to walk down halls. Its inputs are what lies to its left, right, above, and below, and its output actions are move left, right, up, or down. The reward system is as follows:

- -1 if it tries to walk into a wall

- -1 if it walks over a space it has already walked over

- +1 if it walks over a space it has not yet walked over
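A minimal sketch of this reward scheme in Python. The function name `step_reward` and its two boolean arguments are hypothetical; how the environment detects walls and visited spaces is not shown here, so a set of `(row, col)` tuples is assumed for the visited cells.

```python
def step_reward(cell_is_wall: bool, cell_visited: bool) -> int:
    """Return the reward for attempting to move into a cell."""
    if cell_is_wall:
        return -1  # tried to walk into a wall
    if cell_visited:
        return -1  # walked over a space already walked over
    return 1       # walked over a space not yet walked over


# Hypothetical usage: visited cells tracked as (row, col) tuples.
visited = {(0, 0)}
target = (0, 1)
reward = step_reward(cell_is_wall=False, cell_visited=target in visited)
visited.add(target)
```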

I am trying to use the following loss function, based on a TRPO algorithm, to train the neural network:

![]()

I am able to calculate the loss value. However, I am not able to run loss.backward() without getting the following error:

element 0 of tensors does not require grad and does not have a grad_fn

Below is the code I am using:

model.opt.zero_grad()

qEval = model.forward(stateBatch) # Gets Gradients

qEval = torch.stack([torch.tensor([a.max()]) for a in qEval])

qValsNext = model.forward(nextStateBatch)

qValsNext = torch.stack([torch.tensor([a.max()]) for a in qValsNext])

loss = (rewardBatch + qValsNext - qEval)**2

loss.backward()

model.opt.step()

Thank you for the help!
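A likely cause, sketched under assumptions: wrapping each maximum in `torch.tensor(...)` builds a brand-new tensor from the values, so the result is no longer connected to the model's parameters and has no `grad_fn`. Taking the maximum with a tensor operation keeps the graph. In the sketch below, `net`, `opt`, and the random batches are hypothetical stand-ins for the model and data in the post. The `.mean()` reduction is also an assumption, added because `backward()` without arguments needs a scalar loss. Computing the next-state values under `torch.no_grad()` is a common Q-learning choice, not something the post states.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the model: 4 inputs (left, right, up, down), 4 action values.
net = nn.Linear(4, 4)
opt = torch.optim.SGD(net.parameters(), lr=0.01)

state_batch = torch.rand(8, 4)
next_state_batch = torch.rand(8, 4)
reward_batch = torch.ones(8)

# As in the post: torch.tensor(...) copies the values and drops the autograd graph.
detached = torch.stack([torch.tensor([a.max()]) for a in net(state_batch)])
print(detached.requires_grad)  # False

# Tensor-level max over the action dimension stays attached to the graph.
q_eval = net(state_batch).max(dim=1).values

# Assumption: treat the next-state values as a fixed target (no gradient).
with torch.no_grad():
    q_next = net(next_state_batch).max(dim=1).values

# Assumption: reduce to a scalar so backward() can be called without arguments.
loss = ((reward_batch + q_next - q_eval) ** 2).mean()

opt.zero_grad()
loss.backward()
opt.step()
```

If the per-sample losses are needed, they can be kept in a separate variable before the reduction. Only the value passed to `backward()` has to be a scalar.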