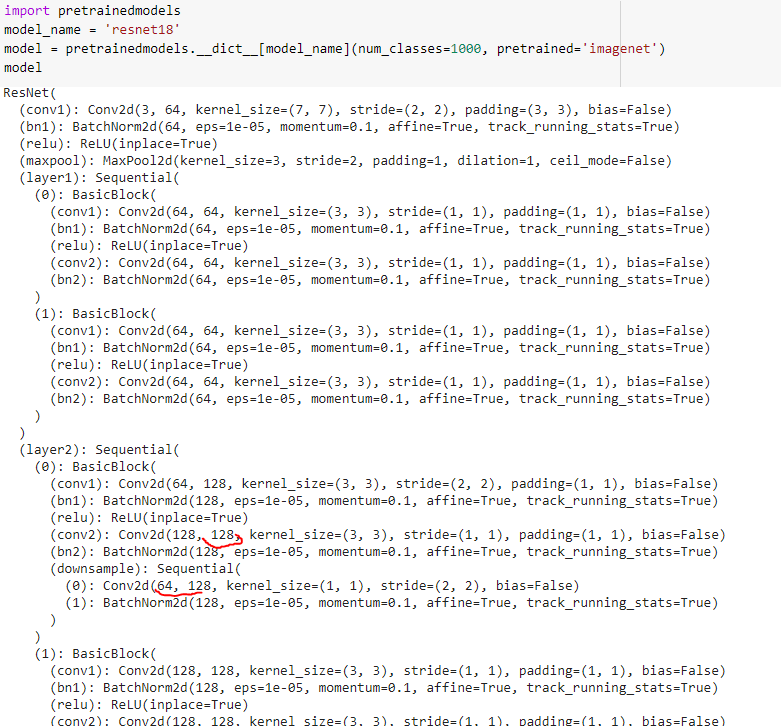

I am implementing a ResNet-18 model in which all the batch norm layers are replaced with Group Normalization layers.

Notebook Link

Running the notebook gives this error:

```
Exception in device=TPU:0: Given groups=1, weight of size [64, 64, 3, 3], expected input[512, 192, 128, 128] to have 64 channels, but got 192 channels instead
    return F.conv2d(x, weight, self.bias, self.stride ,self.padding)
RuntimeError: Given groups=1, weight of size [64, 64, 3, 3], expected input[512, 192, 128, 128] to have 64 channels, but got 192 channels instead
```

weight standardization class

```python
import torch.nn as nn
import torch.nn.functional as F


class conv2d(nn.Conv2d):
    def __init__(self, in_channels, out_channels, kernel_size, stride, padding, bias=True):
        # pass bias by keyword: the sixth positional argument of nn.Conv2d is dilation
        super().__init__(in_channels, out_channels, kernel_size, stride, padding, bias=bias)

    def forward(self, x):
        weight = self.weight
        # subtract the mean over (in_channels, kH, kW) for each output channel
        weight_mean = weight.mean(dim=1, keepdim=True).mean(dim=2, keepdim=True).mean(dim=3, keepdim=True)
        weight = weight - weight_mean
        # divide by the per-output-channel standard deviation
        std = weight.view(weight.size(0), -1).std(dim=1).view(-1, 1, 1, 1) + 1e-5
        weight = weight / std.expand_as(weight)
        return F.conv2d(x, weight, self.bias, self.stride, self.padding)  # Error is coming at this line
```
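Written out, the `forward` above appears to compute the following for each output channel `o`. The symbols are introduced here only for illustration and are not from the notebook: `N = C_in * k_h * k_w` is the number of weights in one output filter, and the `N - 1` assumes the default (unbiased) behaviour of `std`.

```latex
\mu_o = \frac{1}{N} \sum_{i,h,w} W_{o,i,h,w}, \qquad
\sigma_o = \sqrt{\frac{1}{N-1} \sum_{i,h,w} \left( W_{o,i,h,w} - \mu_o \right)^2}, \qquad
\hat{W}_{o,i,h,w} = \frac{W_{o,i,h,w} - \mu_o}{\sigma_o + 10^{-5}}
```

The standardized weight has the same shape as the original weight, so this step on its own should not change how many input channels the convolution expects.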

Next, the bottleneck class used for each ResNet block in the architecture:

```python
class bottleNeck(nn.Module):
    expansion = 3

    def __init__(self, input_planes, planes, stride=1, dim_change=None, use_ws=True):
        super(bottleNeck, self).__init__()  # inherits from nn.Module
        if use_ws:
            my_conv = conv2d
        else:
            my_conv = nn.Conv2d
        self.conv1 = my_conv(input_planes, planes, stride=1, kernel_size=3, padding=1, bias=False)
        self.gn1 = nn.GroupNorm(num_channels=planes, num_groups=32)
        self.conv2 = my_conv(planes, planes * self.expansion, stride=1, kernel_size=3, padding=1, bias=False)
        self.gn2 = nn.GroupNorm(num_channels=planes * self.expansion, num_groups=32)
        self.dim_change = dim_change

    def forward(self, x):
        res = x
        output = F.relu(self.gn1(self.conv1(x)))
        # no activation here, so that we can first check whether a dimension change is present
        output = self.gn2(self.conv2(output))
        if self.dim_change is not None:
            res = self.dim_change(res)
        output += res
        output = F.relu(output)
        return output
```

Now implementing the ResNet architecture:

```python
class resNet_18X3(nn.Module):
    def __init__(self, block, num_layers, classes=100, use_ws=True):
        super(resNet_18X3, self).__init__()
        if use_ws:
            my_conv = conv2d
        else:
            my_conv = nn.Conv2d
        self.input_planes = 64
        self.conv1 = my_conv(3, 64, stride=1, kernel_size=3, padding=1)
        self.gn1 = nn.GroupNorm(num_channels=64, num_groups=32)
        self.layer1 = self._layer(block, 64, num_layers[0], stride=1)
        self.layer2 = self._layer(block, 128, num_layers[1], stride=2)
        self.layer3 = self._layer(block, 256, num_layers[2], stride=2)
        self.layer4 = self._layer(block, 512, num_layers[3], stride=2)
        self.averagePool = nn.AvgPool2d(stride=1, kernel_size=4)
        self.fc = nn.Linear(512 * block.expansion, classes)

    def _layer(self, block, planes, num_layers, stride=1):
        dim_change = None  # set to None by default
        # condition under which a dimension change is needed
        if stride != 1 or planes != self.input_planes * block.expansion:
            dim_change = nn.Sequential(
                nn.Conv2d(self.input_planes, planes * block.expansion, kernel_size=1, stride=stride),
                nn.GroupNorm(num_channels=planes * block.expansion, num_groups=32),
            )
        netLayers = []
        netLayers.append(block(self.input_planes, planes, stride=stride, dim_change=dim_change))
        self.input_channels = planes * block.expansion
        for i in range(1, num_layers):
            netLayers.append(block(self.input_planes, planes))
            self.input_planes = planes * block.expansion
        return nn.Sequential(*netLayers)

    def forward(self, x):
        x = F.relu(self.gn1(self.conv1(x)))
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = F.avg_pool2d(x, 4)
        x = x.view(x.size(0), -1)  # flatten to (batch, features)
        x = self.fc(x)
        return x


model = resNet_18X3(bottleNeck, [2, 2, 2, 2])
```
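One way to narrow this down is to trace only the channel bookkeeping that `_layer` performs, without building any layers. The sketch below uses a hypothetical helper (`trace_layer` and its arguments are illustrative and not part of the notebook) that mirrors the assignments in `_layer` as posted, assuming `expansion = 3` and two blocks per layer.

```python
def trace_layer(input_planes, planes, num_blocks, expansion=3):
    """Return the (in_channels, out_channels) pair each block is built with.

    Mirrors the bookkeeping of `_layer` as posted; no layers are created.
    """
    blocks = []
    # First block: built from the current value of input_planes.
    blocks.append((input_planes, planes * expansion))
    # The posted code assigns to self.input_channels at this point, so the
    # value the loop reads (input_planes) is left unchanged.
    input_channels = planes * expansion  # stored, but never read again
    for _ in range(1, num_blocks):
        blocks.append((input_planes, planes * expansion))
        input_planes = planes * expansion
    return blocks


# layer1 of the posted model: input_planes=64, planes=64, two blocks
blocks = trace_layer(input_planes=64, planes=64, num_blocks=2)
for i, (c_in, c_out) in enumerate(blocks):
    print(f"block {i}: expects {c_in} channels, produces {c_out}")
```

Under these assumptions, the second block of `layer1` is built for 64 input channels while the first block produces 192, which is consistent with the `[512, 192, 128, 128]` input in the error message. The assignment to `self.input_channels` (rather than `self.input_planes`) after the first block looks like the likely reason the counter is not updated before the loop.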