I may have found what causes these problems.

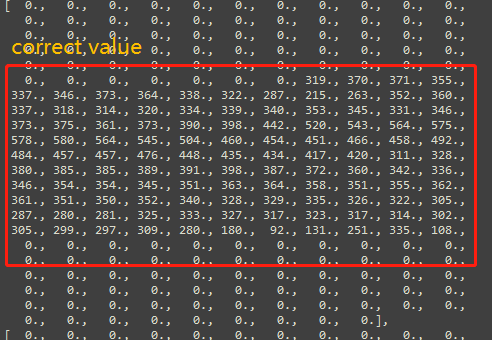

In PyTorch, after converting the NumPy array to a tensor, the image values don't change. Only the dtype changes, from int16 to float32:

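```python
import numpy as np
import torch

test_data = np.asarray(nda[110])            # one 2-D slice of nda, dtype int16
test_data = torch.from_numpy(test_data)     # int16 tensor with the same values
test_data = test_data.to(torch.float32)     # value-preserving cast to float32
test_data = torch.unsqueeze(test_data, 0)   # add a dim: [1, H, W]
test_data = torch.unsqueeze(test_data, 1)   # add a dim: [1, 1, H, W]
```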
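For reference, the Python steps above amount to a value-preserving cast: each int16 pixel becomes the same number as a float32, and the two `unsqueeze` calls only add size-1 dimensions, so a single-channel slice keeps its element order. A minimal standard-C++ sketch of that arithmetic (no LibTorch; the helper name `castSliceToFloat` is hypothetical):

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper mirroring the Python preprocessing:
// int16 -> float32 element by element, values unchanged.
// For a single-channel H x W slice, shape [1, 1, H, W] has the same
// row-major element order as [H, W], so no reordering is needed.
std::vector<float> castSliceToFloat(const std::vector<std::int16_t>& slice) {
    std::vector<float> out;
    out.reserve(slice.size());
    for (std::int16_t v : slice) {
        out.push_back(static_cast<float>(v));  // numeric conversion, not a bit copy
    }
    return out;
}
```

For example, an int16 pixel of -1024 comes out as -1024.0f.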

But in LibTorch, I implemented the same process with this code:

torch::Tensor tImage = torch::from_blob(src.data, { 240,240,1 }, torch::kFloat32);

tImage = tImage.permute({ 2,0,1 });

tImage = tImage.div(255);

tImage = tImage.unsqueeze(0);

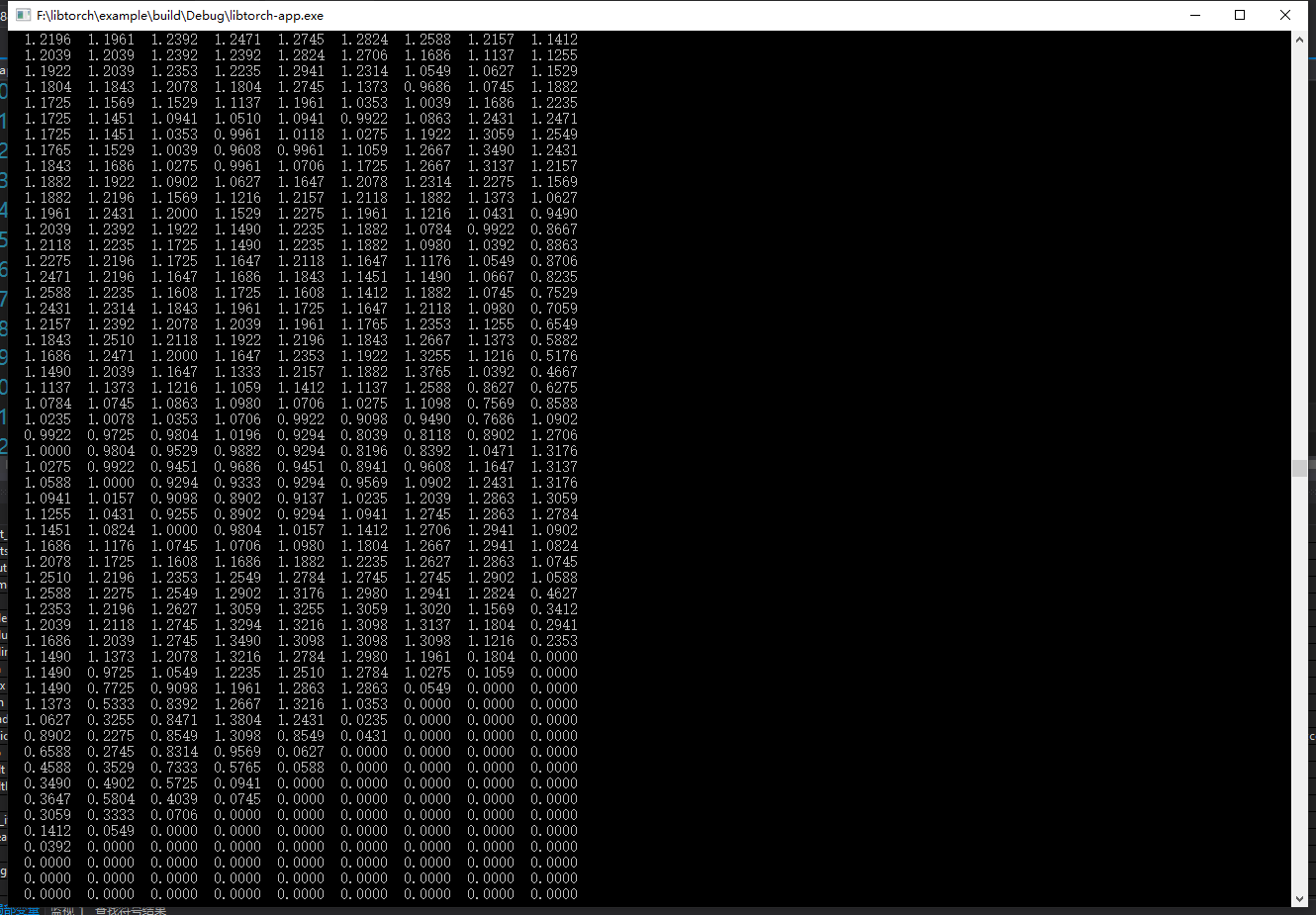

cout << "prob:" << tImage.data() << endl;

vector<torch::jit::IValue> inputs; //def an input

inputs.push_back(torch::ones({ 1,1,240,240 }).toType(torch::kFloat32));

and i get different values:
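One thing worth checking: `torch::from_blob` does not convert data, it only views the existing buffer as the dtype you pass. If `src.data` actually holds int16 pixels like the Python slice (an assumption about `src`, not confirmed above), then passing `torch::kFloat32` makes each pair of int16 values be read as one float32 bit pattern. A 240x240 int16 buffer is also only 115,200 bytes, half of the 230,400 bytes a 240x240 float32 view needs, so the view would read past the end of the buffer. A standard-C++ sketch of that reinterpretation (the helper names `reinterpretAsFloat` and `float32ViewBytes` are hypothetical):

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: view raw int16 storage as float32 bit patterns,
// which is what from_blob(..., kFloat32) effectively does to int16 memory.
// Only whole floats that fit inside the buffer are read here; from_blob
// itself does not check the shape against the real buffer size.
std::vector<float> reinterpretAsFloat(const std::vector<std::int16_t>& raw) {
    const std::size_t bytes = raw.size() * sizeof(std::int16_t);
    std::vector<float> out(bytes / sizeof(float));
    if (!out.empty()) {
        std::memcpy(out.data(), raw.data(), out.size() * sizeof(float));  // bit copy, no conversion
    }
    return out;
}

// Bytes a float32 view of an h x w x c tensor expects to find.
std::size_t float32ViewBytes(std::size_t h, std::size_t w, std::size_t c) {
    return h * w * c * sizeof(float);
}
```

If that assumption holds, the LibTorch counterpart of the Python `from_numpy` + float32 cast would likely be to wrap the buffer with its real dtype and then convert, e.g. `torch::from_blob(src.data, {240, 240, 1}, torch::kInt16).to(torch::kFloat32)`. Because `.to()` with a different dtype returns a new tensor, the result also no longer depends on `src` staying alive.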

I don't know for sure whether this is the key point. I need help.

Edit: those C++ output values are the result after `torch.div(255)`. I don't think that is the key point.
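Since the printed C++ values are taken after dividing by 255 while the Python tensor is never divided, one way to test whether the scaling explains the whole difference is to multiply the C++ values back by 255 and compare them with the Python values within a small tolerance. A standard-C++ sketch (the helper name `matchesAfterUnscaling` and the relative tolerance are hypothetical choices):

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical check: undo the div(255) applied on the C++ side and compare
// element-wise with the unscaled Python values, using a relative tolerance.
bool matchesAfterUnscaling(const std::vector<float>& pythonVals,
                           const std::vector<float>& cppVals,
                           float scale = 255.0f,
                           float tol = 1e-3f) {
    if (pythonVals.size() != cppVals.size()) {
        return false;
    }
    for (std::size_t i = 0; i < pythonVals.size(); ++i) {
        const float diff = std::fabs(cppVals[i] * scale - pythonVals[i]);
        if (diff > tol * std::fmax(1.0f, std::fabs(pythonVals[i]))) {
            return false;
        }
    }
    return true;
}
```

If the values still disagree after unscaling, the difference comes from an earlier step, for example how `src.data` is interpreted by `from_blob`.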