Hi,

I am training a model for medical image processing.

The data is too large to load into memory at once, so I split it into thousands of CSV files, each containing one sample.
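The dataset code reads each file as one flat sequence of 2 × 33³ + 1 = 71,875 values: the first 33×33×33 volume, then the second volume, then the label. Below is a minimal sketch of writing and reading one sample in that layout with NumPy only. The helper names `write_sample` and `read_sample` and the single-row file layout are assumptions for illustration, not the actual preprocessing used here.

```python
import os
import tempfile

import numpy as np

VOXELS = 33 ** 3  # 35937 values per 33x33x33 volume


# Hypothetical helper: stores one sample as a single comma-separated row
# (flattened volume1, then flattened volume2, then the label).
def write_sample(path, volume1, volume2, label):
    row = np.concatenate([volume1.ravel(), volume2.ravel(), [label]])
    np.savetxt(path, row[np.newaxis, :], delimiter=",")


# Hypothetical helper: reads the row back and splits it like __getitem__ does.
def read_sample(path):
    # A single-row file is returned as a 1-D array of length 2 * VOXELS + 1
    row = np.loadtxt(path, delimiter=",", dtype=np.float32)
    volume1 = row[:VOXELS].reshape(33, 33, 33)
    volume2 = row[VOXELS:-1].reshape(33, 33, 33)
    label = row[-1]
    return volume1, volume2, label


# Round-trip one random sample through a temporary file
rng = np.random.default_rng(0)
v1 = rng.random((33, 33, 33))
v2 = rng.random((33, 33, 33))
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample_0.csv")
    write_sample(path, v1, v2, 1.0)
    r1, r2, lab = read_sample(path)
print(r1.shape, r2.shape, lab)
```

Because the values are written as text and read back as `float32`, the round trip matches the originals only up to single-precision rounding.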

Here is my dataset implementation:

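```python
import numpy as np
import torch
import torch.utils.data as data


class Mydataset(data.Dataset):
    def __init__(self, csvlist):
        # List of CSV file paths, one sample per file
        self.csvlist = csvlist

    def __len__(self):
        return len(self.csvlist)

    def __getitem__(self, idx):
        csvfile = self.csvlist[idx]
        # Passing the path lets np.loadtxt open and close the file itself
        inputs = np.loadtxt(csvfile, delimiter=",", dtype=np.float32)
        inputs = torch.from_numpy(inputs)

        # First 35937 (= 33^3) values: first input volume, with a channel dim
        input1 = inputs[:35937].view(1, 33, 33, 33)
        # Next 35937 values (all but the last): second input volume
        input2 = inputs[35937:-1].view(1, 33, 33, 33)
        # Last value: the label, stored as a 1-element tensor
        label = torch.zeros(1)
        label[0] = inputs[-1]

        return input1, input2, label
```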

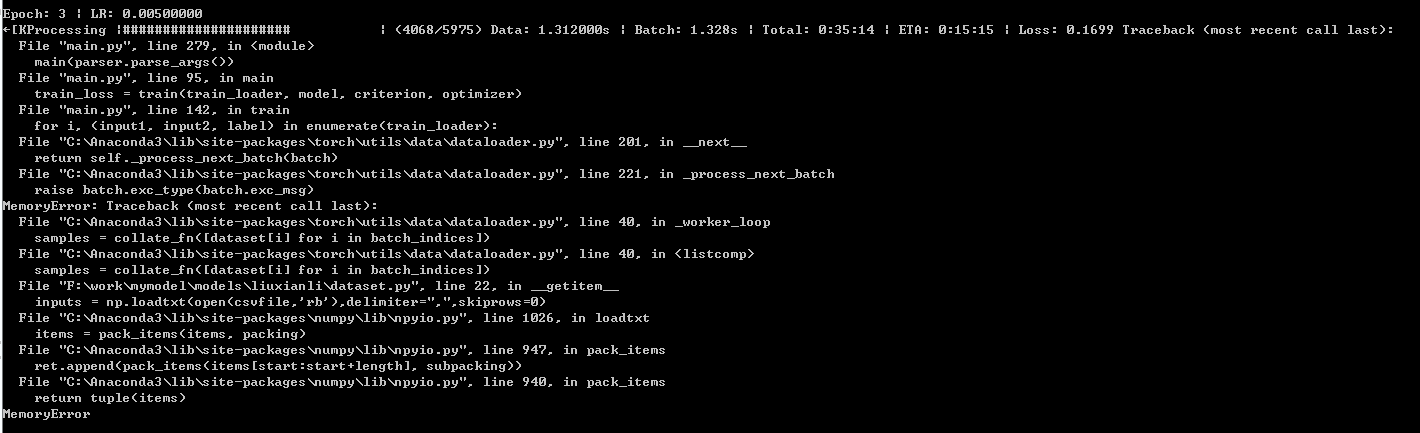

The error occurs during the third epoch.

The model runs on a desktop with an Intel i7-6700K CPU, a GTX 1080 Ti graphics card, and 16 GB of RAM. Does anybody have an idea what is causing this? Thanks for any help.