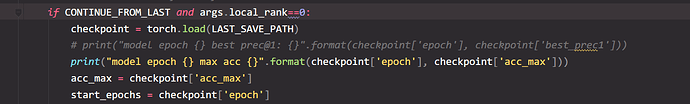

Here is my model. My problem is that when I load the pretrained parameters only in the rank 0 process, the other values in the checkpoint, such as ‘acc_max’, are not synced across processes. It seems that only the model weights are synced across ranks. What should I do to make sure that everything saved in the checkpoint is loaded on all processes?

(PS: If I remove the ‘args.local_rank==0’ check, I watch gpustat and see two processes running on GPU 0, which is not what I want.)

Thanks, bro!

Hi,

As I understand it, you’d like to broadcast the value of acc_max from rank 0 to all other ranks?

In that case, you can simply convert it to a tensor and call dist.broadcast:

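acc_max = checkpoint['acc_max'] if args.local_rank == 0 else 0.0  # only rank 0 loaded the checkpoint

acc_tensor = torch.tensor([float(acc_max)])  # with the NCCL backend, create this on the rank's GPU

torch.distributed.broadcast(acc_tensor, src=0)  # src=0: every rank receives rank 0's value

acc_max_from_rank_0 = acc_tensor.item()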
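If the checkpoint holds several non-tensor entries, converting each one to a tensor gets tedious. One option is `torch.distributed.broadcast_object_list`, which pickles arbitrary Python objects on the source rank and sends them to the others. The sketch below is only an illustration under some assumptions: the helper names (`broadcast_checkpoint_meta`, `demo`), the `epoch` key, and the placeholder values are hypothetical, and it starts a single-process `gloo` group so it can run on a CPU-only machine. In your DDP script the process group already exists, so only the helper would be needed.

```python
import os

import torch.distributed as dist


def broadcast_checkpoint_meta(meta, src=0):
    """Send a picklable object from rank `src` to every rank and return it."""
    # Every rank passes a list of the same length; on non-source ranks the
    # contents are overwritten with what the source rank sent.
    holder = [meta if dist.get_rank() == src else None]
    # With the NCCL backend, each rank should call torch.cuda.set_device(...)
    # for its own GPU before this call.
    dist.broadcast_object_list(holder, src=src)
    return holder[0]


def demo():
    """Hypothetical single-process run (world_size=1) for illustration only."""
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29511")
    dist.init_process_group(backend="gloo", rank=0, world_size=1)
    try:
        meta = None
        if dist.get_rank() == 0:
            # Placeholder standing in for the real checkpoint, e.g.
            # checkpoint = torch.load(path, map_location="cpu")
            checkpoint = {"acc_max": 0.91, "epoch": 12}
            # Keep only the small non-tensor entries; DDP already syncs weights.
            meta = {key: checkpoint[key] for key in ("acc_max", "epoch")}
        return broadcast_checkpoint_meta(meta, src=0)
    finally:
        dist.destroy_process_group()
```

After the call, every rank holds the same dictionary, so `meta["acc_max"]` can be used everywhere. As for the two processes showing up on GPU 0: a likely cause (an assumption, since I can't see how the checkpoint was saved) is that `torch.load` restores tensors to the device they were saved from, and passing `map_location="cpu"` or the rank's own device usually avoids that.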