I wrote this code for hierarchical RNN, which is not training at all. The model takes in 4 dimensional input for text based dialogue conversations,

[batch size, num of sentences, num of words, word embedding]

then i manipulate it in a way to resemble time distributed layer of keras

- [batch size* num of sentences, num of words, word embedding] → rnn

→ [batch size, num of sentences, hidden size (sentence embedding)]

then try to generate the next sentence in the in the conversation.

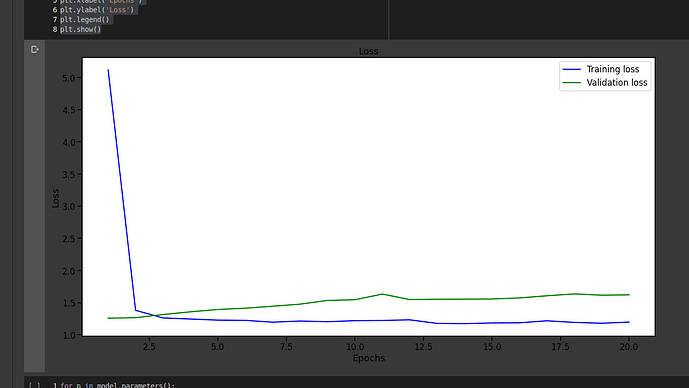

but what is happening is the loss is decreasing in the first epoch and then it stays constant the reason behind it is, when generating the words of the sentence I have the output dimension as the vocab size. but the the model is heavily predicting the 0th vocab as the predicted word everytime.

the figures are loss and val curve,

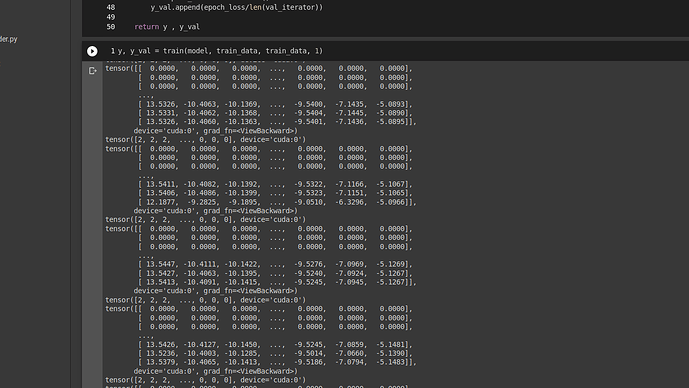

the outputs are - the output to the network and the target (after manipulating it in trg.view(-1, v.shape[-1]) for cross entropy loss )

can anyone help me with why is it not working?