I am using hooks to visualize how a filter and its activation map look at each iteration. The filter looks fine and has a non-zero initialization; however, the activation map corresponding to that filter is all 0’s. I don’t know why it is not printing the activation values produced by convolving with that filter. My model only has two filters in layer 1.

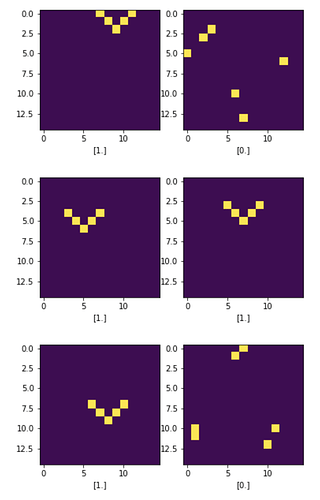

My dataset is a simple classification task with images like:

My hook:

def forward_hook(self, input,output):

fig=plt.figure(figsize=(9,45))

plt.subplot(1,4,1)

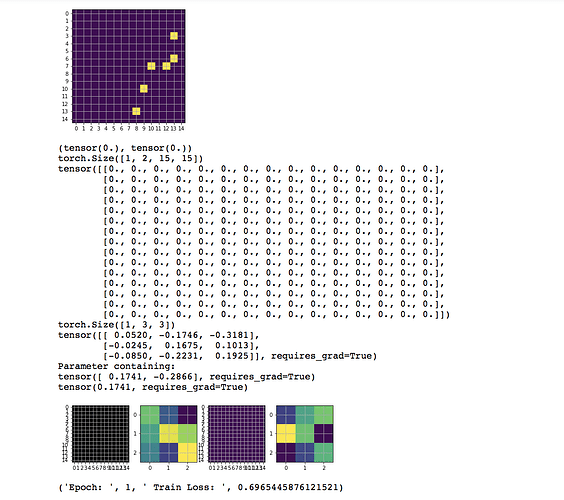

print(output[0][0][0].min(),output[0][0][0].max())

print(output[0][0][0])

plt.imshow(output[0][0][0].cpu().detach().numpy(),cmap='gray')

plt.xticks(np.arange(0, 15, 1.0))

plt.yticks(np.arange(0, 15, 1.0))

plt.grid(True)

plt.subplot(1,4,2)

print(model.conv1.weight[0].size())

print(model.conv1.weight[0][0])

plt.imshow(model.conv1.weight[0][0].cpu().detach().numpy())

plt.grid(True)

plt.subplot(1,4,3)

plt.imshow(output[0][0][1].cpu().detach().numpy())

plt.xticks(np.arange(0, 15, 1.0))

plt.yticks(np.arange(0, 15, 1.0))

plt.grid(True)

plt.subplot(1,4,4)

plt.imshow(model.conv1.weight[1][0].cpu().detach().numpy())

plt.grid(True)

plt.show()
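For reference, here is a small sketch of how chained indexing behaves on a 4-D array. It assumes the hook is attached to conv1, so that `output` has shape (batch, 2, 15, 15); the array below is only a NumPy stand-in with made-up values, not the real activations. Under that assumption, `output[0][0]` is the full map of the first filter, while `output[0][0][0]` and `output[0][0][1]` are single rows of that same map, so it may be worth printing the shapes inside the hook.

```python
import numpy as np

# Stand-in for a conv output laid out as (batch, channels, height, width).
# The sizes (1, 2, 15, 15) are an assumption based on Conv2d(1, 2, ...) applied to 15x15 inputs.
output = np.arange(1 * 2 * 15 * 15, dtype=np.float32).reshape(1, 2, 15, 15)

first_map = output[0][0]      # whole activation map of channel 0
second_map = output[0][1]     # whole activation map of channel 1
first_row = output[0][0][0]   # only row 0 of channel 0
second_row = output[0][0][1]  # only row 1 of channel 0

print(first_map.shape, second_map.shape)   # 2-D maps
print(first_row.shape, second_row.shape)   # 1-D rows
```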

Model:

import torch
import torch.nn.functional as F

class Conv(torch.nn.Module):
    def __init__(self):
        super(Conv, self).__init__()
        self.conv1 = torch.nn.Conv2d(1, 2, kernel_size=(3, 3), stride=1, padding=1)
        self.conv2 = torch.nn.Conv2d(2, 1, kernel_size=3, stride=1, padding=1)
        self.fc1 = torch.nn.Linear(1 * 15 * 15, 1)

    def forward(self, x):
        x = (self.conv1(x))
        x = (self.conv2(x))
        # batch_size is a global defined elsewhere in the script
        x = x.view(batch_size, -1)
        x = F.relu(self.fc1(x))
        return x
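To make the tensor shapes in this model explicit, here is a hedged sketch that traces them with forward hooks. `ConvSketch` and `make_hook` are hypothetical names, the input is a random batch rather than the real dataset, and the flatten step uses `x.size(0)` instead of the global `batch_size`, which is a swapped-in variation so that the sketch runs on its own.

```python
import torch
import torch.nn.functional as F

class ConvSketch(torch.nn.Module):
    """Same layers as the model in the post; only the flatten step differs."""
    def __init__(self):
        super().__init__()
        self.conv1 = torch.nn.Conv2d(1, 2, kernel_size=3, stride=1, padding=1)
        self.conv2 = torch.nn.Conv2d(2, 1, kernel_size=3, stride=1, padding=1)
        self.fc1 = torch.nn.Linear(1 * 15 * 15, 1)

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)
        x = x.view(x.size(0), -1)  # flatten using the actual batch dimension
        return F.relu(self.fc1(x))

shapes = {}

def make_hook(name):
    # Returns a forward hook that records the output shape under `name`.
    def hook(module, inputs, output):
        shapes[name] = tuple(output.shape)
    return hook

sketch = ConvSketch()
sketch.conv1.register_forward_hook(make_hook("conv1"))
sketch.conv2.register_forward_hook(make_hook("conv2"))

with torch.no_grad():
    y = sketch(torch.rand(3, 1, 15, 15))  # random batch of three 15x15 images

print(shapes, tuple(y.shape))
```

With kernel size 3, stride 1 and padding 1, the spatial size stays 15x15 through both convolutions, which is what `Linear(1*15*15, 1)` expects.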

Output:

Top is the input image with its min and max. The big array is output[0][0][0], i.e. the activation map of filter 1. Below that are the 3×3 values of filter 1, and below those the two biases. Then come the visualizations of activation map 1, filter 1, activation map 2, and filter 2.

As you can see, it makes no sense for the activation map to be all 0’s; the dot product must produce non-zero values. Why are they not showing? I have done this many times, and sometimes I get some values, but then they disappear, so the behavior is inconsistent.
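One way to narrow this down is to check the numbers before plotting them. The sketch below is only an illustration under assumptions: `conv1` is a freshly initialized stand-in for `model.conv1`, `stats_hook` is a hypothetical hook name, and the input is random rather than the real data. It captures the layer's input and output in a forward hook, prints per-channel statistics, and compares the output against a manual `F.conv2d` call using the same weights and bias.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Stand-in for model.conv1 from the post (freshly initialized, not the trained layer).
conv1 = torch.nn.Conv2d(1, 2, kernel_size=3, stride=1, padding=1)

captured = {}

def stats_hook(module, inputs, output):
    # `inputs` is a tuple of the positional arguments passed to the module.
    captured["input"] = inputs[0].detach()
    captured["output"] = output.detach()

handle = conv1.register_forward_hook(stats_hook)
with torch.no_grad():
    conv1(torch.rand(4, 1, 15, 15))  # random batch; an assumption, not the real images
handle.remove()

out = captured["output"]
for c in range(out.shape[1]):
    fmap = out[0, c]  # full 2-D map of channel c for the first sample
    print(c, tuple(fmap.shape), fmap.min().item(), fmap.max().item())

# Recompute the convolution by hand from the captured input and the layer parameters.
with torch.no_grad():
    manual = F.conv2d(captured["input"], conv1.weight, conv1.bias, stride=1, padding=1)
print(torch.allclose(out, manual, atol=1e-6))
```

Printing the statistics of `captured["input"]` in the same way can also show whether the layer is receiving the data you expect at that iteration.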