I have an array of features, and I want to output a class label for each of the features inside the array.

The example features array is as below:

features_arr = array([feature1], [feature2], [feature3])

In this example, each of the features inside features_arr (e.g. feature1) has dimension 1x256. How can I get outputs such that each entry, e.g. features_arr[0], is assigned to a specific class (e.g. feature1 belongs to class 1, feature2 belongs to another class)?

It should be mentioned that there are some spatial relationships among the features. That's why I want to pass features_arr to a neural network, so that it learns the spatial relationships and outputs a class label for each of the features.

output example = array(0,1,0)

Thanks in advance.

If I understand your use case correctly, you would like to use sequential data where each timestep belongs to one specific class?

If so, then you could indeed pass the input data as [batch_size, seq_len, nb_features] to a model (the actual shape depends on the modules used) and use a corresponding target with the shape [batch_size, seq_len].

Here is a small code snippet overfitting a random dataset in this shape:

import torch
import torch.nn as nn

batch_size, seq_len, nb_features = 10, 20, 256
nb_classes = 5

features_arr = torch.randn(batch_size, seq_len, nb_features)  # random inputs
target = torch.randint(0, nb_classes, (batch_size, seq_len))  # one class index per timestep

model = nn.Linear(nb_features, nb_classes)  # applied to the last dim, i.e. to each timestep on its own
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
criterion = nn.CrossEntropyLoss()

for epoch in range(1000):
    optimizer.zero_grad()
    output = model(features_arr)      # [batch_size, seq_len, nb_classes]
    output = output.permute(0, 2, 1)  # criterion expects [batch_size, nb_classes, seq_len]
    loss = criterion(output, target)
    loss.backward()
    optimizer.step()
    print('epoch {}, loss {:.3f}'.format(epoch, loss.item()))

print('predictions {}\ntarget {}'.format(torch.argmax(output, dim=1), target))
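As a side note on the permute call: nn.CrossEntropyLoss expects the class dimension in dim 1, which is why the output is rearranged from [batch_size, seq_len, nb_classes] to [batch_size, nb_classes, seq_len]. The minimal sketch below (random tensors, default mean reduction) shows that flattening the batch and sequence dimensions should give the same loss, so either form can be used.

```python
import torch
import torch.nn as nn

batch_size, seq_len, nb_classes = 10, 20, 5

# Stand-in for a model output of shape [batch_size, seq_len, nb_classes]
logits = torch.randn(batch_size, seq_len, nb_classes)
target = torch.randint(0, nb_classes, (batch_size, seq_len))

criterion = nn.CrossEntropyLoss()

# Option 1: move the class dimension to dim 1 -> [batch_size, nb_classes, seq_len]
loss_permuted = criterion(logits.permute(0, 2, 1), target)

# Option 2: flatten batch and sequence dims -> [batch_size * seq_len, nb_classes]
loss_flat = criterion(logits.reshape(-1, nb_classes), target.reshape(-1))

print(loss_permuted.item(), loss_flat.item())
```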

@ptrblck Thank you for your response.

My use case is not exactly sequential data.

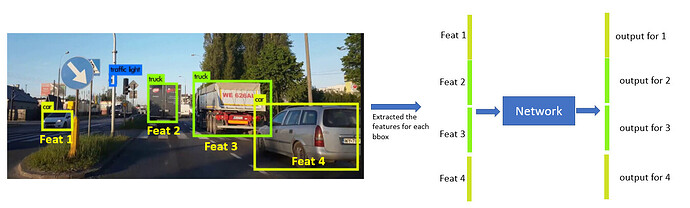

I have features corresponding to different detected objects in an image. I want to classify each of the detected objects based on the features and also want to take into account the spatial relationships of different objects to get the classification decision.

I tried to explain my need in the following figure:

Again many thanks.

I think this use case would also work using my code snippet, as the “sequence” would be the number of objects. Note, however, that my code snippet treats each object (or time step) independently, which doesn’t seem to fit your requirement of taking the spatial relationships between objects into account.

I don’t know what the best approach for this would be.
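One possible direction, offered only as an untested sketch and not as a recommendation: let the objects exchange information before the per-object classifier, for example with a self-attention layer. The class name ObjectClassifier, the choice of nn.TransformerEncoderLayer, and the values for nb_classes and nb_heads are all assumptions made for illustration.

```python
import torch
import torch.nn as nn

class ObjectClassifier(nn.Module):  # hypothetical name, for illustration only
    def __init__(self, nb_features=256, nb_classes=2, nb_heads=4):
        super().__init__()
        # Self-attention lets each object's representation depend on the other objects
        self.mixer = nn.TransformerEncoderLayer(
            d_model=nb_features, nhead=nb_heads, batch_first=True
        )
        # Per-object classification head, as in the linear snippet
        self.head = nn.Linear(nb_features, nb_classes)

    def forward(self, x):
        # x: [batch_size, nb_objects, nb_features]
        x = self.mixer(x)
        return self.head(x)  # [batch_size, nb_objects, nb_classes]

model = ObjectClassifier()
features_arr = torch.randn(1, 3, 256)  # one image with three detected objects
logits = model(features_arr)
print(logits.shape)  # torch.Size([1, 3, 2])
```

The output can be permuted and passed to nn.CrossEntropyLoss in the same way as in the linear example. Note that attention on its own has no notion of where each object sits in the image, so position information would still have to be part of the input.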

Thank you very much!

With regards to taking into account the spatial relationships of different objects, you could embed the center coordinates of each bounding box as additional channels in each corresponding feature vector, and then hope that you find a network design that can learn to handle these channels properly in conjunction with traditional feature channels.
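A minimal sketch of what appending the center coordinates could look like. The helper name add_box_centers, the (x_min, y_min, x_max, y_max) pixel box format, and the normalization by image size are assumptions for illustration and not something specified in this thread.

```python
import torch

def add_box_centers(features, boxes, image_size):
    """Append normalized box centers to each feature vector (hypothetical helper).

    features:   [nb_objects, nb_features]
    boxes:      [nb_objects, 4] as (x_min, y_min, x_max, y_max) in pixels (assumed format)
    image_size: (width, height) in pixels
    """
    width, height = image_size
    cx = (boxes[:, 0] + boxes[:, 2]) / 2 / width   # center x, scaled to [0, 1]
    cy = (boxes[:, 1] + boxes[:, 3]) / 2 / height  # center y, scaled to [0, 1]
    centers = torch.stack([cx, cy], dim=1)         # [nb_objects, 2]
    return torch.cat([features, centers], dim=1)   # [nb_objects, nb_features + 2]

features = torch.randn(3, 256)
boxes = torch.tensor([[0., 0., 100., 50.],
                      [200., 100., 400., 300.],
                      [50., 50., 150., 250.]])
augmented = add_box_centers(features, boxes, image_size=(640, 480))
print(augmented.shape)  # torch.Size([3, 258])
```

The first layer of the network would then need to accept 258 input features instead of 256.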

@Andrei_Cristea Thank you for your suggestion. Intuitively, your suggested method could be a reasonable solution.