I have two features from two CNNs that share the same parameters; in my case, each feature has shape <128, 764>. I want to apply dot-product attention to them. How can I implement this in PyTorch?

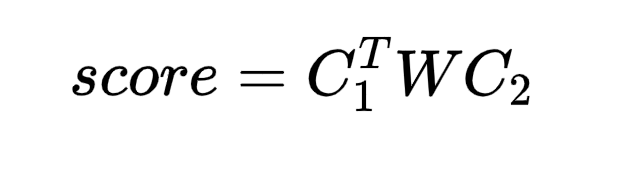

The picture below shows my attention score function:

Here, W denotes the learnable parameters.
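
To make the setup concrete, here is a minimal sketch of one possible reading, not a definitive implementation. It assumes the score is the bilinear (multiplicative) form `score(a_i, b_j) = a_i W b_j^T`, that 128 is the number of items and 764 is the feature dimension, and that the scores are normalized with a softmax over the second feature. The class name `BilinearDotAttention` and the variable names are hypothetical; the actual score function in the picture may differ.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BilinearDotAttention(nn.Module):
    """Hypothetical module: score(a_i, b_j) = a_i W b_j^T, softmax over j."""

    def __init__(self, dim: int):
        super().__init__()
        # Learnable matrix W, stored as a bias-free linear layer.
        # nn.Linear computes a @ weight.T, so weight.T plays the role of W.
        self.W = nn.Linear(dim, dim, bias=False)

    def forward(self, a: torch.Tensor, b: torch.Tensor):
        # a, b: (n, dim). Assumption: n = 128 items, dim = 764 features.
        scores = self.W(a) @ b.transpose(-2, -1)  # (n, n) pairwise scores
        weights = F.softmax(scores, dim=-1)       # each row sums to 1
        attended = weights @ b                    # (n, dim) weighted sum of b
        return attended, weights


torch.manual_seed(0)
feat_a = torch.randn(128, 764)  # stand-in for the first CNN's output
feat_b = torch.randn(128, 764)  # stand-in for the second CNN's output

attn = BilinearDotAttention(dim=764)
attended, weights = attn(feat_a, feat_b)
print(attended.shape, weights.shape)
```

If 128 is actually the batch size rather than a set of items to attend over, the inputs would need an extra sequence dimension before this kind of attention is meaningful.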