@ptrblck I have been trying to do augmentation with no luck.

This is the snippet for custom_dataset:

class CustomDataset(Dataset):
    def __init__(self, image_paths, target_paths, transform_images):
        self.image_paths = image_paths
        self.target_paths = target_paths
        self.transformm = transforms.Compose([tf.rotate(10),
                                              tf.affine(0.2,0.2)])
        self.transform = transforms.ToTensor()
        self.transform_images = transform_images
        self.mapping = {
            0: 0,
            255: 1
        }

    def mask_to_class(self, mask):
        for k in self.mapping:
            mask[mask==k] = self.mapping[k]
        return mask

    def __getitem__(self, index):
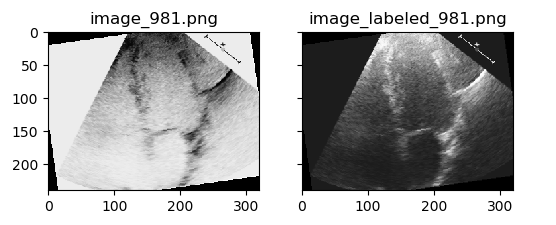

        image = Image.open(self.image_paths[index])
        mask = Image.open(self.target_paths[index])
        t_image = image.convert('L')
        t_image = self.transforms(t_image) # transform to tensor
        if any([img in image for img in self.transform_images]):
            t_image = self.transformm(t_image) #augmentation
        mask = torch.from_numpy(numpy.array(mask, dtype=numpy.uint8))
        mask = self.mask_to_class(mask)
        mask = mask.long()
        return t_image, mask, self.image_paths[index], self.target_paths[index]

    def __len__(self):
        return len(self.image_paths)

And here is the snippet for splitting the dataset and defining the dataloaders:

import glob
import random

import torch

from custom_dataset import CustomDataset

folder_data = glob.glob("F:\\my_data\\imagesResized\\*.png")
folder_mask = glob.glob("F:\\my_data\\labelsResized\\*.png")

folder_data.sort(key = len)
folder_mask.sort(key = len)
#print(folder_data)

len_data = len(folder_data)
print("count of dataset: ", len_data)
print(80 * '_')

test_image_paths = folder_data[794:] #793
print("count of test images is: ", len(test_image_paths))
test_mask_paths = folder_mask[794:]
print("count of test mask is: ", len(test_mask_paths))

assert len(folder_data) == len(folder_mask)

indices = list(range(len(folder_data)))
#print(indices)
random.shuffle(indices)
#print(indices)
indices.copy()
#print(70 * '_')
#print(indices)

image_indices = [folder_data[i] for i in indices]
mask_indices = [folder_mask[i] for i in indices]
#print(mask_indices)

split_1 = int(0.6 * len(image_indices))
split_2 = int(0.8 * len(image_indices)+1)

train_image_paths = image_indices[:split_1]
print("count of training images is: ", len(train_image_paths))
train_mask_paths = mask_indices[:split_1]
print("count of training mask is: ", len(train_mask_paths))

valid_image_paths = image_indices[split_1:split_2]
print("count of validation image is: ", len(valid_image_paths))
valid_mask_paths = mask_indices[split_1:split_2]
print("count of validation mask is: ", len(valid_mask_paths))
#print(valid_mask_paths)

print(80* '_')
print(valid_image_paths)

transform_images = glob.glob("F:\\my_data\\imagesResized\\P164_ES_1.png")
#transform_images = list(folder_data['P164_ES_1', 'P164_ES_2','P164_ES_3','P165_ED_1',
#                        'P165_ED_2', 'P165_ED_3', 'P165_ES_1', 'P165_ES_2','P165_ES_3',
#                        'P166_ED_1', 'P166_ED_2'])

train_dataset = CustomDataset(train_image_paths, train_mask_paths, transform_images)
train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=1, shuffle=True, num_workers=2)

valid_dataset = CustomDataset(valid_image_paths, valid_mask_paths, transform_images)
valid_loader = torch.utils.data.DataLoader(valid_dataset, batch_size=1, shuffle=True, num_workers=2)

test_dataset = CustomDataset(test_image_paths, test_mask_paths, transform_images)
test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=1, shuffle=False, num_workers=2)

dataLoaders = {
    'train': train_loader,
    'valid': valid_loader,
    'test': test_loader,
}
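
A side note on the split itself: the test paths are sliced from the sorted list, but the train and validation paths are then drawn from a shuffle of all indices, so test images appear able to end up in the training set as well. A minimal sketch of a split that keeps the three sets disjoint follows. The function name `split_pairs` and the parameters `n_test`, `train_frac`, and `seed` are made up for illustration and are not part of the code above.

```python
import random


def split_pairs(image_paths, mask_paths, n_test, train_frac=0.75, seed=0):
    """Split aligned image/mask path lists into disjoint train/valid/test sets."""
    assert len(image_paths) == len(mask_paths)
    pairs = list(zip(image_paths, mask_paths))

    # Hold out the last n_test pairs first, so they can never reach train/valid.
    cut = len(pairs) - n_test
    remaining, test = pairs[:cut], pairs[cut:]

    # Shuffle only the remaining pairs; zipping keeps each image with its mask.
    random.Random(seed).shuffle(remaining)
    n_train = int(train_frac * len(remaining))
    return {
        "train": remaining[:n_train],
        "valid": remaining[n_train:],
        "test": test,
    }
```

Each entry is a list of (image_path, mask_path) tuples, which can be unzipped with `zip(*splits["train"])` before being passed to the dataset.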

In the second snippet, transform_images = list(folder_data['P164_ES_1', 'P164_ES_2','P164_ES_3','P165_ED_1', 'P165_ED_2', 'P165_ED_3', 'P165_ES_1', 'P165_ES_2','P165_ES_3', 'P166_ED_1', 'P166_ED_2']) is meant to be the list of image names that I need to augment.
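
Since folder_data is a plain list, it cannot be indexed with a tuple of names, so one option is to compare the base file name of each path against a set of names. This is only a sketch: `AUGMENT_NAMES` and `should_augment` are hypothetical names, and it assumes the names in the list are the file names without the .png extension.

```python
from pathlib import PureWindowsPath

# Base names taken from the list in the post.
AUGMENT_NAMES = {
    "P164_ES_1", "P164_ES_2", "P164_ES_3",
    "P165_ED_1", "P165_ED_2", "P165_ED_3",
    "P165_ES_1", "P165_ES_2", "P165_ES_3",
    "P166_ED_1", "P166_ED_2",
}


def should_augment(image_path, names=AUGMENT_NAMES):
    """Return True if the file name without its extension is in `names`."""
    # PureWindowsPath parses backslash-separated paths on any operating system.
    return PureWindowsPath(image_path).stem in names
```

Inside __getitem__, a call such as `should_augment(self.image_paths[index])` could stand in for the `img in image` check, which tests against the opened image object rather than its path.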

I would really appreciate it if you could point me in the right direction. At the moment it is giving this error: __init__() takes 3 positional arguments but 4 were given
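
For reference, Python counts self in that message, so "takes 3 positional arguments but 4 were given" is what a constructor with two parameters besides self raises when it is called with three. The small reproduction below uses a hypothetical class `TwoArgDataset`. Because the CustomDataset shown above already accepts three parameters, one possibility (an assumption, not something confirmed here) is that an older copy of custom_dataset is being imported, or that a different constructor is raising the error.

```python
class TwoArgDataset:
    """Hypothetical stand-in for a dataset class with two parameters besides self."""

    def __init__(self, image_paths, target_paths):
        self.image_paths = image_paths
        self.target_paths = target_paths


try:
    # Three arguments are passed, but __init__ only accepts two besides self.
    TwoArgDataset(["a.png"], ["a_mask.png"], ["a.png"])
except TypeError as err:
    message = str(err)
    print(message)
```

Printing `CustomDataset.__init__` or `custom_dataset.__file__` before building the datasets is a quick way to check which definition is actually in use.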

I also don’t have any idea how to do it for the masks of the images (my task is segmentation).
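
From what I have read, the usual idea for segmentation is to draw the random parameters once and apply exactly the same geometric transform to the image and to its mask. A rough sketch of that idea follows. The helper names `sample_aug_params` and `augment_pair`, the default size, and the parameter ranges are assumptions for illustration; `rotate` and `affine` are the functions from torchvision.transforms.functional, applied here to PIL images before ToTensor.

```python
import random


def sample_aug_params(rng, max_angle=10.0, max_shift=0.2, size=(256, 256)):
    """Draw one set of random parameters to be shared by an image and its mask."""
    width, height = size
    angle = rng.uniform(-max_angle, max_angle)
    # The functional affine expects the translation in pixels, not as a fraction.
    dx = int(rng.uniform(-max_shift, max_shift) * width)
    dy = int(rng.uniform(-max_shift, max_shift) * height)
    return {"angle": angle, "translate": [dx, dy]}


def augment_pair(image, mask, params):
    """Apply identical rotation and translation to a PIL image and its PIL mask."""
    # Imported here so that the parameter sampling above works without torchvision.
    import torchvision.transforms.functional as TF

    outputs = []
    for img in (image, mask):
        img = TF.rotate(img, params["angle"])
        img = TF.affine(img, angle=0.0, translate=params["translate"],
                        scale=1.0, shear=0.0)
        outputs.append(img)
    return outputs[0], outputs[1]
```

In __getitem__ this would be called as `augment_pair(image, mask, sample_aug_params(random.Random(), size=image.size))` before converting either one to a tensor. Both functional calls use nearest-neighbour interpolation unless told otherwise, which should keep the mask values at 0 and 255 so that mask_to_class still works.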