I have to carry out a lot of matrix multiplications at the same time. They are part of my own neural network.

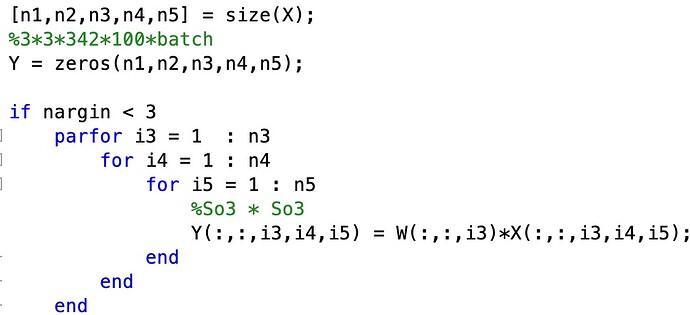

In MATLAB, I can handle this easily: I just use the `parfor` keyword.
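In PyTorch, the usual counterpart to a `parfor` over independent matrix products is not an explicit parallel loop but a single batched call. Here is a minimal sketch, assuming the matrices can be stacked into tensors of shape `[B, n, n]`. The sizes and variable names are made up for illustration.

```python
import torch

torch.manual_seed(0)
B, n = 6, 4                     # hypothetical: B independent (n x n) products
A = torch.randn(B, n, n)
X = torch.randn(B, n, n)

# Loop version: one small matmul per iteration (the kind of work a parfor would split up).
looped = torch.stack([A[b] @ X[b] for b in range(B)])

# Batched version: torch.bmm computes all B products in one call.
batched = torch.bmm(A, X)

print(torch.allclose(looped, batched, atol=1e-5))  # True
```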

However, things are different in PyTorch.

I implemented the same computation like this:

```python
def forward(self, x):
    """
    :param x: train or test data with shape [N, D_in, D_in, num, frame]
    :return: x, with each [D_in, D_in] slice x[i, :, :, z, j] left-multiplied by self.w[:, :, z]
    """
    for i in range(x.shape[0]):
        for j in range(x.shape[4]):
            for z in range(x.shape[3]):
                x[i, :, :, z, j] = self.w[:, :, z].mm(x[i, :, :, z, j])
    return x
```
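One common way to remove the three Python loops is to express the whole product as a single `torch.einsum`, or as a broadcast `torch.matmul` after a `permute`. The sketch below assumes `self.w` has shape `[D_in, D_in, num]`, as the indexing `self.w[:, :, z]` suggests. `forward_loop`, `forward_einsum` and `forward_matmul` are hypothetical standalone helpers that take `w` explicitly rather than methods of the module above. Unlike the original, the vectorized versions return a new tensor instead of writing into `x` in place. In-place writes of that kind can also interfere with autograd.

```python
import torch


def forward_loop(w, x):
    # Reference version of the original triple loop; writes into a copy, not into x.
    out = x.clone()
    for i in range(x.shape[0]):          # batch N
        for j in range(x.shape[4]):      # frame
            for z in range(x.shape[3]):  # num
                out[i, :, :, z, j] = w[:, :, z].mm(x[i, :, :, z, j])
    return out


def forward_einsum(w, x):
    # w: [D_in, D_in, num], x: [N, D_in, D_in, num, frame]
    # out[n, r, q, z, f] = sum_p w[r, p, z] * x[n, p, q, z, f]
    return torch.einsum('rpz,npqzf->nrqzf', w, x)


def forward_matmul(w, x):
    # Move the matrix dims last: x -> [N, num, frame, D_in, D_in]
    xp = x.permute(0, 3, 4, 1, 2)
    # w -> [num, 1, D_in, D_in] so it broadcasts over N and frame
    wp = w.permute(2, 0, 1).unsqueeze(1)
    out = torch.matmul(wp, xp)           # [N, num, frame, D_in, D_in]
    return out.permute(0, 3, 4, 1, 2)    # back to [N, D_in, D_in, num, frame]


torch.manual_seed(0)
N, D_in, num, frame = 2, 3, 4, 5
w = torch.randn(D_in, D_in, num)
x = torch.randn(N, D_in, D_in, num, frame)

ref = forward_loop(w, x)
print(torch.allclose(ref, forward_einsum(w, x), atol=1e-5))  # True
print(torch.allclose(ref, forward_matmul(w, x), atol=1e-5))  # True
```

Inside the module, `forward` would then reduce to `return torch.einsum('rpz,npqzf->nrqzf', self.w, x)`. Which variant is faster likely depends on the tensor shapes and the device, so timing both on real data is worthwhile.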

It is very slow!

I can't think of any way to speed this up.

Could you give me a hand? I would appreciate it!