I’m training a ResNet-50 on ImageNet using fastai v1 (1.0.60dev) with PyTorch 1.3.0. Mixed precision training gives substantial speed gains, since the lower VRAM consumption and a smaller image size of 224 let me use 4 times the batch size (= 512). The problem is that I am unable to select a good learning rate. I am using fastai’s lr_finder with SGD, but the suggested lr causes huge overfitting. Dividing the suggested lr by 100 still overfits a bit, while dividing it by 512 seems okay but trains slowly.
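To make the arithmetic concrete, here is a minimal sketch of the three learning rates being compared. The helper name `candidate_lrs` is made up for illustration, and `1e-1` is only a placeholder, not the value lr_finder actually suggested.

```python
def candidate_lrs(suggested_lr, divisors=(1, 100, 512)):
    """Map each divisor to the learning rate obtained by dividing the suggested value."""
    return {d: suggested_lr / d for d in divisors}


# 1e-1 is a hypothetical placeholder, NOT the real lr_finder suggestion.
for divisor, lr in candidate_lrs(1e-1).items():
    print(f"suggested lr / {divisor}: {lr:.2e}")
```

Swapping in the real suggestion shows how far apart the three settings are: dividing by 512 puts the learning rate almost three orders of magnitude below the suggested value.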

While these guesses work, I’m not sure how to choose a good learning rate in general. I thought about using APEX, but dynamic loss scaling already seems to be integrated into learn.to_fp16(). Training is done with learn.fit_one_cycle(), i.e. the 1-cycle policy. I think everything else is working fine.
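Since the value passed to fit_one_cycle() is the peak of the schedule rather than a constant rate, a rough pure-Python sketch of the 1-cycle shape may help when reasoning about it. This is not fastai's code: the function names are made up, and the defaults (`div_factor=25`, `pct_start=0.3`, final rate of `max_lr / (div_factor * 1e4)`, cosine annealing in both phases) are my understanding of the fastai v1 defaults and should be treated as assumptions.

```python
import math


def annealing_cos(start, end, pct):
    """Cosine interpolation: returns start at pct=0 and end at pct=1."""
    cos_out = math.cos(math.pi * pct) + 1
    return end + (start - end) / 2 * cos_out


def one_cycle_lr(step, total_steps, max_lr,
                 div_factor=25.0, pct_start=0.3, final_div=None):
    """Learning rate at a given step of a 1-cycle-style schedule (sketch).

    Assumed defaults, not taken from the post: warm up from
    max_lr / div_factor to max_lr over the first pct_start of training,
    then anneal down to max_lr / final_div.
    """
    if final_div is None:
        final_div = div_factor * 1e4
    warmup_steps = int(total_steps * pct_start)
    if step < warmup_steps:
        return annealing_cos(max_lr / div_factor, max_lr, step / warmup_steps)
    # Remaining steps anneal from the peak down to the final rate.
    decay_steps = max(1, total_steps - warmup_steps - 1)
    return annealing_cos(max_lr, max_lr / final_div,
                         (step - warmup_steps) / decay_steps)


# Hypothetical peak value; dividing it rescales the whole curve.
for max_lr in (1e-1, 1e-1 / 100, 1e-1 / 512):
    schedule = [one_cycle_lr(s, 1000, max_lr) for s in range(1000)]
    print(f"max_lr={max_lr:.2e}  start={schedule[0]:.2e}  "
          f"peak={max(schedule):.2e}  end={schedule[-1]:.2e}")
```

Under these assumptions, dividing the suggested lr by 100 or 512 scales the starting, peak, and final rates by the same factor, so the whole run is slower and not just the peak.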

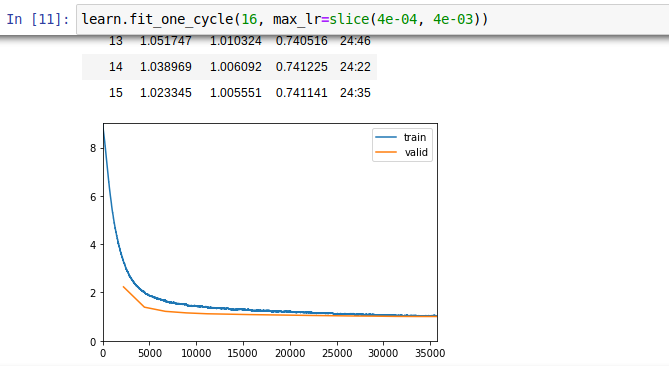

Below is the image for lr divided by 100

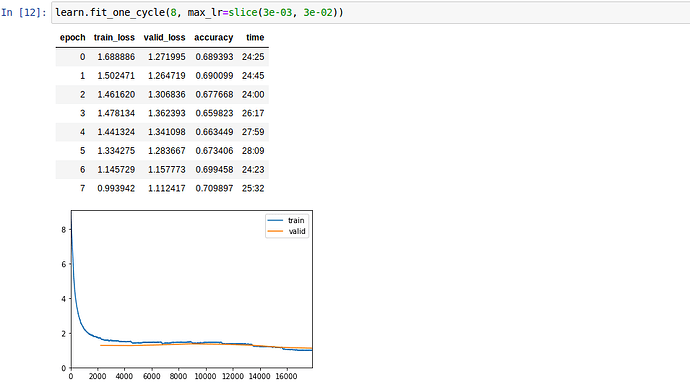

And this is for lr divided by 512