Hi,

I want to backpropagate through a Transformer block layer using a modified loss.

My goal: loss = ||f_{\Theta + \Delta P}(X) - Y||^2 + ||\Delta P||^2

For context, the usual loss: loss = ||f_{\Theta + \Delta}(X) - Y||^2

The problem is that I don't know how to compute the data term ||f_{\Theta + \Delta P}(X) - Y||^2 or how to add the regularizer ||\Delta P||^2.

What I did:

- After loss.backward(), I set param.grad = torch.matmul(param.grad, P). However, I don't think this is correct. For example, even though param.grad is accessible after loss.backward(), how can I compute the regularization loss ||\Delta P||^2?
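One possible way to get the penalty while keeping the gradient-projection idea is to snapshot the weights before training, so that \Delta = param - param0 is an ordinary autograd expression and ||\Delta P||^2 can be added to the loss before loss.backward(). The sketch below is only an illustration under assumptions that are not in the post: a single nn.Linear stands in for one weight matrix of the Transformer block, P is a square orthogonal projector built from a random basis, and the names theta0 and B are made up.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Stand-in for one weight matrix of the Transformer block (assumption).
layer = nn.Linear(8, 4, bias=False)
theta0 = layer.weight.detach().clone()   # snapshot of Theta before training

# Hypothetical projector onto a 3-dimensional subspace of the input space:
# P = B B^T with orthonormal columns B, so P is symmetric and P @ P == P.
B, _ = torch.linalg.qr(torch.randn(8, 3))
P = B @ B.T

X, Y = torch.randn(16, 8), torch.randn(16, 4)
optimizer = torch.optim.SGD(layer.parameters(), lr=0.01)

for _ in range(5):
    optimizer.zero_grad()
    delta = layer.weight - theta0              # Delta, tracked by autograd
    data_loss = (layer(X) - Y).pow(2).sum()    # ||f(X) - Y||^2
    reg_loss = (delta @ P).pow(2).sum()        # ||Delta P||^2
    loss = data_loss + reg_loss
    loss.backward()
    # Project the gradient so the SGD step stays in the subspace of P.
    layer.weight.grad = layer.weight.grad @ P
    optimizer.step()

delta = (layer.weight - theta0).detach()
```

With plain SGD and a true projector, every step is a multiple of grad @ P, so the accumulated \Delta satisfies \Delta P = \Delta. Setting weight_decay on the optimizer would add a term after the projection and break that property.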

It looks like you want to add L2 regularisation. There are many ways of achieving it. Assuming that you are using a simple SGD optimizer, I recommend setting weight_decay to a non-zero value when creating the optimizer. For example,

optimizer = optim.SGD(model.parameters(), lr=0.01, weight_decay=1e-4)

This will automatically apply an L2 penalty when the parameters are updated. Of course, you can use any other PyTorch optimizer of your choice.
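To make concrete what weight_decay does with plain SGD, here is a small sketch comparing it against the same penalty written into the loss by hand. The model, data and hyperparameters are placeholders. Note that this penalizes the full parameters \Theta, not the projected update \Delta P from the question.

```python
import copy
import torch
import torch.nn as nn

torch.manual_seed(0)
X, Y = torch.randn(16, 8), torch.randn(16, 4)

model_a = nn.Linear(8, 4)            # placeholder model
model_b = copy.deepcopy(model_a)     # identical starting weights
wd, lr = 1e-4, 0.01

# (a) Penalty applied by the optimizer: SGD adds wd * param to each gradient.
opt_a = torch.optim.SGD(model_a.parameters(), lr=lr, weight_decay=wd)
opt_a.zero_grad()
(model_a(X) - Y).pow(2).sum().backward()
opt_a.step()

# (b) The same penalty written into the loss: (wd / 2) * ||Theta||^2.
opt_b = torch.optim.SGD(model_b.parameters(), lr=lr)
opt_b.zero_grad()
penalty = sum(p.pow(2).sum() for p in model_b.parameters())
((model_b(X) - Y).pow(2).sum() + 0.5 * wd * penalty).backward()
opt_b.step()

# Both variants should take the same step.
same = all(
    torch.allclose(pa, pb, atol=1e-5)
    for pa, pb in zip(model_a.parameters(), model_b.parameters())
)
```

The explicit form (b) is the one that generalizes: the hand-written penalty can be swapped for any differentiable expression, which weight_decay cannot express.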

What I actually want is for the neural network to update only along the subspace defined by P, i.e. to go from the first objective to the second:

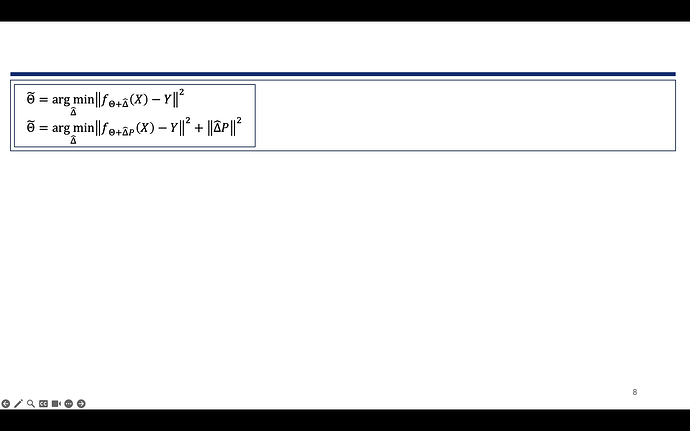

\tilde{\Theta} = \arg\min_{\hat{\Delta}} ||f_{\Theta + \hat{\Delta}}(X) - Y||^2

\tilde{\Theta} = \arg\min_{\hat{\Delta}} ||f_{\Theta + \hat{\Delta} P}(X) - Y||^2 + ||\hat{\Delta} P||^2
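One way this objective might be set up directly is to freeze \Theta, make \hat{\Delta} the only trainable tensor, and use \Theta + \hat{\Delta} P as the effective weight, so that autograd handles both terms without touching param.grad. The following is a minimal sketch, not a Transformer implementation. It assumes a single linear weight, a shape convention of \hat{\Delta} as (out, k) and P as (k, in), and a P with orthonormal rows. SubspaceLinear and offset are hypothetical names.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class SubspaceLinear(nn.Module):
    """Linear map with frozen weight Theta and trainable offset Delta @ P."""

    def __init__(self, linear: nn.Linear, P: torch.Tensor):
        super().__init__()
        # Buffers are not returned by parameters(), so they are never updated.
        self.register_buffer("theta", linear.weight.detach().clone())
        self.register_buffer("P", P)                      # fixed, shape (k, in)
        self.delta = nn.Parameter(torch.zeros(linear.out_features, P.shape[0]))

    def offset(self) -> torch.Tensor:
        return self.delta @ self.P                        # Delta P, shape (out, in)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return F.linear(x, self.theta + self.offset())    # f_{Theta + Delta P}(x)

torch.manual_seed(0)
# Hypothetical subspace: 3 orthonormal rows spanning part of the input space.
P = torch.linalg.qr(torch.randn(8, 3))[0].T.contiguous()
layer = SubspaceLinear(nn.Linear(8, 4, bias=False), P)
X, Y = torch.randn(16, 8), torch.randn(16, 4)
optimizer = torch.optim.SGD(layer.parameters(), lr=1e-3)

theta_before = layer.theta.clone()
losses = []
for _ in range(20):
    optimizer.zero_grad()
    # ||f_{Theta + Delta P}(X) - Y||^2 + ||Delta P||^2
    loss = (layer(X) - Y).pow(2).sum() + layer.offset().pow(2).sum()
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```

Because only delta is a parameter, any optimizer can be used unchanged, and the update to the effective weight stays in the row space of P by construction. For a real Transformer block the same wrapping would have to be applied to each weight matrix that should be constrained.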