I want to pass the kernel parameters from each training iteration into the regularization term. I use the following code:

    model = MNISTnet()
    print(model.state_dict())

    # Print the name and the first kernel row of the first parameter only
    for i, j in model.named_parameters():
        print(i)
        print(j[0][0][0])
        break

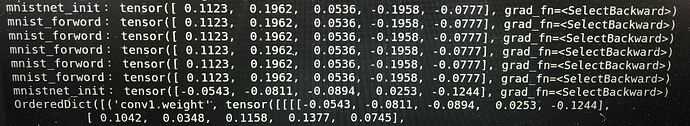

The result is shown above. I found that named_parameters() returns different kernel parameters: the last two weights shown are the results of named_parameters(). I don't know why it returns freshly re-initialized kernel parameters rather than the trained parameters from each iteration. Any suggestions on this problem would be appreciated. Thank you.
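To make the question concrete, here is a minimal sketch of what reading the kernel weights inside the training loop might look like. It rests on several assumptions: `TinyNet` is a hypothetical stand-in for `MNISTnet` (whose definition is not shown), the L1 penalty and `reg_weight` are illustrative choices, and the data is random. One possible explanation for the mismatch, which cannot be confirmed without the full training script, is that calling `MNISTnet()` again creates a new, randomly initialized model, so its parameters differ from those of the instance being trained.

```python
import torch
import torch.nn as nn

class TinyNet(nn.Module):
    """Hypothetical stand-in for MNISTnet, which is not shown in the post."""
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(1, 4, kernel_size=3)
        self.fc = nn.Linear(4 * 26 * 26, 10)

    def forward(self, x):
        x = torch.relu(self.conv(x))
        return self.fc(x.flatten(1))

def kernel_l1_penalty(model):
    # Sum of |w| over conv kernels, read from the live parameters of this instance
    return sum(p.abs().sum()
               for name, p in model.named_parameters()
               if "conv" in name and name.endswith("weight"))

torch.manual_seed(0)
model = TinyNet()  # create the model once, outside the training loop
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
criterion = nn.CrossEntropyLoss()
reg_weight = 1e-3  # assumed regularization strength

# Random data in place of MNIST batches
x = torch.randn(8, 1, 28, 28)
y = torch.randint(0, 10, (8,))

before = model.conv.weight.detach().clone()
for step in range(3):
    optimizer.zero_grad()
    # The penalty is computed from the same model that is being trained
    loss = criterion(model(x), y) + reg_weight * kernel_l1_penalty(model)
    loss.backward()
    optimizer.step()

after = dict(model.named_parameters())["conv.weight"]
changed = not torch.equal(before, after)
same_as_state_dict = torch.equal(after, model.state_dict()["conv.weight"])
fresh_differs = not torch.equal(TinyNet().conv.weight, after)
print(changed, same_as_state_dict, fresh_differs)
```

In this sketch, `named_parameters()` and `state_dict()` on the same instance should agree after training, while a freshly constructed `TinyNet()` should hold different weights.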