Hello everybody,

I’m trying to reduce the inference time of my system by running the two halves of the model in parallel. I’m using DETR for object detection.

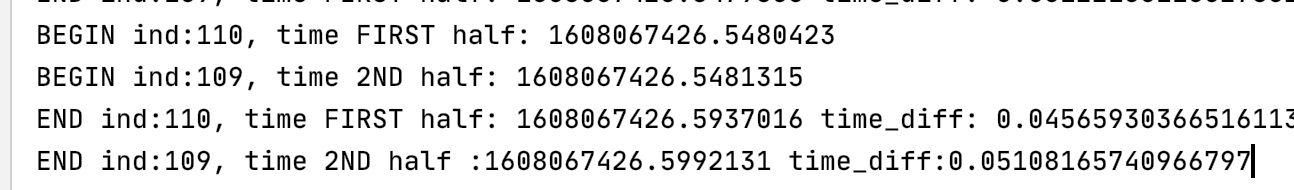

Basically, I have two threads running in parallel: one runs the backbone and the other runs the detection part of the network. For this to work, I need to keep the feature map that the backbone produced on the previous iteration. This increases my memory usage by a factor of 4, and I don’t understand why. As I understand it, usage should only double, since at some point I hold both the tensors from the previous iteration and the tensors from the current iteration.

I’m printing the memory allocated (2178970624 bytes) and the memory reserved (4309647360 bytes), and the reserved figure is roughly twice the allocated one.
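To put those two numbers in perspective, here is a small sketch that converts them to MiB and computes their ratio. The helper name `to_mib` and the variable names are made up for illustration. The values are the ones printed above: `torch.cuda.memory_allocated()` reports the bytes occupied by live tensors, while `torch.cuda.memory_reserved()` reports the bytes held by PyTorch's caching allocator, so the second can be larger than the first.

```python
# Values copied from the printout above (bytes).
allocated = 2178970624  # torch.cuda.memory_allocated()
reserved = 4309647360   # torch.cuda.memory_reserved()


def to_mib(num_bytes):
    """Convert a byte count to mebibytes (hypothetical helper)."""
    return num_bytes / (1024 ** 2)


ratio = reserved / allocated

print(f"allocated: {to_mib(allocated):.2f} MiB")
print(f"reserved:  {to_mib(reserved):.2f} MiB")
print(f"reserved / allocated: {ratio:.2f}")
```

In the real script the two constants would be replaced by the live calls, printed once per iteration so that growth over time is visible.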

Here is some of the code (if some part is missing, it is because I had to remove a lot of lines to post it here):

    import threading
    from queue import Queue

    import torch
    from tqdm import trange


    def run_backbone2(model, data_queue, backbone_results_queue):
        # Stage 1: run the backbone and pass the feature map to the detection thread.
        while True:
            samples, ind, targets = data_queue.get(block=True)
            src, mask, pos = model.run_backbone(samples)
            del samples
            # torch.cuda.empty_cache()
            # backbone_results_queue.put((src.cpu(), mask.cpu(), pos.cpu(), ind, targets))
            backbone_results_queue.put((src, mask, pos, ind, targets))


    def run_detection2(model, backbone_results_queue, outputs_queue):
        # Stage 2: run the detection part on the feature map from stage 1.
        while True:
            src, mask, pos, ind, targets = backbone_results_queue.get(block=True)
            # outputs = model.run_detection(src.cuda(), mask.cuda(), pos.cuda())
            outputs = model.run_detection(src, mask, pos)
            del src
            del mask
            del pos
            # torch.cuda.empty_cache()
            outputs_queue.put((outputs, ind, targets))


    @torch.no_grad()
    def evaluate_parallel(model, criterion, postprocessors, dataset, device, output_dir,
                          virtual_epoch_len=100):
        model.cuda()
        model.eval()
        criterion.eval()

        # Each queue holds at most one item.
        data_queue = Queue(1)
        backbone_results_queue = Queue(1)
        output_queue = Queue(1)

        backbone_process = threading.Thread(
            target=run_backbone2,
            args=(model, data_queue, backbone_results_queue))
        backbone_process.start()

        detection_process = threading.Thread(
            target=run_detection2,
            args=(model, backbone_results_queue, output_queue))
        detection_process.start()

        for _ind in trange(virtual_epoch_len, desc='COCO evaluation: '):
            samples, _targets = dataset[_ind]
            samples = samples.unsqueeze(0)
            samples = samples.to(device)

            if _ind > 0:
                # Collect the result of the previous iteration.
                outputs, ind, targets = output_queue.get(block=True)
                del outputs

            _targets = [_targets]
            _targets = [{k: v.to(device) for k, v in t.items()} for t in _targets]
            data_queue.put((samples, _ind, _targets))

            print(torch.cuda.memory_allocated())
            print(torch.cuda.memory_reserved())