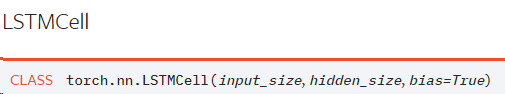

In RNN, GRU, and LSTM layers, the only size I seem to control is the hidden state size, which also dictates the output size. Isn’t this a limitation? With this setup, the complexity of the network seems to be tied to the output size. My question is: is there any way to make things more flexible than what these arguments allow: