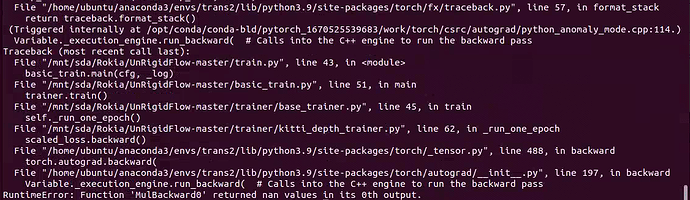

For a specific neural network designed for supervised stereo matching (stereo matching, or disparity estimation, is the process of finding the pixels in multiscopic views that correspond to the same 3D point in the scene), I am trying to replace the supervised losses with unsupervised losses while keeping the same network architecture. These unsupervised losses have been used before for the same task, but with different network architectures. In my first training run, the loss becomes NaN after a few epochs. I used `torch.autograd.set_detect_anomaly(True)`.
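Anomaly mode points to the backward operation that produced a NaN, but when several unsupervised terms are summed into one loss, it also helps to check each term in the forward pass. A minimal sketch, assuming each term is a scalar tensor stored in a dict; the helper `combine_losses` and the term names and weights are hypothetical placeholders, not the actual losses of this network:

```python
import torch


def combine_losses(terms, weights):
    """Weighted sum of named loss terms; raise if any term is NaN or inf.

    `terms` maps a name to a scalar tensor; `weights` maps the same names to floats.
    Checking the terms one by one shows *which* component went non-finite,
    which the single total loss value cannot tell you.
    """
    bad = [name for name, value in terms.items() if not torch.isfinite(value).all()]
    if bad:
        raise FloatingPointError(f"non-finite loss terms: {bad}")
    return sum(weights[name] * value for name, value in terms.items())


# Hypothetical terms and weights, for illustration only.
terms = {"photometric": torch.tensor(0.5), "smoothness": torch.tensor(2.0)}
weights = {"photometric": 1.0, "smoothness": 0.25}
total = combine_losses(terms, weights)
print(total.item())  # 0.5 * 1.0 + 2.0 * 0.25 = 1.0
```

Logging the weighted terms separately in this way also shows whether one term dominates the total, which is the practical side of choosing the loss weights.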

Could anyone guide me on how to choose appropriate loss parameters or learning rates? I reduced the learning rate from 0.0001 to 0.00001, which allows more training epochs, but the loss still becomes NaN. Could anyone explain the relationship between the loss parameters, the learning rate, and NaN values in the loss, and whether small or large values are better and why? Thank you so much in advance.
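The link between the learning rate and NaN can be seen on a toy problem much simpler than a stereo network: plain gradient descent on f(x) = x², whose gradient is 2x. This is an illustrative sketch, not a model of the actual training run:

```python
def gradient_descent(lr, x0=1.0, steps=50):
    """Minimise the toy loss f(x) = x**2 with plain gradient descent.

    Each update is x <- x - lr * f'(x) = x * (1 - 2 * lr), so the iterate
    shrinks when 0 < lr < 1 and grows geometrically when lr > 1.
    """
    x = x0
    for _ in range(steps):
        x = x - lr * 2 * x
    return x


print(gradient_descent(0.1))              # factor 0.8 per step: close to 0
print(gradient_descent(1.5))              # factor -2 per step: 2**50, diverging
print(gradient_descent(1.5, steps=2000))  # nan: overflows to inf, then inf - inf
```

A step that is too large overshoots the minimum by more than it corrects, so the values grow until they overflow to inf, and the next operation such as inf - inf yields NaN, which then spreads to everything it touches. A step that is too small is stable but slow. With plain SGD, scaling a loss term by a constant scales its gradients, and therefore the step, by the same constant; adaptive optimizers such as Adam largely normalize the overall scale away, but the relative weights between terms still matter.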

When you encounter NaN values during training, it’s usually a sign that something is going wrong in the optimization process. NaN values typically result from exploding gradients or from numerical instability in the loss function itself (for example, taking the log of zero, dividing by zero, or differentiating a square root at zero). Here are some tips:

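- Set the loss weights so that no single term dominates the total loss; a term that is orders of magnitude larger than the others also dominates the gradients and can destabilize training.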

- Choose a suitable learning rate to avoid overshooting or slow convergence; consider using learning rate schedulers.

- Apply gradient clipping to limit gradient magnitudes and prevent exploding gradients.

- Use batch normalization layers to improve training stability.

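- Make sure the unsupervised loss functions are numerically stable: add a small epsilon inside logarithms, square roots, and denominators, and clamp values where needed, to avoid NaN or infinity values.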

- Initialize weights properly using techniques like Xavier or He initialization.
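One common concrete source of NaN is differentiating `sqrt` at zero: the derivative of √u is 1/(2√u), which is infinite at u = 0, and multiplying that by a zero inner gradient gives NaN. A minimal PyTorch sketch comparing a naive `torch.sqrt(diff ** 2)` with the Charbonnier penalty √(diff² + ε²); whether this network's losses contain such a term is an assumption, and `eps=1e-3` is an illustrative value:

```python
import torch


def charbonnier(diff, eps=1e-3):
    """Smooth approximation of |diff|: sqrt(diff**2 + eps**2), averaged over elements.

    Its gradient, diff / sqrt(diff**2 + eps**2), stays finite at diff == 0.
    """
    return torch.sqrt(diff ** 2 + eps ** 2).mean()


# A zero residual, e.g. a pixel that is reconstructed perfectly.
diff = torch.zeros(4, requires_grad=True)

# Naive version: sqrt backward gives inf at 0, pow backward multiplies it by 0 -> nan.
torch.sqrt(diff ** 2).mean().backward()
naive_grad = diff.grad.clone()

diff.grad = None  # reset the accumulated gradient before the second backward pass
charbonnier(diff).backward()
safe_grad = diff.grad.clone()

print(naive_grad)  # tensor([nan, nan, nan, nan])
print(safe_grad)   # tensor([0., 0., 0., 0.])
```

The same idea applies to `torch.log(x + eps)` and to denominators such as `x / (std + eps)`.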


Thank you so much

It was an exploding gradient problem, and I used gradient clipping to solve it.
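For reference, gradient clipping in PyTorch is usually applied between `backward()` and `optimizer.step()` with `torch.nn.utils.clip_grad_norm_`. A minimal sketch; `train_step`, `loss_fn`, `batch`, and the `max_norm` values are hypothetical placeholders, not the code used here, and skipping non-finite losses is an optional extra safeguard:

```python
import torch
from torch import nn


def train_step(model, optimizer, loss_fn, batch, max_norm=1.0):
    """Run one optimisation step with gradient-norm clipping.

    Returns the total gradient norm measured *before* clipping (useful to log),
    or None if the loss was NaN/inf and the update was skipped.
    """
    optimizer.zero_grad()
    loss = loss_fn(model, batch)
    if not torch.isfinite(loss):
        return None  # skip this batch instead of writing NaN into the weights
    loss.backward()
    # Rescale all gradients together so their global L2 norm is at most max_norm.
    total_norm = torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm)
    optimizer.step()
    return total_norm.item()


# Toy usage: a linear model and an MSE loss stand in for the real network and loss.
torch.manual_seed(0)
model = nn.Linear(3, 1)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)


def mse_loss(m, b):
    return nn.functional.mse_loss(m(b[0]), b[1])


batch = (torch.randn(8, 3), torch.randn(8, 1))
print(train_step(model, optimizer, mse_loss, batch, max_norm=1e-3))
```

Logging the returned norm over training shows whether clipping is active on most steps; if it is, the learning rate or the loss weights may still be too large.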

But I still have one problem: the loss reaches a certain value, which is still high, and stops decreasing. The original baseline achieves a much lower loss. The current network architecture is much better, but with the unsupervised losses it does not give good results.

Is there anything that could help me solve this?

Thank you so much in advance.
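One thing worth trying against a plateau, in line with the learning-rate-scheduler tip in the answer above, is lowering the learning rate when the loss stops improving. A minimal sketch with PyTorch's `torch.optim.lr_scheduler.ReduceLROnPlateau`; the `nn.Linear` stand-in model, the simulated loss values, `factor=0.5`, and `patience=2` are illustrative assumptions, and a scheduler will not fix a plateau caused by the loss design itself:

```python
import torch
from torch import nn

model = nn.Linear(3, 1)  # stand-in for the stereo network
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

# Multiply the learning rate by `factor` once the monitored loss has failed
# to improve for more than `patience` consecutive epochs.
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="min", factor=0.5, patience=2
)

# Simulated per-epoch validation losses that flatten out after epoch 1.
for epoch, val_loss in enumerate([1.0, 0.9, 0.9, 0.9, 0.9]):
    # ... the training pass for this epoch would go here ...
    scheduler.step(val_loss)
    print(epoch, optimizer.param_groups[0]["lr"])
```

Here the learning rate stays at 1e-4 while the loss improves and is halved once it has stayed flat for more than two epochs.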