Hi all,

I am trying to train an autoencoder model that consists of two sub-encoders and one generic decoder shared between them (not a concatenation of the encoder outputs!).

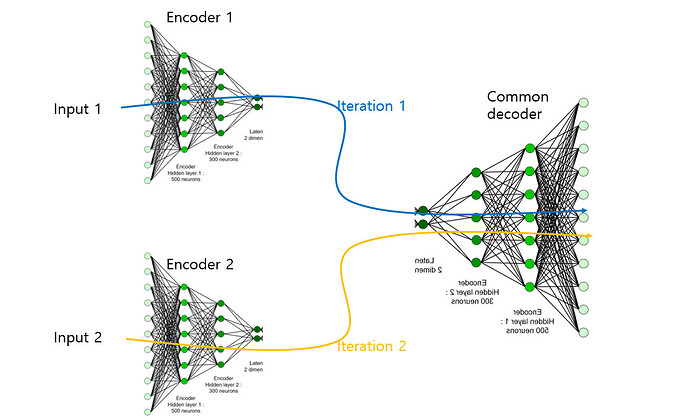

Please see the attached figure.
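For reference, the architecture in the figure can be sketched as a single `nn.Module`. This is a minimal sketch assuming the `Linear(10, 4)` encoders and `Linear(4, 10)` decoder from the code in this post; the class name `TwoEncoderAE` and the `branch` argument are hypothetical. Both encoders map into the same 4-dimensional latent space, and there is only one decoder instance, so its weights are shared rather than copied.

```python
import torch
import torch.nn as nn


class TwoEncoderAE(nn.Module):
    """Two encoders feeding one shared decoder (hypothetical name)."""

    def __init__(self, in_features: int = 10, latent: int = 4) -> None:
        super().__init__()
        self.encoder1 = nn.Linear(in_features, latent)
        self.encoder2 = nn.Linear(in_features, latent)
        # A single decoder instance: both branches reuse the same weights.
        self.decoder = nn.Linear(latent, in_features)

    def forward(self, x: torch.Tensor, branch: int) -> torch.Tensor:
        # Pick the encoder for this input source, then decode with the shared decoder.
        encoder = self.encoder1 if branch == 1 else self.encoder2
        return self.decoder(encoder(x))


model = TwoEncoderAE()
x = torch.randn(8, 10)
out1 = model(x, branch=1)
out2 = model(x, branch=2)
print(out1.shape, out2.shape)  # torch.Size([8, 10]) torch.Size([8, 10])
```

Because the decoder is registered once, `model.parameters()` lists its weight and bias only once, which is convenient when building an optimizer.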

Would the code below work? If not, please advise me on how to train this.

```python
import torch
import torch.nn as nn

# Assumed to be defined elsewhere: device, learning_rate, epochs, train_loader, criterion

# Define individual modules
encoder1 = nn.Linear(10, 4).to(device)
encoder2 = nn.Linear(10, 4).to(device)
decoder = nn.Linear(4, 10).to(device)  # the same instance is used by both models

# Define each model
model1 = nn.Sequential(encoder1, decoder)
model2 = nn.Sequential(encoder2, decoder)

# Optimizers (note: both of them contain the decoder's parameters)
optim_1 = torch.optim.Adam(model1.parameters(), lr=learning_rate)
optim_2 = torch.optim.Adam(model2.parameters(), lr=learning_rate)

# Train
for epoch in range(epochs):
    model1.train()
    model2.train()
    for i, data in enumerate(train_loader):
        # Assumption: each batch yields (input1, output1, input2, output2)
        input1, output1, input2, output2 = data

        model1.zero_grad()
        pred = model1(input1)
        loss_1 = criterion(pred, output1)
        loss_1.backward()
        optim_1.step()  # apply the update before model2.zero_grad() clears the decoder's gradients

        model2.zero_grad()
        pred = model2(input2)
        loss_2 = criterion(pred, output2)
        loss_2.backward()
        optim_2.step()
```
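For comparison, here is a minimal sketch of a joint training loop, assuming each batch provides one input per encoder and that the reconstruction target is the input itself (a plain autoencoder). It uses one optimizer over the parameters of both encoders and the decoder, sums the two losses, and calls `backward()` once, so the shared decoder receives the sum of both branches' gradients and is updated once per step with a single Adam state. The fixed random tensors `input1`/`input2` and the `lr=1e-2` value are hypothetical stand-ins for `train_loader` and `learning_rate`.

```python
import itertools

import torch
import torch.nn as nn

torch.manual_seed(0)
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

encoder1 = nn.Linear(10, 4).to(device)
encoder2 = nn.Linear(10, 4).to(device)
decoder = nn.Linear(4, 10).to(device)  # shared by both branches

# One optimizer over every parameter; the decoder appears only once.
params = itertools.chain(encoder1.parameters(), encoder2.parameters(), decoder.parameters())
optimizer = torch.optim.Adam(params, lr=1e-2)
criterion = nn.MSELoss()

# Hypothetical stand-in for train_loader: fixed random data, one tensor per encoder.
input1 = torch.randn(64, 10, device=device)
input2 = torch.randn(64, 10, device=device)

losses = []
for epoch in range(200):
    optimizer.zero_grad()
    # Autoencoder: each branch reconstructs its own input.
    loss_1 = criterion(decoder(encoder1(input1)), input1)
    loss_2 = criterion(decoder(encoder2(input2)), input2)
    loss = loss_1 + loss_2  # decoder gradient = sum of both branches' contributions
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

If one branch should matter more than the other, scaling `loss_1` and `loss_2` by weights before adding them is a simple way to express that.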