I use a simple classification CNN to perform multiple binary classification tasks. The output shape of my model is [10, 2]. Which loss should I use?

Here, is 10 the number of binary classification tasks?

If it is :

Since it is bi-classification, you could instead get an output shape of [10, 1] and use nn.BCEWithLogitsLoss. It combines Sigmoid + BinaryCrossEntropy (so do not use a sigmoid activation just before that).

For the multiple classification aspect, I suppose your output shape is [batch_size, 10, 1] and your label shape is also [batch_size, 10, 1], filled with ones and zeros (as floats). By default the loss function averages over every element, i.e. over both the batch and the 10 different tasks, which is probably what you want.
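As a rough sketch of the setup described above: the batch size, the random logits, and the random labels below are placeholders rather than anything from the original model, and the trailing dimension of size 1 is dropped for brevity (the loss only needs the output and the target to have the same shape).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

batch_size, num_tasks = 4, 10  # hypothetical sizes

# Raw scores (logits) as they would come out of the last linear layer.
# No sigmoid is applied here: BCEWithLogitsLoss applies it internally.
logits = torch.randn(batch_size, num_tasks)

# One 0/1 label per task. The targets must be floats with the same shape as the logits.
targets = torch.randint(0, 2, (batch_size, num_tasks)).float()

criterion = nn.BCEWithLogitsLoss()  # reduction="mean" by default
loss = criterion(logits, targets)   # scalar, averaged over batch and tasks

# Same value computed with an explicit sigmoid followed by BCELoss
# (shown only for comparison; the fused version is more numerically stable).
manual = nn.BCELoss()(torch.sigmoid(logits), targets)

# If a separate loss per task is wanted, disable the reduction and
# average over the batch dimension only.
per_task = nn.BCEWithLogitsLoss(reduction="none")(logits, targets).mean(dim=0)

print(loss.item(), manual.item(), per_task.shape)
```

In a real training loop, logits would be the model output and loss.backward() would follow as usual.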

Thank you! Is it better than MSE?

Yes I’ve always heard that for classification it was better than MSE, but I have nothing to back it up.

If I’m not mistaken, for a classification task using nn.MSELoss the gradient is scaled down the closer the output gets to the target (and, with a sigmoid output, the closer the sigmoid gets to saturation), which might slow down the training towards the end.
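To make this concrete, here is a small numeric sketch in plain Python. It assumes a single sigmoid output, a target of 1, and MSE written as 0.5 * (a - y)^2; the function names are made up for illustration. It compares the gradient with respect to the pre-activation z under both losses.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_mse(z, y):
    # d/dz of 0.5 * (a - y)^2 with a = sigmoid(z):
    # the error (a - y) times the sigmoid slope a * (1 - a).
    a = sigmoid(z)
    return (a - y) * a * (1.0 - a)

def grad_bce(z, y):
    # d/dz of binary cross-entropy with a = sigmoid(z):
    # the sigmoid slope cancels, leaving only the error.
    a = sigmoid(z)
    return a - y

y = 1.0
for z in (-6.0, -2.0, 0.0, 2.0, 6.0):
    print(f"z={z:+.1f}  a={sigmoid(z):.4f}  "
          f"dMSE/dz={grad_mse(z, y):+.5f}  dBCE/dz={grad_bce(z, y):+.5f}")
```

Under these assumptions the MSE gradient carries an extra a * (1 - a) factor, so it is small not only near the target but also when the output is confidently wrong (large negative z here), whereas the cross-entropy gradient stays proportional to the error.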

@rasbt derives the formula here and you can find some additional information in this blog post (at the end).

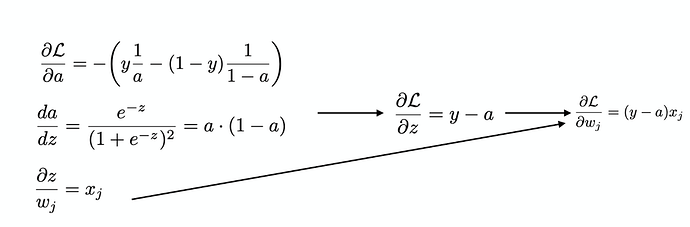

Both are fine. Empirically, the cross tends to result in better performance of the resulting models though. Also, the the derivative/gradient calculation plays nicely with sigmoid or softmax in the last layer, i.e., it simplifies as follows (where “a” is the sigmoid activation, y is the true label, and z is the weighted input w^T x):
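For readers who cannot view the image, the simplification mentioned above can be written out as follows. This is the standard textbook derivation for a single sigmoid output in the same notation (a, y, z), and is not necessarily identical to what the image shows.

$$
\begin{aligned}
a &= \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad \frac{\partial a}{\partial z} = a(1 - a) \\
L &= -\bigl[\, y \log a + (1 - y) \log (1 - a) \,\bigr] \\
\frac{\partial L}{\partial a} &= -\frac{y}{a} + \frac{1 - y}{1 - a} = \frac{a - y}{a(1 - a)} \\
\frac{\partial L}{\partial z} &= \frac{\partial L}{\partial a} \cdot \frac{\partial a}{\partial z} = \frac{a - y}{a(1 - a)} \cdot a(1 - a) = a - y
\end{aligned}
$$

For comparison, with a squared error $L = \tfrac{1}{2}(a - y)^2$ the same chain rule gives $\partial L / \partial z = (a - y)\, a(1 - a)$, so the sigmoid slope does not cancel.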

Thanks for the info!