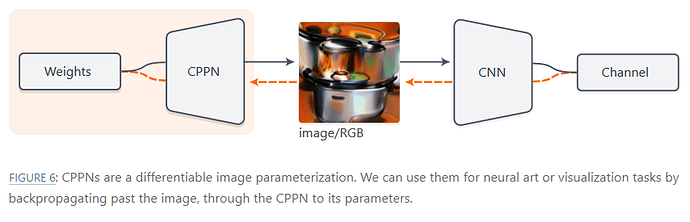

According to this post using TensorFlow (code here), you can use a simple Compositional Pattern Producing Network (CPPN) to parameterize the input image of a classification network. Because the image is generated by the CPPN, you optimize the CPPN's weights instead of the raw pixels, which produces really smooth-looking visualizations of neuron activations.
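Here is a minimal PyTorch sketch of what "using a CPPN as the input" means. The network maps every (x, y) pixel coordinate to an RGB color, so the whole image is a differentiable function of the network's weights. The `TinyCPPN` class, its layer sizes, and the [-1, 1] coordinate range are assumptions for illustration. They are not taken from the linked post.

```python
import torch
import torch.nn as nn

# Hypothetical minimal CPPN: maps each (x, y) pixel coordinate to an RGB color.
class TinyCPPN(nn.Module):
    def __init__(self, hidden=16, layers=4):
        super().__init__()
        blocks = [nn.Linear(2, hidden), nn.Tanh()]
        for _ in range(layers - 1):
            blocks += [nn.Linear(hidden, hidden), nn.Tanh()]
        blocks += [nn.Linear(hidden, 3), nn.Sigmoid()]  # colors in [0, 1]
        self.net = nn.Sequential(*blocks)

    def forward(self, height, width):
        # Fixed coordinate grid in [-1, 1] x [-1, 1]; it needs no gradient.
        ys = torch.linspace(-1.0, 1.0, height)
        xs = torch.linspace(-1.0, 1.0, width)
        grid_y, grid_x = torch.meshgrid(ys, xs, indexing="ij")
        coords = torch.stack((grid_x, grid_y), dim=-1).reshape(-1, 2)  # (H*W, 2)
        rgb = self.net(coords)                                        # (H*W, 3)
        # Reshape to the (N, C, H, W) layout an image classifier expects.
        return rgb.reshape(height, width, 3).permute(2, 0, 1).unsqueeze(0)

cppn = TinyCPPN()
image = cppn(64, 48)
print(image.shape)  # torch.Size([1, 3, 64, 48])

# Any loss computed on the image sends gradients back into cppn.parameters().
image.mean().backward()
print(all(p.grad is not None for p in cppn.parameters()))  # True
```

The coordinate grid stays constant. Only the weights change during optimization, and that is why the CPPN's parameters, not an image tensor, are what an optimizer should hold.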

I tried using the following in PyTorch, and while the loss seems to go down, nothing else changes:

```python
optimizer = optim.Adam([
    {'params': cppn_net.parameters()}], lr=learning_rate)  # Replaced image tensor
```
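With this optimizer, the image exists only as the CPPN's output. Each step has to call the network again, and any image that is saved or displayed must also be re-rendered from the current weights. A tensor rendered once reflects only the weights at the time it was rendered. The sketch below illustrates that loop under some assumptions. The three-layer CPPN, the single random `Conv2d` standing in for the classification network, the target `channel`, the learning rate, and the step count are all hypothetical, chosen only so the example runs on its own.

```python
import torch
import torch.nn as nn
import torch.optim as optim

torch.manual_seed(0)

# Hypothetical stand-ins: a small CPPN and a frozen "classifier" made of one
# random conv layer. A real setup would use a pretrained network in eval mode.
cppn = nn.Sequential(
    nn.Linear(2, 16), nn.Tanh(),
    nn.Linear(16, 16), nn.Tanh(),
    nn.Linear(16, 3), nn.Sigmoid(),
)
classifier = nn.Conv2d(3, 8, kernel_size=3, padding=1)
for p in classifier.parameters():
    p.requires_grad_(False)  # only the CPPN weights should be updated

H = W = 32
grid_y, grid_x = torch.meshgrid(
    torch.linspace(-1.0, 1.0, H), torch.linspace(-1.0, 1.0, W), indexing="ij"
)
coords = torch.stack((grid_x, grid_y), dim=-1).reshape(-1, 2)  # fixed input, no grad needed
channel = 0  # hypothetical target channel

def render():
    # Run the CPPN on the coordinate grid; returns a (1, 3, H, W) image.
    return cppn(coords).reshape(H, W, 3).permute(2, 0, 1).unsqueeze(0)

def objective(image):
    # Negative mean activation of one channel: minimizing it maximizes the activation.
    return -classifier(image)[0, channel].mean()

# As in the snippet above, the optimizer holds only the CPPN parameters.
optimizer = optim.Adam(cppn.parameters(), lr=0.01)

with torch.no_grad():
    start_image = render()
    initial_loss = objective(start_image).item()

for step in range(200):
    optimizer.zero_grad()
    loss = objective(render())  # re-render from the current weights every step
    loss.backward()
    optimizer.step()

with torch.no_grad():
    final_image = render()  # re-render before saving or displaying
    final_loss = objective(final_image).item()

print(initial_loss, final_loss)
print((final_image - start_image).abs().max().item())
```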

This is what cppn_net looks like:

```python
import torch
import torch.nn as nn

def cppn_normal(l):
    if type(l) == nn.Linear:
        nn.init.normal_(l.weight)

# Regular CPPN
class CPPN(nn.Module):
    def __init__(self, size, input_range=1, num_channels=16, num_layers=6, activ_func=nn.Tanh()):
        super(CPPN, self).__init__()
        if type(size) is not tuple:
            size = (size, size)
        self.input_size = size
        self.input_range = input_range

        self.net = nn.Sequential()
        self.net.add_module(str(len(self.net)), nn.Linear(2, num_channels, bias=True))
        self.net.add_module(str(len(self.net)), activ_func)
        for l in range(num_layers - 1):
            self.net.add_module(str(len(self.net)), nn.Linear(num_channels, num_channels, bias=False))
            self.net.add_module(str(len(self.net)), activ_func)
        self.net.add_module(str(len(self.net)), nn.Linear(num_channels, 3, bias=False))
        self.net.add_module(str(len(self.net)), nn.Sigmoid())

    def create_input(self):
        w = torch.arange(0, self.input_size[1])
        h = torch.arange(0, self.input_size[0])
        w_exp = w.unsqueeze(1).expand((self.input_size[1], self.input_size[0])).true_divide(self.input_size[0]) - 0.5
        h_exp = h.unsqueeze(0).expand((self.input_size[1], self.input_size[0])).true_divide(self.input_size[1]) - 0.5
        return torch.stack((w_exp, h_exp), -1).reshape(self.input_size[1] * self.input_size[0], 2)

    def forward(self):
        input = self.create_input()
        input.requires_grad = True
        return (self.net(input).reshape(self.input_size[1], self.input_size[0], 3).permute(2, 1, 0) * self.input_range).unsqueeze(0)

cppn_net = CPPN(size=(512, 512)).apply(cppn_normal)
```
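`cppn_normal` draws every `Linear` weight from a standard normal (std = 1). Saturation may therefore be worth ruling out, although this is a hedged diagnostic and not a confirmed cause. When most `tanh` outputs sit near ±1 and the final sigmoid sits near 0 or 1, gradients through those layers are tiny and the rendered image can barely move. The sketch rebuilds the same layer layout as `CPPN` on a smaller 128×128 grid that uses `create_input`'s coordinate range of [-0.5, 0.5). It then compares the std = 1 initialization against a `1/sqrt(fan_in)` scaling. The helpers `build_cppn` and `saturation_report`, the 0.99 threshold, and the alternative scaling are all assumptions for illustration.

```python
import math
import torch
import torch.nn as nn

torch.manual_seed(0)

def build_cppn(num_channels=16, num_layers=6):
    # Hypothetical helper with the same layer layout as the CPPN class above.
    layers = [nn.Linear(2, num_channels, bias=True), nn.Tanh()]
    for _ in range(num_layers - 1):
        layers += [nn.Linear(num_channels, num_channels, bias=False), nn.Tanh()]
    layers += [nn.Linear(num_channels, 3, bias=False), nn.Sigmoid()]
    return nn.Sequential(*layers)

def saturation_report(net, coords, threshold=0.99):
    # For each tanh layer: fraction of outputs with |y| > threshold.
    # For the final sigmoid: fraction of outputs above threshold or below 1 - threshold.
    fractions = []
    x = coords
    with torch.no_grad():
        for layer in net:
            x = layer(x)
            if isinstance(layer, nn.Tanh):
                fractions.append((x.abs() > threshold).float().mean().item())
            elif isinstance(layer, nn.Sigmoid):
                saturated = (x > threshold) | (x < 1 - threshold)
                fractions.append(saturated.float().mean().item())
    return fractions

# Coordinates in [-0.5, 0.5), matching create_input, on a 128x128 grid.
N = 128
idx = torch.arange(N, dtype=torch.float32) / N - 0.5
grid_w, grid_h = torch.meshgrid(idx, idx, indexing="ij")
coords = torch.stack((grid_w, grid_h), dim=-1).reshape(-1, 2)

std_one = build_cppn()
scaled = build_cppn()
for m in std_one:
    if isinstance(m, nn.Linear):
        nn.init.normal_(m.weight)  # std = 1, as in cppn_normal
for m in scaled:
    if isinstance(m, nn.Linear):
        nn.init.normal_(m.weight, std=1.0 / math.sqrt(m.in_features))  # hypothetical alternative

print("std = 1:       ", [round(f, 2) for f in saturation_report(std_one, coords)])
print("1/sqrt(fan_in):", [round(f, 2) for f in saturation_report(scaled, coords)])
```

Each list has one entry per `tanh` layer, followed by one entry for the sigmoid. If the first list is mostly close to 1 and the second is not, the initialization is a plausible suspect. If both lists look similar, the problem is more likely elsewhere.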

Do I need to set up the CPPN network differently, change how I’m integrating it with the main network, or do something else entirely?