Could you please describe the issue in detail and, ideally, post a minimal, executable code snippet that reproduces the error, so that we can debug it directly?

You can post code snippets by wrapping them into three backticks ```.

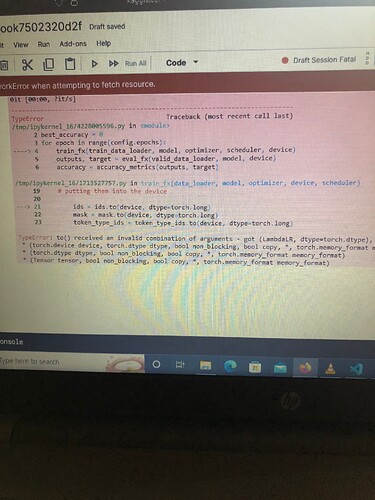

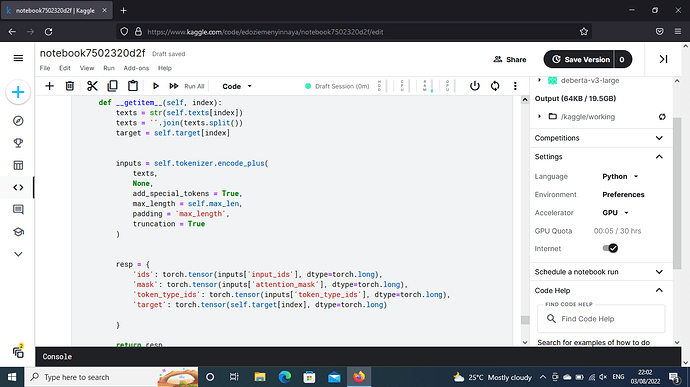

I tried training the model using Debertav3large, and the error was that CUDA ran out of memory. I reduced the batch size and also changed some other parameters, but I still get the same error. What else can I do?

Based on your screenshot, it seems you are passing a `LambdaLR` object as the device argument to the `to()` operation, and your topic also mentions issues on the CPU?
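A minimal sketch of how the two objects are normally kept apart, assuming a standard PyTorch training setup: `.to()` receives a `torch.device`, while the `LambdaLR` scheduler only wraps the optimizer and is stepped after each optimizer update. The `nn.Linear` model, the learning rate, and the `lr_lambda` decay below are hypothetical placeholders, not the code from the screenshot.

```python
import torch
from torch import nn
from torch.optim.lr_scheduler import LambdaLR

# Pick the device once and reuse it; fall back to the CPU if no GPU is visible.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical stand-in for the real model (e.g. a DeBERTa classifier).
model = nn.Linear(16, 2).to(device)  # .to() receives a device, never a scheduler

optimizer = torch.optim.AdamW(model.parameters(), lr=2e-5)
# The scheduler wraps the optimizer only; it is not passed to .to().
scheduler = LambdaLR(optimizer, lr_lambda=lambda step: 1.0 / (step + 1))

x = torch.randn(4, 16, device=device)
loss = model(x).sum()
loss.backward()
optimizer.step()
scheduler.step()  # advance the learning-rate schedule after the optimizer step
optimizer.zero_grad()
```

After one `scheduler.step()`, this hypothetical schedule halves the learning rate from `2e-5` to `1e-5`.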

Could you clarify the issue a bit more, please?

Can I send in a screenshot?

No, please don’t post screenshots, as they are not helpful.

Describe your issue in words and post code snippets by wrapping them into three backticks ``` as previously mentioned.

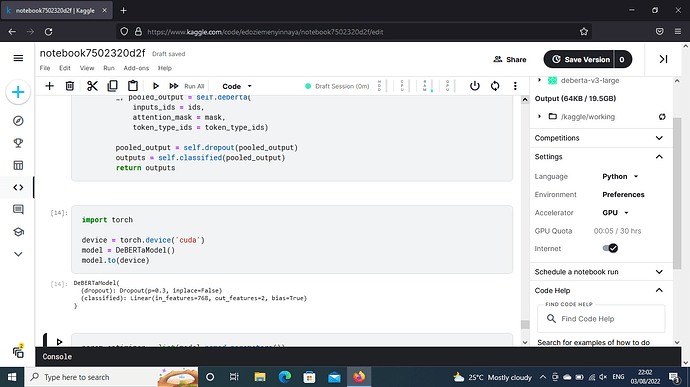

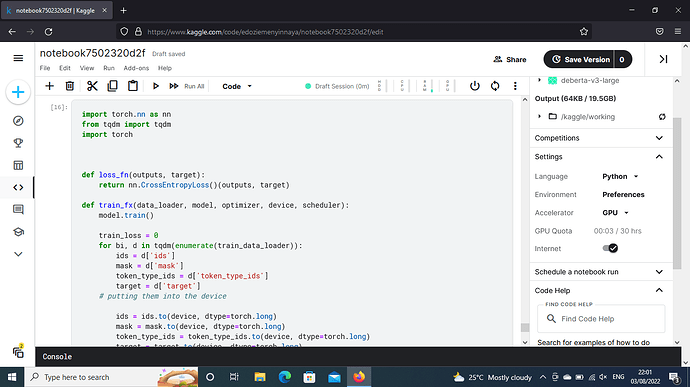

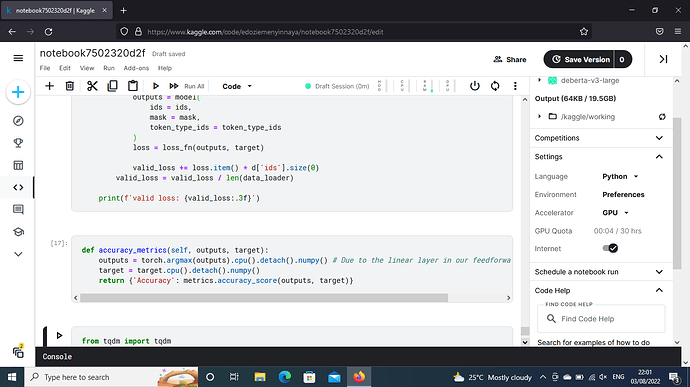

I noticed that I hadn’t passed the model I’m using (debertav2large) into the `__init__` function when declaring the variables, and it worked afterwards. The remaining issue I ran into when training my model is that CUDA ran out of memory. That’s where I’m stuck, so I need assistance there.
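Before changing more parameters, it can help to see how much GPU memory is actually in use. A small sketch using PyTorch's CUDA memory statistics; the helper name `cuda_memory_report` is hypothetical, and it returns `None` when no GPU is visible (e.g. a CPU-only Kaggle session).

```python
import torch

def cuda_memory_report(device_index=0):
    """Return current, cached, peak, and total GPU memory in GiB, or None without a GPU."""
    if not torch.cuda.is_available():
        return None
    gib = 1024 ** 3
    return {
        # Memory currently occupied by tensors.
        "allocated": torch.cuda.memory_allocated(device_index) / gib,
        # Memory held by PyTorch's caching allocator (includes free cached blocks).
        "reserved": torch.cuda.memory_reserved(device_index) / gib,
        # Highest tensor allocation seen so far, e.g. during a forward/backward pass.
        "peak_allocated": torch.cuda.max_memory_allocated(device_index) / gib,
        # Physical memory of the card.
        "total": torch.cuda.get_device_properties(device_index).total_memory / gib,
    }

print(cuda_memory_report())
```

Calling it right after moving the model to the GPU, and again after the first training step, shows whether the weights alone or the activations of a batch are what exhausts the memory.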

Make sure that all your tensors and the model are on the same device. Also make sure that your batches of data fit into the GPU memory.
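One common way to keep every input on the model's device is to move each batch right before the forward pass. A sketch, assuming batches come from a `DataLoader`; the recursive `to_device` helper, the toy `nn.Linear` model, and the random `TensorDataset` are hypothetical stand-ins. The helper also handles dict batches, which is the shape tokenizer outputs usually have.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

def to_device(batch, device):
    """Move every tensor in a (possibly nested) dict/list/tuple batch to `device`."""
    if isinstance(batch, torch.Tensor):
        return batch.to(device)
    if isinstance(batch, dict):
        return {key: to_device(value, device) for key, value in batch.items()}
    if isinstance(batch, (list, tuple)):
        return type(batch)(to_device(value, device) for value in batch)
    return batch  # non-tensor values (e.g. strings) are left untouched

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(16, 2).to(device)  # toy stand-in for the real model
loss_fn = nn.CrossEntropyLoss()

# Toy data standing in for the tokenized dataset; batches are built on the CPU.
dataset = TensorDataset(torch.randn(32, 16), torch.randint(0, 2, (32,)))
loader = DataLoader(dataset, batch_size=8)

for batch in loader:
    inputs, labels = to_device(batch, device)  # now on the same device as the model
    loss = loss_fn(model(inputs), labels)
```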

I think the model is too large for the single GPU available on Kaggle.
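Whether a model fits on one GPU can be roughly checked before training. A back-of-the-envelope sketch, assuming full fp32 training with AdamW: each weight takes 4 bytes, and each trainable weight additionally needs a 4-byte gradient and two 4-byte optimizer moment buffers, i.e. 16 bytes per trainable parameter. Activations, the CUDA context, and framework overhead are not included, so the real requirement is higher. The function name is hypothetical.

```python
from torch import nn

def estimate_training_memory_bytes(model):
    """Rough lower bound on GPU memory for fp32 training with AdamW (no activations)."""
    total = sum(p.numel() for p in model.parameters())
    trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
    # 4 bytes per fp32 weight, plus a 4-byte gradient and two 4-byte
    # AdamW moment buffers (exp_avg, exp_avg_sq) per trainable weight.
    return 4 * total + (4 + 8) * trainable

toy = nn.Linear(16, 2)  # 16 * 2 weights + 2 biases = 34 parameters
print(estimate_training_memory_bytes(toy))  # 34 * 16 = 544
```

For a hypothetical 400-million-parameter model, this gives 400e6 × 16 = 6.4e9 bytes (about 6.4 GB) before a single activation is stored, which is why large models leave little room for the batch on a single card.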

You could try a smaller batch size.
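Instead of guessing, the batch size can be lowered automatically until one training step fits. A sketch, assuming a hypothetical `try_step(batch_size)` callable that runs one forward/backward pass; in recent PyTorch versions `torch.cuda.OutOfMemoryError` is a subclass of `RuntimeError`, so catching `RuntimeError` and checking the message covers it while re-raising unrelated errors.

```python
import torch

def find_max_batch_size(try_step, start=16, minimum=1):
    """Halve the batch size until `try_step(batch_size)` runs without a CUDA OOM."""
    batch_size = start
    while batch_size >= minimum:
        try:
            try_step(batch_size)
            return batch_size
        except RuntimeError as err:
            if "out of memory" not in str(err):
                raise  # a different error: do not hide it
        # Clean up outside the except block so the exception's traceback
        # no longer keeps the failed step's tensors alive.
        if torch.cuda.is_available():
            torch.cuda.empty_cache()
        batch_size //= 2
    raise RuntimeError("even the minimum batch size does not fit in memory")
```

Usage would look like `find_max_batch_size(lambda bs: train_one_step(bs), start=16)`, where `train_one_step` is a hypothetical function wrapping one step of the real training loop.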

Thanks, maybe I’ll try smaller models too.

Maybe Kaggle is inefficient for running this model on the CPU, since it’s a very large model. You could use the GPU instead, because it computes the values in parallel, or use a smaller batch size; it totally depends on you.

I used the GPU for this one, and my batch sizes for training and validation are 16 and 8, respectively. So I don’t know whether I should lower them or try something else?
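If lowering the batch size hurts training, one option not mentioned earlier in the thread is gradient accumulation: run several small micro-batches, let their gradients add up, and call `optimizer.step()` once. A minimal sketch, assuming a mean-reduced loss and a batch that splits into equal-sized chunks; the `accumulated_step` function, toy model, and random data are hypothetical.

```python
import torch
from torch import nn

def accumulated_step(model, optimizer, loss_fn, inputs, labels, accumulation_steps):
    """One optimizer update over `inputs`, processed as smaller micro-batches.

    Assumes `loss_fn` averages over the batch and that `inputs` splits into
    `accumulation_steps` equal-sized chunks.
    """
    optimizer.zero_grad()
    for x, y in zip(inputs.chunk(accumulation_steps), labels.chunk(accumulation_steps)):
        # Scale each micro-batch loss so the summed gradients match the
        # gradient of the mean loss over the whole batch.
        loss = loss_fn(model(x), y) / accumulation_steps
        loss.backward()  # gradients accumulate in .grad between backward calls
    optimizer.step()

torch.manual_seed(0)
model = nn.Linear(16, 2)  # toy stand-in for the real model
optimizer = torch.optim.AdamW(model.parameters(), lr=2e-5)
inputs, labels = torch.randn(16, 16), torch.randint(0, 2, (16,))

# Effective batch size 16, but only 4 samples go through the model at a time.
accumulated_step(model, optimizer, nn.CrossEntropyLoss(), inputs, labels, accumulation_steps=4)
```

In a real loop, the `DataLoader` would yield the micro-batches of 4 directly and `optimizer.step()` would run every 4 batches, so only one micro-batch's activations are held in GPU memory at once. With dropout active, the result is equivalent in expectation rather than bit-for-bit.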