```python
loss = F.binary_cross_entropy(same_mask, same_mask, reduction='mean')
```

I got `tensor(0.4984)`, but I expected it to be zero.

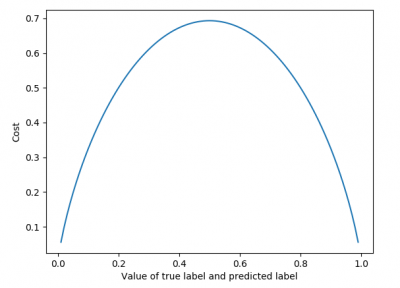

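Binary cross entropy is never zero unless both inputs are 0 or both are 1 (or very close to either). For a target y and a prediction p, binary cross entropy (i.e. the negative log likelihood) is `-[y * log(p) + (1 - y) * log(1 - p)]`. When p = y, this equals the binary entropy of y, which is positive for every y strictly between 0 and 1. I made a plot for pairs of inputs where the prediction equals the target to illustrate this: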

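```python
import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn.functional as F

fig = plt.figure()

def bce(y_pred, y_true):
    # Wrap the scalars in 1-element tensors so F.binary_cross_entropy accepts them
    y_true_v = torch.tensor([y_true])
    y_pred_v = torch.tensor([y_pred])
    # reduction='sum' replaces the deprecated size_average=False
    return F.binary_cross_entropy(y_pred_v, y_true_v, reduction='sum').item()

# Use the same value as both prediction and target, from 0.01 to 0.99
x_axis_values = np.arange(0.01, 1.0, 0.01)
y = [bce(a, b) for a, b in zip(x_axis_values, x_axis_values)]

print(np.mean(y))

plt.plot(x_axis_values, y)
plt.ylabel('Cost')
plt.xlabel('Value of true label and predicted label')
plt.show()
```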
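A quick numerical check of the explanation above, as a sketch. It assumes `same_mask` held values drawn uniformly from [0, 1), which the post does not say. When prediction and target are the same value p, BCE reduces to the binary entropy `H(p) = -[p * log(p) + (1 - p) * log(1 - p)]`. Since the integral of `-p * ln(p)` over [0, 1] is 1/4, and the `(1 - p)` term contributes the same amount, the expected loss for uniform entries is 1/2 nat, which would be consistent with the reported 0.4984. The snippet computes the loss both ways and also shows that hard 0/1 targets give exactly zero:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical stand-in for same_mask: uniform values in [0, 1)
# (the original post does not say how same_mask was created)
same_mask = torch.rand(100_000, dtype=torch.float64)

# BCE of a tensor with itself, averaged over all elements
loss = F.binary_cross_entropy(same_mask, same_mask, reduction='mean')

# Binary entropy computed by hand; xlogy(x, y) = x * log(y) and is 0 when x == 0
p = same_mask
entropy = -(torch.xlogy(p, p) + torch.xlogy(1 - p, 1 - p))

print(loss.item())            # close to 0.5 for uniform inputs
print(entropy.mean().item())  # matches the loss above

# With hard 0/1 values the loss of a tensor with itself is zero
hard = torch.tensor([0.0, 1.0, 1.0, 0.0])
hard_loss = F.binary_cross_entropy(hard, hard)
print(hard_loss.item())
```

So a non-zero value here does not indicate a bug; it is the entropy of the soft targets themselves.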

May I ask why you use the functional version instead of the `nn` module version?

There is nothing wrong with that, really. I use the functional versions all the time for things that don’t store state or parameters. In this case, both would also produce exactly the same results.
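To illustrate that the two forms agree, here is a small sketch comparing `F.binary_cross_entropy` with `nn.BCELoss` on the same random (purely illustrative) inputs. `nn.BCELoss` only stores its settings (here `reduction`, plus an optional `weight`) and calls the functional op in its `forward()`, so the results match exactly:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

pred = torch.rand(8)    # illustrative predicted probabilities in [0, 1)
target = torch.rand(8)  # illustrative soft targets in [0, 1)

# Functional form: a plain function call, nothing is stored
loss_functional = F.binary_cross_entropy(pred, target, reduction='mean')

# Module form: the criterion object keeps the reduction setting
criterion = nn.BCELoss(reduction='mean')
loss_module = criterion(pred, target)

print(loss_functional.item(), loss_module.item())
print(torch.equal(loss_functional, loss_module))
```

The module form is mainly a convenience when you want to configure the loss once and pass it around, e.g. as an attribute of a training class.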