Hi.

I have been working on a vanilla Siamese network. Given an input image, each training step works as follows:

- Generate two different transformations of that same image

- Forward pass both images through the network

- Invert the transformations

- Get the mean of both outputs and compute the loss.

The code looks like this:

output_1 = model(img_1)[0]

output_2 = model(img_2)[0]

# Undo transformations

output_reset_1 = F_vision.affine(output_1, **inverse_params_1)

output_reset_2 = F_vision.affine(output_2, **inverse_params_2)

# Average both outputs

final_output = (output_reset_1 + output_reset_2)/2

# Compute loss with respect to ground truth heatmaps

loss = criterion(final_output, heatmap_self.float())
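One thing that could be checked in the inversion step is whether the inverse parameters really map each output back onto the original image grid. The snippet below is a pure-Python toy, not the actual pipeline. It assumes the transformation is a rotation about the image center followed by a translation, and that the inverse is built by simply negating the angle and the translation. The helper names (`affine`, `negated_params`, `exact_inverse`, `round_trip_error`) are made up for illustration and are not torchvision functions. Under those assumptions, negating the parameters only undoes the transform when either the rotation or the translation is zero. Otherwise the two outputs end up misaligned with each other and with the ground truth heatmap.

```python
import math

def rotate(point, angle_deg):
    """Rotate a 2-D point about the origin (standing in for the image center)."""
    a = math.radians(angle_deg)
    x, y = point
    return (x * math.cos(a) - y * math.sin(a), x * math.sin(a) + y * math.cos(a))

def affine(point, angle, translate):
    """Assumed composition order: rotate about the center, then translate."""
    x, y = rotate(point, angle)
    return (x + translate[0], y + translate[1])

def negated_params(point, angle, translate):
    """Hypothetical 'inverse': the same call with negated angle and translation."""
    return affine(point, -angle, (-translate[0], -translate[1]))

def exact_inverse(point, angle, translate):
    """True inverse of affine(): remove the translation first, then rotate back."""
    return rotate((point[0] - translate[0], point[1] - translate[1]), -angle)

def round_trip_error(point, angle, translate, inverse):
    """Distance between a point and its image after transform + attempted inverse."""
    moved = affine(point, angle, translate)
    restored = inverse(moved, angle, translate)
    return math.dist(point, restored)

keypoint = (10.0, 5.0)  # e.g. a heatmap peak, in center-relative coordinates
err_negated = round_trip_error(keypoint, 30.0, (4.0, -2.0), negated_params)
err_exact = round_trip_error(keypoint, 30.0, (4.0, -2.0), exact_inverse)
print(f"negated params: {err_negated:.3f} px, exact inverse: {err_exact:.3f} px")
```

The equivalent check on the real pipeline would be to apply the forward transform and then the inverse to the ground truth heatmap itself, and compare the result with the original heatmap.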

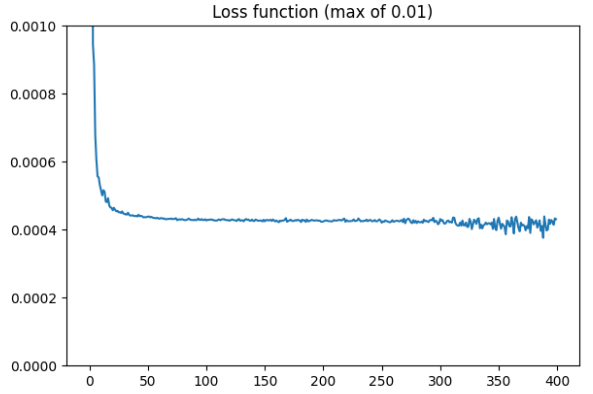

The plot above shows the resulting loss curve. As can be seen, it flattens out early and stays there.

I would highly appreciate any feedback or insights on what the problem may be.

NOTE: The same network trained without this vanilla ‘Siamese learning’ step reaches a much lower error in the same number of epochs.

Thanks!!