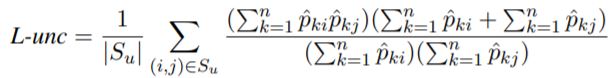

This is the loss function I want to implement:

```python
def corr_basic_calc(weights, y_pred):
    num_classes = len(weights[:,0])
    m_c = []
    sum_m_c = []
    for i in range(num_classes):
        m_c.append(y_pred[:,i])
        sum_m_c.append(torch.sum(m_c[i]))
    return num_classes, torch.tensor(m_c,dtype=torch.long), torch.tensor(sum_m_c,dtype=torch.long)
```
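The `ValueError` comes from `torch.tensor(m_c, ...)`: `m_c` is a Python list of 1-D tensors (one column of `y_pred` per class), and `torch.tensor` can only convert list elements that are plain numbers or one-element tensors. Below is a minimal sketch of the helper using tensor operations instead, assuming `y_pred` has shape `(batch, num_classes)` and `weights` has shape `(num_classes, 2)`. It also keeps the floating-point dtype, since casting to `torch.long` would truncate the predictions to integers and detach them from the autograd graph.

```python
import torch


def corr_basic_calc(weights: torch.Tensor, y_pred: torch.Tensor):
    """Vectorized variant of the helper (a sketch).

    Assumes y_pred has shape (batch, num_classes).
    """
    num_classes = weights.shape[0]
    # Row c of m_c is column c of y_pred, so m_c has shape (num_classes, batch).
    # Equivalent to torch.stack([y_pred[:, i] for i in range(num_classes)]).
    m_c = y_pred.t()
    # Per-class sum over the batch, shape (num_classes,), still differentiable.
    sum_m_c = y_pred.sum(dim=0)
    return num_classes, m_c, sum_m_c


# Made-up example values: batch of 2, 3 classes.
weights = torch.ones(3, 2)
y_pred = torch.tensor([[0.2, 0.5, 0.9],
                       [0.4, 0.1, 0.3]], requires_grad=True)
num_classes, m_c, sum_m_c = corr_basic_calc(weights, y_pred)
print(num_classes)  # 3
print(m_c.shape)    # torch.Size([3, 2])
print(sum_m_c)      # per-class sums, attached to the autograd graph
```

Indexing works as before: `m_c[c1]` is still the column for class `c1`, and `sum_m_c[c1]` is its scalar sum.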


```python
def loss_fn(outputs, targets, weights, uncorrelated_c_pairs):
    num_classes, m_c, sum_m_c = corr_basic_calc(weights, outputs)

    corr_loss = 0

    for uncorr_c_pair in uncorrelated_c_pairs:
        c1, c2, v = uncorr_c_pair
        corr_loss += torch.abs(torch.sum(m_c[c1]*m_c[c2])/sum_m_c[c1]-v)

    return torch.mean(
        ((weights[:,0]*(1-targets)) + (weights[:,1]*targets))
        * torch.nn.BCEWithLogitsLoss()(outputs, targets),
        axis=1,
    ) + 0.05*corr_loss/len(uncorrelated_c_pairs)
```
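A second issue in `loss_fn`: `torch.nn.BCEWithLogitsLoss()` uses `reduction='mean'` by default, so it returns a single scalar and the per-class `weights` end up scaling an already-averaged value. The sketch below computes the element-wise BCE first. It assumes `outputs` and `targets` are float tensors of shape `(batch, num_classes)`, `weights` has shape `(num_classes, 2)`, and `uncorrelated_c_pairs` is a non-empty list of `(c1, c2, v)` tuples as in the question. It includes a vectorized `corr_basic_calc` so the block runs on its own and keeps the `0.05` coefficient from the original.

```python
import torch
import torch.nn.functional as F


def corr_basic_calc(weights, y_pred):
    # Vectorized helper: m_c is (num_classes, batch), sum_m_c is (num_classes,).
    return weights.shape[0], y_pred.t(), y_pred.sum(dim=0)


def loss_fn(outputs, targets, weights, uncorrelated_c_pairs):
    num_classes, m_c, sum_m_c = corr_basic_calc(weights, outputs)

    # Correlation penalty, written as in the question. It uses the raw
    # outputs (logits); if probabilities were intended, apply
    # torch.sigmoid(outputs) first (an assumption, not in the original).
    corr_loss = outputs.new_zeros(())
    for c1, c2, v in uncorrelated_c_pairs:
        corr_loss = corr_loss + torch.abs(
            torch.sum(m_c[c1] * m_c[c2]) / sum_m_c[c1] - v
        )

    # Element-wise BCE with shape (batch, num_classes), so the class
    # weights apply to each entry rather than to a pre-averaged scalar.
    bce = F.binary_cross_entropy_with_logits(outputs, targets, reduction="none")
    class_weights = weights[:, 0] * (1 - targets) + weights[:, 1] * targets
    weighted = torch.mean(class_weights * bce, dim=1)  # one value per sample

    return weighted + 0.05 * corr_loss / len(uncorrelated_c_pairs)


# Made-up example inputs: batch of 4, 3 classes.
torch.manual_seed(0)
outputs = torch.randn(4, 3, requires_grad=True)
targets = torch.randint(0, 2, (4, 3)).float()
weights = torch.tensor([[1.0, 2.0], [1.0, 3.0], [1.0, 1.5]])
loss = loss_fn(outputs, targets, weights, [(0, 1, 0.0), (1, 2, 0.0)])
loss.mean().backward()  # gradients flow back to outputs
```

Like the original, this returns one loss value per sample; reduce it with `.mean()` (or `.sum()`) before calling `backward()`.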

But I'm getting this error:

```
in corr_basic_calc(weights, y_pred)
      6         m_c.append(y_pred[:,i])
      7         sum_m_c.append(torch.sum(m_c[i]))
----> 8     return num_classes, torch.tensor(m_c,dtype=torch.long), torch.tensor(sum_m_c,dtype=torch.long)

ValueError: only one element tensors can be converted to Python scalars
```
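The error can be reproduced in isolation. `torch.tensor` tries to turn each list element into a Python number, which only works for one-element tensors; for a list of same-shaped tensors, `torch.stack` is the usual way to combine them. A small sketch with made-up values (the name `cols` is hypothetical):

```python
import torch

# Two hypothetical "class columns", each holding 2 values.
cols = [torch.tensor([0.2, 0.4]), torch.tensor([0.5, 0.1])]

try:
    torch.tensor(cols)  # elements have 2 values each, so conversion fails
except ValueError as err:
    print("torch.tensor failed:", err)

# torch.stack joins same-shaped tensors along a new first dimension and
# keeps their dtype and autograd history.
stacked = torch.stack(cols)
print(stacked.shape)  # torch.Size([2, 2])
```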

Can you help me solve it?