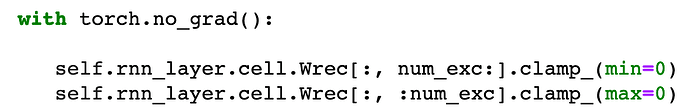

Hello, I am working on constraining the weights in an RNN model. My goal is for one portion of the weights to be strictly positive and another portion to be strictly negative. I looked around on the forums, and the approach shown in the code above seems to be the recommended one. I execute this code after my optimizer step.
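For readers who cannot see the screenshot, here is a minimal sketch of this kind of post-step constraint. It assumes PyTorch and is not necessarily identical to the code in the image. The mask `pos_mask`, the margin `eps`, the helper `project_weights`, and the choice to constrain only `weight_hh_l0` are all hypothetical and chosen for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=4, hidden_size=8, batch_first=True)
optimizer = torch.optim.SGD(rnn.parameters(), lr=0.01)

# Hypothetical split: the first 4 columns of the recurrent matrix must be
# positive, the remaining columns must be negative.
pos_mask = torch.zeros_like(rnn.weight_hh_l0, dtype=torch.bool)
pos_mask[:, :4] = True
eps = 1e-6  # small margin so the weights stay strictly away from zero


def project_weights():
    """Clamp the recurrent weights back into their sign constraints."""
    with torch.no_grad():  # keep the projection out of the autograd graph
        w = rnn.weight_hh_l0
        w.copy_(torch.where(pos_mask, w.clamp(min=eps), w.clamp(max=-eps)))


project_weights()  # enforce the constraint before training starts

x = torch.randn(16, 10, 4)        # dummy batch: (batch, time, features)
target = torch.randn(16, 10, 8)   # dummy regression target

for step in range(5):
    optimizer.zero_grad()
    out, _ = rnn(x)
    loss = nn.functional.mse_loss(out, target)
    loss.backward()
    optimizer.step()
    project_weights()  # re-apply the constraint after every optimizer step
```

The projection runs under `torch.no_grad()` and writes into the existing parameter with `copy_`, so the optimizer keeps tracking the same tensor.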

However, doing so causes my loss to become NaN. Interestingly, if I modify my weights using another method (e.g. applying the tanh function or multiplying by negative 1), the model trains without any issues. I’m not sure how to move forward, and any help would be appreciated.
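One way to narrow down where the NaN first appears is to scan the parameters and their gradients for non-finite values after each step. The sketch below assumes PyTorch; `first_nonfinite` is a hypothetical helper, not a library function, and the NaN is injected by hand purely to show what the check reports.

```python
import torch
import torch.nn as nn


def first_nonfinite(module):
    """Return the first parameter or gradient containing NaN/inf, else None."""
    for name, p in module.named_parameters():
        if not torch.isfinite(p).all():
            return f"{name} (value)"
        if p.grad is not None and not torch.isfinite(p.grad).all():
            return f"{name} (grad)"
    return None


rnn = nn.RNN(input_size=4, hidden_size=8, batch_first=True)
clean = first_nonfinite(rnn)  # freshly initialised weights are finite

# Inject a NaN by hand to demonstrate the report format.
with torch.no_grad():
    rnn.weight_hh_l0[0, 0] = float("nan")
broken = first_nonfinite(rnn)
```

Calling the helper right after `loss.backward()` and again after the constraint step would indicate whether the gradients or the constrained weights go bad first.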