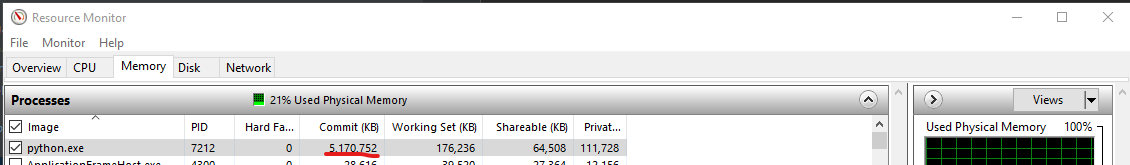

When PyTorch loads the CUDA libraries into RAM, it uses a huge chunk of memory. I am using multiple processes, and only some of them need the CUDA libraries (most can run on the CPU). Is there a way to `import torch` without loading the CUDA libraries in some processes, while still loading them in others?

Do you mean the host or device memory?

I’m not sure how the host memory behaves, but the device memory shouldn’t be used if you are not calling any CUDA operations in your script.
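One way to make sure a CPU-only process never touches the GPU is to hide the devices from it before any CUDA call happens. Below is a minimal sketch, assuming each worker can run a setup step first. `configure_worker` and `cuda_status` are hypothetical helper names, not part of PyTorch. `CUDA_VISIBLE_DEVICES` is the standard CUDA environment variable, and setting it to an empty string hides every GPU from that process. This keeps a CUDA context from being created. Whether it also reduces host memory at `import torch` time is not established here and would need to be measured.

```python
import os


def configure_worker(use_gpu: bool) -> None:
    """Hypothetical setup step: run first in each worker process.

    For CPU-only workers, hide all GPUs so no CUDA context can be created.
    This must happen before the first CUDA call in the process.
    """
    if not use_gpu:
        os.environ["CUDA_VISIBLE_DEVICES"] = ""


def cuda_status():
    """Return (is_available, is_initialized), or None if torch is missing."""
    try:
        import torch  # imported lazily, after the environment is configured
    except ImportError:
        return None
    return torch.cuda.is_available(), torch.cuda.is_initialized()
```

In a multi-process setup, `configure_worker(use_gpu=False)` would be the first statement of the target function for CPU-only workers, and `cuda_status()` can be used afterwards to confirm that CUDA was never initialized in that process.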