I am seeing some unusual behavior when I train my multi-label classifier using BCEWithLogitsLoss with pos_weight values calculated by the following function:

    import numpy as np
    import torch
    import torch.nn as nn

    def calculate_pos_weights(class_counts):
        pos_weights = np.ones_like(class_counts)
        # data is defined elsewhere; len(data) is used as the total number of samples
        neg_counts = [len(data) - pos_count for pos_count in class_counts]
        print(neg_counts)
        for cdx, (pos_count, neg_count) in enumerate(zip(class_counts, neg_counts)):
            # ratio of negatives to positives; 1e-5 guards against division by zero
            pos_weights[cdx] = neg_count / (pos_count + 1e-5)
        print(pos_weights)
        return torch.as_tensor(pos_weights, dtype=torch.float)

    criterion = nn.BCEWithLogitsLoss(pos_weight=calculate_pos_weights(weight_balance).to(device))
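
One thing that may be worth checking in the function above: np.ones_like keeps the dtype of its input, so if class_counts is an integer array, the ratios written into pos_weights are truncated to integers. The sketch below is not my training code; the function name, the num_samples parameter, and the counts are made up for illustration. It computes the same ratios in floating point and shows what an integer buffer would store instead.

```python
import numpy as np

def pos_weights_from_counts(class_counts, num_samples, eps=1e-5):
    """Return neg_count / (pos_count + eps) per class as float64 (hypothetical helper)."""
    pos = np.asarray(class_counts, dtype=np.float64)
    neg = num_samples - pos
    return neg / (pos + eps)

# Made-up integer counts for three classes out of 100 samples.
counts = np.array([10, 50, 90])
weights = pos_weights_from_counts(counts, num_samples=100)

# An integer buffer, as np.ones_like(counts) would create here,
# silently truncates the float ratios on assignment.
int_buffer = np.ones_like(counts)
int_buffer[:] = weights

print(weights)     # float ratios, roughly 9, 1 and 0.11
print(int_buffer)  # truncated integer values
```

If the float version is what was intended, the result can be passed to torch.as_tensor(weights, dtype=torch.float) exactly as in the original function.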

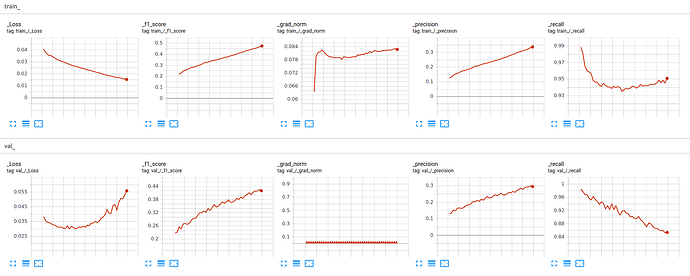

Notice how recall starts at 1 and decreases while precision increases (so the F1 score improves), yet the loss goes up, which suggests the model is over-fitting.

When I train this model without pos_weight, I do not have this issue, but I believe I can get better performance with a weighted loss.
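
To make the comparison between the weighted and unweighted loss concrete, here is a NumPy sketch of the per-element formula given in the PyTorch documentation for BCEWithLogitsLoss, loss = -[p * y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))], averaged over all elements. The function name and the toy inputs are made up, and this is only an illustration of the formula, not PyTorch's implementation.

```python
import numpy as np

def weighted_bce_with_logits(logits, targets, pos_weight):
    """Mean of -[p*y*log(sigmoid(x)) + (1-y)*log(1-sigmoid(x))] (illustrative only)."""
    logits = np.asarray(logits, dtype=np.float64)
    targets = np.asarray(targets, dtype=np.float64)
    # Stable forms: log(sigmoid(x)) = -log(1 + exp(-x)),
    #               log(1 - sigmoid(x)) = -log(1 + exp(x)).
    log_sig = -np.logaddexp(0.0, -logits)
    log_one_minus_sig = -np.logaddexp(0.0, logits)
    loss = -(pos_weight * targets * log_sig + (1.0 - targets) * log_one_minus_sig)
    return loss.mean()

# One positive and one negative label, both predicted with logit 0 (probability 0.5).
logits = [0.0, 0.0]
targets = [1.0, 0.0]

unweighted = weighted_bce_with_logits(logits, targets, pos_weight=1.0)
weighted = weighted_bce_with_logits(logits, targets, pos_weight=9.0)
print(unweighted, weighted)
```

With identical predictions, the weighted value is larger because the positive term is multiplied by pos_weight, so the absolute loss values from the two runs are not on the same scale.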

Can anyone find and explain the issue?

Thanks!