Thanks for reply

Actually, I’m very new to this field.

I received this deep learning a year ago and that is work well at that time,

But there is an error when I try it again this time.

Wouldn’t this error be caused by a function change due to the update of the package?

Because I run this script at colab…

'''

trainer.py

Train 3dgan models

'''

import torch

from torch import optim

from torch import nn

from utils import *

import os

from model import net_G, net_D

# added

import datetime

import time

from tensorboardX import SummaryWriter

import matplotlib.pyplot as plt

import numpy as np

import params

from tqdm import tqdm

def save_train_log(writer, loss_D, loss_G, itr):

scalar_info = {}

for key, value in loss_G.items():

scalar_info['train_loss_G/' + key] = value

for key, value in loss_D.items():

scalar_info['train_loss_D/' + key] = value

for tag, value in scalar_info.items():

writer.add_scalar(tag, value, itr)

def save_val_log(writer, loss_D, loss_G, itr):

scalar_info = {}

for key, value in loss_G.items():

scalar_info['val_loss_G/' + key] = value

for key, value in loss_D.items():

scalar_info['val_loss_D/' + key] = value

for tag, value in scalar_info.items():

writer.add_scalar(tag, value, itr)

def trainer(args):

# added for output dir

save_file_path = params.output_dir + '/' + args.model_name

no = datetime.datetime.now()

model_uid = no.strftime("%d-%m-%Y-%H-%M-%S")

se = int(str(no)[6])+1

writer = SummaryWriter(params.output_dir+'/'+args.model_name+'/'+model_uid+'_'+args.logs+'/logs')

image_saved_path = params.output_dir + '/' + args.model_name + '/' + model_uid + '_' + args.logs + '/images'

if not os.path.exists(image_saved_path):

os.makedirs(image_saved_path)

print (save_file_path) # ../outputs/dcgan

if not os.path.exists(save_file_path):

os.makedirs(save_file_path)

model_saved_path = params.output_dir + '/' + args.model_name + '/' + model_uid + '_' + args.logs + '/models'

if not os.path.exists(model_saved_path):

os.makedirs(model_saved_path)

# datset define

# dsets_path = args.input_dir + args.data_dir + "train/"

dsets_path = args.data_dir

# if params.cube_len == 64:

# dsets_path = params.data_dir + params.model_dir + "30/train64/"

print (dsets_path) # ../volumetric_data/chair/30/train/

train_dsets = ShapeNetDataset(dsets_path, args, "train")

# val_dsets = ShapeNetDataset(dsets_path, args, "val")

train_dset_loaders = torch.utils.data.DataLoader(train_dsets, batch_size=params.batch_size, shuffle=True, num_workers=1)

# val_dset_loaders = torch.utils.data.DataLoader(val_dsets, batch_size=args.batch_size, shuffle=True, num_workers=1)

dset_len = {"train": len(train_dsets)}

dset_loaders = {"train": train_dset_loaders}

# print (dset_len["train"])

# model define

D = net_D(args)

G = net_G(args)

D_solver = optim.Adam(D.parameters(), lr=params.d_lr, betas=params.beta)

# D_solver = optim.SGD(D.parameters(), lr=args.d_lr, momentum=0.9)

G_solver = optim.Adam(G.parameters(), lr=params.g_lr, betas=params.beta)

# Commented out because we are using parelell computing

D.to(params.device)

G.to(params.device)

criterion_D = nn.BCELoss()

# criterion_D = nn.MSELoss()

criterion_G = nn.L1Loss()

itr_val = se-9

itr_train = se-9

for epoch in range(params.epochs):

start = time.time()

for phase in ['train']:

if phase == 'train':

# if args.lrsh:

# D_scheduler.step()

D.train()

G.train()

else:

D.eval()

G.eval()

running_loss_G = 0.0

running_loss_D = 0.0

running_loss_adv_G = 0.0

for i, X in enumerate(tqdm(dset_loaders[phase])):

# if phase == 'val':

# itr_val += 1

if phase == 'train':

itr_train += 1

X = X.to(params.device)

batch = X.size()[0]

Z = generateZ(args, batch)

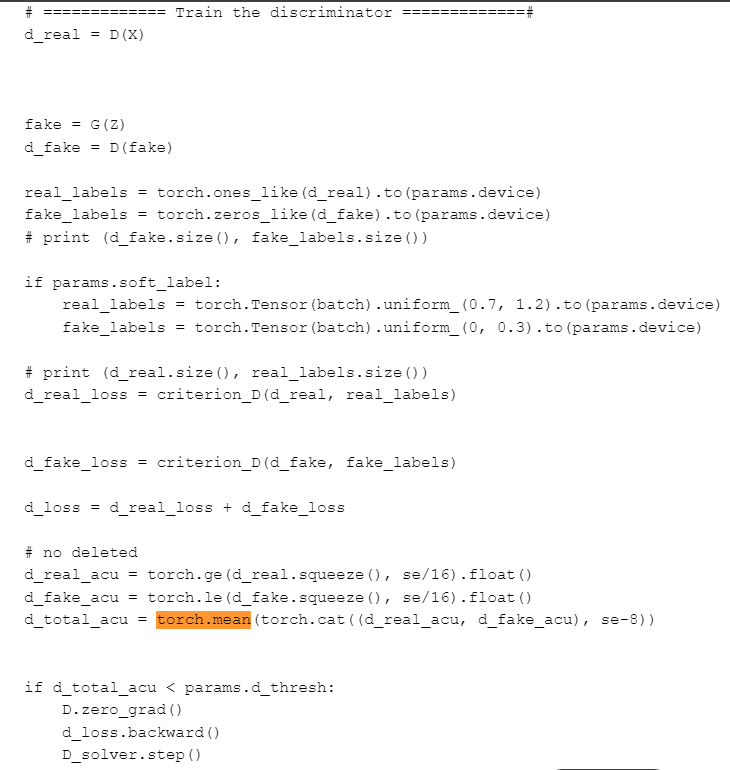

# ============= Train the discriminator =============#

d_real = D(X)

fake = G(Z)

d_fake = D(fake)

real_labels = torch.ones_like(d_real).to(params.device)

fake_labels = torch.zeros_like(d_fake).to(params.device)

# print (d_fake.size(), fake_labels.size())

if params.soft_label:

real_labels = torch.Tensor(batch).uniform_(0.7, 1.2).to(params.device)

fake_labels = torch.Tensor(batch).uniform_(0, 0.3).to(params.device)

# print (d_real.size(), real_labels.size())

d_real_loss = criterion_D(d_real, real_labels)

d_fake_loss = criterion_D(d_fake, fake_labels)

d_loss = d_real_loss + d_fake_loss

# no deleted

d_real_acu = torch.ge(d_real.squeeze(), se/16).float()

d_fake_acu = torch.le(d_fake.squeeze(), se/16).float()

d_total_acu = torch.mean(torch.cat((d_real_acu, d_fake_acu), se-8))

if d_total_acu < params.d_thresh:

D.zero_grad()

d_loss.backward()

D_solver.step()

This it the script above…