After epoch 1 it is throwing this error. Batch_size is 50.

Epoch 1, Batch 1 loss: 5.308178

‘’’

Building Model

import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def init(self):

super(Net, self).init()

self.conv1 = nn.Conv2d(in_channels = 1, out_channels = 32, kernel_size = 3, padding=1, stride=1)

self.relu = nn.ReLU()

self.maxpool = nn.MaxPool2d(kernel_size = 2)

self.conv2 = nn.Conv2d(in_channels = 32, out_channels = 64, kernel_size = 3, padding=1, stride=1)

self.conv3 = nn.Conv2d(in_channels = 64, out_channels = 128, kernel_size = 3, padding=1, stride=1)

self.conv4 = nn.Conv2d(in_channels = 128, out_channels = 256, kernel_size = 3, padding=1, stride=1)

#nn.Flatten()

self.fc1 = nn.Linear(in_features = 16384, out_features = 8192)

self.fc2 = nn.Linear(in_features = 8192, out_features = 256)

self.linear1 = nn.Linear(in_features = 256, out_features = 100) # For age class output

self.linear2 = nn.Linear(in_features = 256, out_features = 2) # For gender class output

def forward(self, x):

out = self.conv1(x)

out = self.relu(out)

out = self.maxpool(out)

out = self.conv2(out)

out = self.relu(out)

out = self.maxpool(out)

out = self.conv3(out)

out = self.relu(out)

out = self.maxpool(out)

out = self.conv4(out)

out = self.relu(out)

out = self.maxpool(out)

out = out.view(x.size(0),-1)

out = F.sigmoid(self.fc1(out))

out = F.sigmoid(self.fc2(out))

label1 = torch.sigmoid(self.linear1(out)) # Age output

label2 = torch.sigmoid(self.linear2(out)) # Gender output

return {'label1': label1, 'label2': label2}

‘’’

‘’’

import torch.optim as optim

For multilabel output:Age

criterion_multioutput = nn.CrossEntropyLoss()

For binary output:Gender

criterion_binary = nn.CrossEntropyLoss()

optimizer = optim.SGD(net.parameters(), lr=0.001)

‘’’

‘’’

def train_model(model, criterion1, criterion2, optimizer, n_epochs=25):

“”“returns trained model”“”

# initialize tracker for minimum validation loss

valid_loss_min = np.Inf

for epoch in range(1, n_epochs):

train_loss = 0.0

valid_loss = 0.0

# train the model #

model.train()

for batch_idx, sample_batched in enumerate(train_dataloader):

image, label1, label2 = sample_batched

# importing data and moving to CPU

image, label1, label2 = sample_batched[X_train].to(device),\

sample_batched[y_age_train].to(device),\

sample_batched[y_gender_train].to(device)

print(type(sample_batched))

# zero the parameter gradients

optimizer.zero_grad()

output = model(image.float())

output = model(image.float())

print(output)

label1_hat = output['label1']

label2_hat = output['label2']

#label3_hat=output['label3']

# calculate loss

print(label1.shape)

loss1 = criterion1(label1_hat, label1.squeeze().type(torch.LongTensor))

loss2 = criterion2(label2_hat, label2.squeeze().type(torch.LongTensor))

#loss3=criterion1(label3_hat, label3.squeeze().type(torch.LongTensor))

loss = loss1+loss2

# back prop

loss.backward()

# grad

optimizer.step()

train_loss = train_loss + ((1 / (batch_idx + 1)) * (loss.data - train_loss))

if batch_idx % 50 == 0:

print('Epoch %d, Batch %d loss: %.6f' %

(epoch, batch_idx + 1, train_loss))

# validate the model #

model.eval()

for batch_idx, sample_batched in enumerate(test_dataloader):

image, label1, label2 = sample_batched

image, label1, label2 = sample_batched[X_test].to(device),\

sample_batched[y_age_test].to(device),\

sample_batched[y_gender_test].to(device),\

#sample_batched[‘label_race’].to(device)

print(type(image))

output = model(image.float())

output = model(image.float())

label1_hat = output['label1']

label2_hat = output['label2']

#label3_hat=output['label3']

# calculate loss

loss1=criterion1(label1_hat, label1.squeeze().type(torch.LongTensor))

loss2=criterion2(label2_hat, label2.squeeze().type(torch.LongTensor))

#loss3=criterion1(label3_hat, label3.squeeze().type(torch.LongTensor))

loss = loss1+loss2

valid_loss = valid_loss + ((1 / (batch_idx + 1)) * (loss.data - valid_loss))

# print training/validation statistics

print('Epoch: {} \tTraining Loss: {:.6f} \tValidation Loss: {:.6f}'.format(

epoch, train_loss, valid_loss))

## TODO: save the model if validation loss has decreased

if valid_loss < valid_loss_min:

torch.save(model, 'model.pt')

print('Validation loss decreased ({:.6f} --> {:.6f}). Saving model ...'.format(

valid_loss_min,

valid_loss))

valid_loss_min = valid_loss

# return trained model

return model

‘’’

‘’’

model_conv = train_model(net, criterion_multioutput, criterion_binary, optimizer)

‘’’

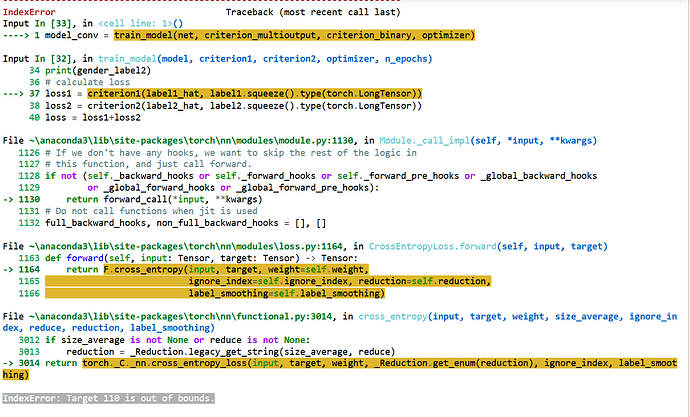

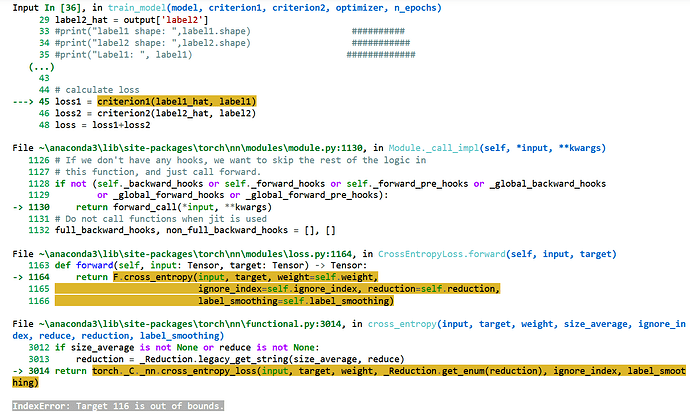

IndexError Traceback (most recent call last)

Input In [56], in <cell line: 1>()

----> 1 model_conv = train_model(net, criterion_multioutput, criterion_binary, optimizer)

Input In [55], in train_model(model, criterion1, criterion2, optimizer, n_epochs)

29 #label3_hat=output[‘label3’]

30 # calculate loss

31 print(label1.shape)

—> 32 loss1 = criterion1(label1_hat, label1.squeeze().type(torch.LongTensor))

33 loss2 = criterion2(label2_hat, label2.squeeze().type(torch.LongTensor))

34 #loss3=criterion1(label3_hat, label3.squeeze().type(torch.LongTensor))

File ~\anaconda3\lib\site-packages\torch\nn\modules\module.py:1130, in Module._call_impl(self, *input, **kwargs)

1126 # If we don’t have any hooks, we want to skip the rest of the logic in

1127 # this function, and just call forward.

1128 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks

1129 or _global_forward_hooks or _global_forward_pre_hooks):

→ 1130 return forward_call(*input, **kwargs)

1131 # Do not call functions when jit is used

1132 full_backward_hooks, non_full_backward_hooks = [], []

File ~\anaconda3\lib\site-packages\torch\nn\modules\loss.py:1164, in CrossEntropyLoss.forward(self, input, target)

1163 def forward(self, input: Tensor, target: Tensor) → Tensor:

→ 1164 return F.cross_entropy(input, target, weight=self.weight,

1165 ignore_index=self.ignore_index, reduction=self.reduction,

1166 label_smoothing=self.label_smoothing)

File ~\anaconda3\lib\site-packages\torch\nn\functional.py:3014, in cross_entropy(input, target, weight, size_average, ignore_index, reduce, reduction, label_smoothing)

3012 if size_average is not None or reduce is not None:

3013 reduction = _Reduction.legacy_get_string(size_average, reduce)

→ 3014 return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index, label_smoothing)

IndexError: Target 103 is out of bounds.

I tried to solve this error but it could not. Thank you in advance.