Hi, I'm using `nn.CrossEntropyLoss()` for segmentation, but I get this error:

```
IndexError                                Traceback (most recent call last)
in ()
     26 labels=labels[...,0].squeeze()
     27 labels = labels.squeeze_()
---> 28 loss1 = criterion1(logits_masks,masks1.long())
     29 loss2 = criterion1(logits_labels,labels)
     30 #print('loss1',loss1)

/usr/local/lib/python3.7/dist-packages/torch/nn/functional.py in cross_entropy(input, target, weight, size_average, ignore_index, reduce, reduction)
   2822     if size_average is not None or reduce is not None:
   2823         reduction = _Reduction.legacy_get_string(size_average, reduce)
-> 2824     return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index)
   2825 
   2826 

IndexError: Target 1 is out of bounds.
```
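For reference, `nn.CrossEntropyLoss` treats every target value as a class index, so each value must lie in `[0, C-1]`, where `C` is the size of dimension 1 of the logits (`[N, C, H, W]` for segmentation, with a `[N, H, W]` long target). Since the error is raised at line 28, a likely reading is that `logits_masks` has only one channel while `masks1` contains 1s. The snippet below is a minimal reproduction with made-up shapes (2 images, 8x8 pixels), not the original model. Depending on the PyTorch version, the out-of-range case may surface as `IndexError` or `RuntimeError`, so both are caught.

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()

# Made-up shapes: 2 images of 8x8 pixels, binary masks with values 0 and 1.
target = torch.zeros(2, 8, 8, dtype=torch.long)
target[:, :4, :] = 1  # top half of every mask is class 1

# Two output channels: valid class indices are 0 and 1, so this works.
logits_two_channels = torch.randn(2, 2, 8, 8)
loss_ok = criterion(logits_two_channels, target)
print(loss_ok.item())

# One output channel: only class index 0 is valid, so target value 1 is rejected.
logits_one_channel = torch.randn(2, 1, 8, 8)
try:
    criterion(logits_one_channel, target)
    raised = False
except (IndexError, RuntimeError) as err:
    raised = True
    print(type(err).__name__, err)
```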

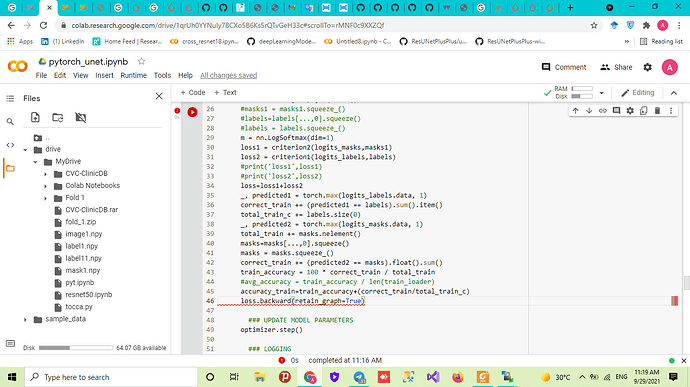

```python
for i, dat in enumerate(train_generator):
    j += 1
    features, masks, labels = dat
    features = features.to(device).float()
    masks = masks.to(device)
    labels = labels.to(device)
    optimizer.zero_grad()

    ### FORWARD AND BACK PROP
    features = features.permute(0, 3, 1, 2)
    logits_labels, logits_masks = model(features)
    masks1 = masks[..., 0].squeeze()
    #masks1 = masks1.squeeze_()
    labels = labels[..., 0].squeeze()
    labels = labels.squeeze_()
    loss1 = criterion1(logits_masks, masks1.long())
    loss2 = criterion1(logits_labels, labels)
    #print('loss1', loss1)
    #print('loss2', loss2)
    loss = loss1 + loss2
```
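Assuming the mask head really does output a single channel (the model isn't shown, so this is a guess based on "Target 1 is out of bounds" at line 28), two common ways out are sketched below with hypothetical tensors standing in for one batch: give the mask head two output channels and keep `nn.CrossEntropyLoss`, or keep the single channel and switch to `nn.BCEWithLogitsLoss`, which expects a float target with the same shape as the logits. The sketch also shows why `masks[..., 0]` is safer without the extra `.squeeze()`: with a batch size of 1, `.squeeze()` removes the batch axis too, leaving a `[H, W]` target.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for one batch: batch size 1, 8x8 pixels, channels-last
# masks (the loop above indexes masks[..., 0], so the mask sits in the last axis).
batch, height, width = 1, 8, 8
masks = torch.zeros(batch, height, width, 1)
masks[:, : height // 2, :, 0] = 1.0  # top half of the image labelled as class 1

# Indexing with [..., 0] already removes the trailing axis. Adding a bare
# .squeeze() also removes the batch axis when batch == 1.
squeezed = masks[..., 0].squeeze()
target = masks[..., 0].long()  # shape [N, H, W], class indices 0/1
print(squeezed.shape, target.shape)  # torch.Size([8, 8]) torch.Size([1, 8, 8])

# Option A (assumption about the model): the mask head outputs 2 channels,
# one logit per class, so CrossEntropyLoss sees C = 2 and target 1 is valid.
logits_masks_2ch = torch.randn(batch, 2, height, width)
loss_ce = nn.CrossEntropyLoss()(logits_masks_2ch, target)

# Option B (assumption about the model): keep a 1-channel head and treat the
# task as binary; BCEWithLogitsLoss wants a float target shaped like the logits.
logits_masks_1ch = torch.randn(batch, 1, height, width)
loss_bce = nn.BCEWithLogitsLoss()(logits_masks_1ch, target.unsqueeze(1).float())

print(loss_ce.item(), loss_bce.item())
```

Option A keeps a single loss function for both heads; option B avoids changing the model but needs a different criterion for the mask branch.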