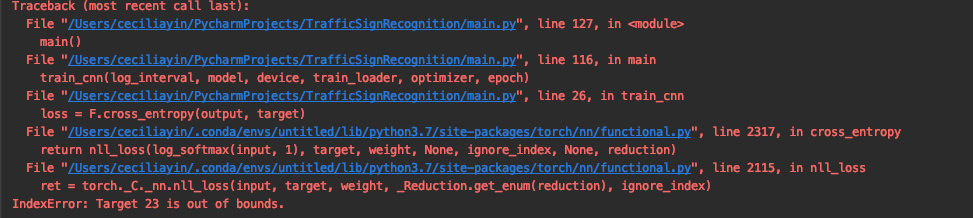

I’m training a CNN model and got this error:

This is my code for training the model:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    def train_cnn(log_interval, model, device, train_loader, optimizer, epoch):
        model.train()
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device), target.to(device)
            # zero the parameter gradients
            optimizer.zero_grad()
            # forward + backward + optimize
            output = model(data)
            loss = F.cross_entropy(output, target)
            # losses = []
            # losses.append(loss)
            loss.backward()
            optimizer.step()
            if batch_idx % log_interval == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                    epoch, batch_idx * len(data), len(train_loader.dataset),
                    100. * batch_idx / len(train_loader), loss.item()))

This is my model:

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            # Define 2D convolution layers
            # 3: input channels, 32: output channels, 5: kernel size, 1: stride
            self.conv1 = nn.Conv2d(3, 32, 5, 1)  # 3 input channels because all images are coloured (RGB)
            self.conv2 = nn.Conv2d(32, 64, 5, 1)
            self.conv3 = nn.Conv2d(64, 128, 3, 1)
            self.conv4 = nn.Conv2d(128, 256, 3, 1)
            # Dropout zeroes out some of the inputs with the given probability
            self.dropout1 = nn.Dropout2d(0.25)
            self.dropout2 = nn.Dropout2d(0.5)
            # Fully connected layers: input size, output size
            self.fc1 = nn.Linear(692224, 128)
            self.fc2 = nn.Linear(128, 10)

        # forward() links all layers together
        def forward(self, x):
            x = self.conv1(x)
            x = F.relu(x)
            x = self.conv2(x)
            x = F.relu(x)
            x = F.max_pool2d(x, 2)
            x = self.dropout1(x)
            x = self.conv3(x)
            x = F.relu(x)
            x = self.conv4(x)
            x = F.relu(x)
            x = F.max_pool2d(x, 2)
            x = self.dropout1(x)
            x = torch.flatten(x, 1)
            x = self.fc1(x)
            x = F.relu(x)
            x = self.dropout2(x)
            x = self.fc2(x)
            output = F.log_softmax(x, dim=1)
            return output
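
For reference, the `692224` passed to `fc1` can be checked by hand. The sketch below is plain Python (no PyTorch needed) and only mirrors the conv/pool layers of `Net` above. It assumes 224x224 input images, which is not stated anywhere in this post, and the helper names `conv_out`, `pool_out` and `flattened_features` are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Length of one spatial dimension after a Conv2d layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Length after max_pool2d when the stride equals the kernel size (the default)."""
    return (size - kernel) // kernel + 1


def flattened_features(height, width, channels=256):
    """Number of features that reach fc1 for the conv/pool stack in Net."""
    dims = []
    for size in (height, width):
        size = conv_out(size, 5)  # conv1, kernel 5
        size = conv_out(size, 5)  # conv2, kernel 5
        size = pool_out(size, 2)  # first max_pool2d
        size = conv_out(size, 3)  # conv3, kernel 3
        size = conv_out(size, 3)  # conv4, kernel 3
        size = pool_out(size, 2)  # second max_pool2d
        dims.append(size)
    # conv4 has 256 output channels, so flatten gives channels * H * W
    return channels * dims[0] * dims[1]


# Assumed input size: 224x224 (not confirmed in the post)
print(flattened_features(224, 224))  # 692224
```

If the images coming out of the data loader have a different size, this number changes and `fc1` would no longer match the flattened tensor.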

Any advice would be appreciated. Thanks in advance!