_joker

March 26, 2020, 2:13am


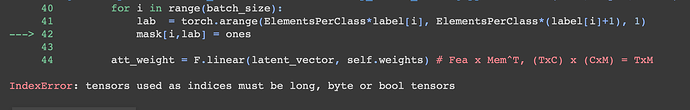

I get this error only during the testing phase; training and validation run without any problems.

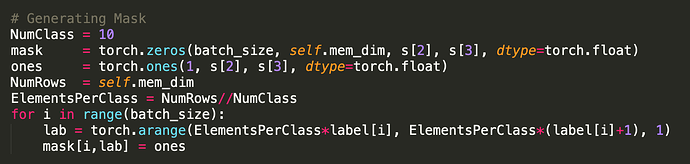

The code snippet looks like the one below,

`lab` is a tensor, and printing it on successive iterations gives ranges like these:

```
tensor([6, 7, 8])
tensor([ 9, 10, 11])
tensor([21, 22, 23])
```

(Note: `lab` can have any length n, depending on the value of `ElementsPerClass`.)
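Since the snippet itself is only available as an image, here is a hypothetical reconstruction, assuming `lab` is built with `torch.arange` from a class index (`class_idx` and `class_range` are made-up names) and `ElementsPerClass`. With `ElementsPerClass = 3` it reproduces the three tensors printed above.

```python
import torch


def class_range(class_idx: int, elements_per_class: int) -> torch.Tensor:
    # Hypothetical helper: returns the indices
    # [class_idx * n, (class_idx + 1) * n) with n = elements_per_class.
    # torch.arange excludes the end value.
    start = class_idx * elements_per_class
    end = start + elements_per_class
    return torch.arange(start, end)


ElementsPerClass = 3
for class_idx in (2, 3, 7):
    print(class_range(class_idx, ElementsPerClass))
```

Under this assumption the length of `lab` always equals `ElementsPerClass`, which matches the note above.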


Could you check whether `lab` is an empty tensor at some point? This can happen with `torch.arange` if `start` and `end` have equal values.
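`torch.arange(start, end)` yields the values in the half-open interval [start, end), so equal `start` and `end` give an empty tensor instead of raising an error. A minimal debugging sketch: wrap the call in a check (`checked_range` is a hypothetical name) so an empty range fails loudly where it is created.

```python
import torch

# Equal start and end values produce an empty tensor, not an error.
empty = torch.arange(5, 5)
print(empty.numel())  # 0


def checked_range(start: int, end: int) -> torch.Tensor:
    # Hypothetical debug guard: raise immediately if the range is empty.
    lab = torch.arange(start, end)
    if lab.numel() == 0:
        raise ValueError(f"empty lab: start={start}, end={end}")
    return lab


print(checked_range(1, 2))  # a single-element range is fine
```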

_joker

March 26, 2020, 6:31am


I am testing with NumRows = 10, that is, ElementsPerClass = 1, so `lab` is a single-value tensor on every iteration, for example:

```
tensor([1])
tensor([4])
tensor([7])
```

This is because I want a single masking tile for `self.mem_dim = 10`.
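The masking code isn't shown, so this is only a sketch that assumes the mask is a 1-D 0/1 vector of length `mem_dim` with ones at the positions in `lab`; `build_mask` is a hypothetical name. One detail worth knowing: assigning through an empty index tensor (`mask[lab] = 1.0` with an empty `lab`) raises no error and leaves the mask all zeros, so an empty `lab` can pass silently and only cause a failure later. The explicit checks surface that at the point where the mask is built.

```python
import torch


def build_mask(lab: torch.Tensor, mem_dim: int) -> torch.Tensor:
    # Hypothetical: 1-D float mask of length mem_dim, ones at lab's indices.
    if lab.numel() == 0:
        raise ValueError("lab is empty; check the start/end passed to torch.arange")
    if lab.min() < 0 or lab.max() >= mem_dim:
        raise IndexError(f"lab {lab.tolist()} out of range for mem_dim={mem_dim}")
    mask = torch.zeros(mem_dim)
    mask[lab] = 1.0
    return mask


mem_dim = 10
ElementsPerClass = 1
for start in (1, 4, 7):
    # With ElementsPerClass = 1 each range holds exactly one index.
    lab = torch.arange(start, start + ElementsPerClass)
    print(build_mask(lab, mem_dim))
```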