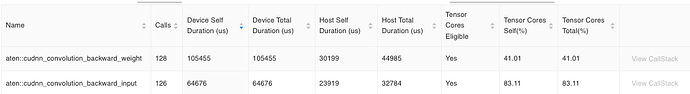

I am using torch.profiler.profile to benchmark my training. While exploring the operator-wise runtime in TensorBoard, I noticed that each operator spends a considerable amount of time on the host compared to its device time. The attached screenshot shows one such instance.

Here I am comparing Device Self Duration with Host Self Duration. The operator's host time is close to 30% of its GPU time (for conv_backward_weight, GPU time is 105 ms while host time is 30 ms). This looks like a considerable bottleneck for training. What kind of host operations are involved in aten::cudnn_convolution_backward_weight (or other similar operators) that lead to such high host time? Also, do these host operations run in parallel with the GPU kernel execution, or do they run synchronously?
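To make the second question concrete, here is a minimal sketch of how the overlap could be checked empirically. It rests on the fact that PyTorch enqueues CUDA kernels asynchronously by default, so a call can return on the host before the GPU has finished. The helper names `time_launch_and_total` and `conv_backward_demo`, and the layer and batch sizes, are hypothetical and not taken from my training code. The demo assumes a CUDA device is available.

```python
import time


def time_launch_and_total(launch, wait):
    """Time a host call and the work it enqueued.

    Returns (seconds until launch() returned on the host,
             seconds until wait() confirmed the work finished).
    """
    start = time.perf_counter()
    launch()                      # host-side work: dispatch, setup, kernel launch
    launched = time.perf_counter() - start
    wait()                        # block until the enqueued work has completed
    total = time.perf_counter() - start
    return launched, total


def conv_backward_demo():
    """Hypothetical demo: time the backward pass of a single conv layer."""
    import torch  # imported here so the timing helper above needs no GPU stack

    # Illustrative sizes only (assumption, not my real model).
    conv = torch.nn.Conv2d(64, 64, kernel_size=3, padding=1).cuda()
    x = torch.randn(32, 64, 56, 56, device="cuda")

    # Warm-up so one-time initialization does not distort the timing.
    conv(x).sum().backward()
    torch.cuda.synchronize()

    loss = conv(x).sum()
    torch.cuda.synchronize()      # make sure the forward pass is done first

    launched, total = time_launch_and_total(loss.backward, torch.cuda.synchronize)
    print(f"host returned after {launched * 1e3:.2f} ms, "
          f"GPU finished after {total * 1e3:.2f} ms")
```

Calling `conv_backward_demo()` on a GPU machine prints both times. If the host returns well before the GPU finishes, the host-side work is overlapping with kernel execution rather than adding to it. This measures a single layer in isolation, so it only hints at what happens inside a full training step.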