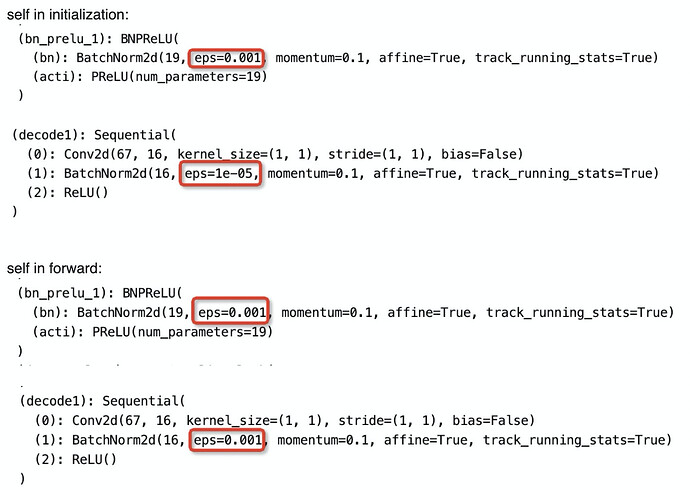

In my network, I first set `eps` of a BN layer to 1e-3 like this:

```python
import torch.nn as nn


class BNPReLU(nn.Module):
    def __init__(self, nIn):
        super().__init__()
        # This BN layer explicitly uses eps=1e-3
        self.bn = nn.BatchNorm2d(nIn, eps=1e-3)
        self.acti = nn.PReLU(nIn)

    def forward(self, input):
        output = self.bn(input)
        output = self.acti(output)
        return output
```
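As a sanity check, `eps` is stored per module instance, and in PyTorch a plain `nn.BatchNorm2d` keeps the default `eps=1e-5`. The sketch below (an illustrative check, not the original code) confirms that on a freshly built `BNPReLU`, and compares the BN output in train mode against `torch.nn.functional.batch_norm` called with an explicit `eps`, to see which value the layer actually uses when it runs.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class BNPReLU(nn.Module):
    def __init__(self, nIn):
        super().__init__()
        self.bn = nn.BatchNorm2d(nIn, eps=1e-3)
        self.acti = nn.PReLU(nIn)

    def forward(self, input):
        return self.acti(self.bn(input))


torch.manual_seed(0)
block = BNPReLU(4)
plain_bn = nn.BatchNorm2d(4)  # default eps
print(block.bn.eps, plain_bn.eps)  # 0.001 1e-05

block.train()
x = torch.randn(2, 4, 5, 5)
w, b = block.bn.weight, block.bn.bias

# Reference results using batch statistics (training=True) and a chosen eps;
# passing None for running stats means they are not updated here.
ref_1e3 = F.batch_norm(x, None, None, w, b, training=True, eps=1e-3)
ref_1e5 = F.batch_norm(x, None, None, w, b, training=True, eps=1e-5)

actual = block.bn(x)  # the module reads self.eps when it runs
print(torch.allclose(actual, ref_1e3, atol=1e-6))  # matches eps=1e-3
print(torch.allclose(actual, ref_1e5, atol=1e-6))  # does not match eps=1e-5
```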

Then I add another BN layer, this time with the default `eps`, like this:

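```python
class Network(nn.Module):
    def __init__(self, nIn, inp, init_channels, kernel_size, stride, relu=True):
        super().__init__()
        # BN (eps=1e-3) + PReLU block defined above
        self.bn_relu = BNPReLU(nIn)
        # This BN layer uses the default eps
        self.primary_conv = nn.Sequential(
            nn.Conv2d(inp, init_channels, kernel_size, stride, kernel_size // 2, bias=False),
            nn.BatchNorm2d(init_channels),
            nn.ReLU(inplace=True) if relu else nn.Sequential(),
        )
```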

However, when I run my code with `model.train()`, `eps` is 1e-3 in every BN layer. While debugging, I found that `eps` is set correctly when the network is initialized, but once execution enters `forward()`, every BN layer's `eps` is 1e-3. I don't know why.
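One way to narrow down where the value changes is to record each BN layer's `eps` right after construction and again at the moment each layer is called, using forward pre-hooks. This is a minimal sketch: `ToyNetwork` is a hypothetical stand-in for `Network` (its channel sizes and `forward()` are assumptions, since the post does not show them), and the helper names `eps_at_init` and `record_eps_during_forward` are illustrative only. In this toy model both snapshots should agree; if they differ in the real model, something between construction and `forward()` is reassigning `eps`.

```python
import torch
import torch.nn as nn


class BNPReLU(nn.Module):
    def __init__(self, nIn):
        super().__init__()
        self.bn = nn.BatchNorm2d(nIn, eps=1e-3)
        self.acti = nn.PReLU(nIn)

    def forward(self, input):
        return self.acti(self.bn(input))


class ToyNetwork(nn.Module):
    """Hypothetical stand-in for Network: BNPReLU, then conv + default-eps BN."""

    def __init__(self, channels=4, kernel_size=3):
        super().__init__()
        self.bn_relu = BNPReLU(channels)
        self.primary_conv = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size, 1, kernel_size // 2, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
        )

    def forward(self, x):
        return self.primary_conv(self.bn_relu(x))


def eps_at_init(model):
    # Snapshot of eps for every BatchNorm2d, keyed by module name
    return {name: m.eps for name, m in model.named_modules()
            if isinstance(m, nn.BatchNorm2d)}


def record_eps_during_forward(model, x):
    seen, handles = {}, []
    for name, m in model.named_modules():
        if isinstance(m, nn.BatchNorm2d):
            # Default arg binds this layer's name; the hook runs just before the layer's forward
            def hook(module, args, name=name):
                seen[name] = module.eps
            handles.append(m.register_forward_pre_hook(hook))
    try:
        model(x)
    finally:
        for h in handles:
            h.remove()  # leave the model unchanged afterwards
    return seen


model = ToyNetwork()
model.train()
init_eps = eps_at_init(model)
fwd_eps = record_eps_during_forward(model, torch.randn(2, 4, 8, 8))
print(init_eps)
print(fwd_eps)
```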