Hi team,

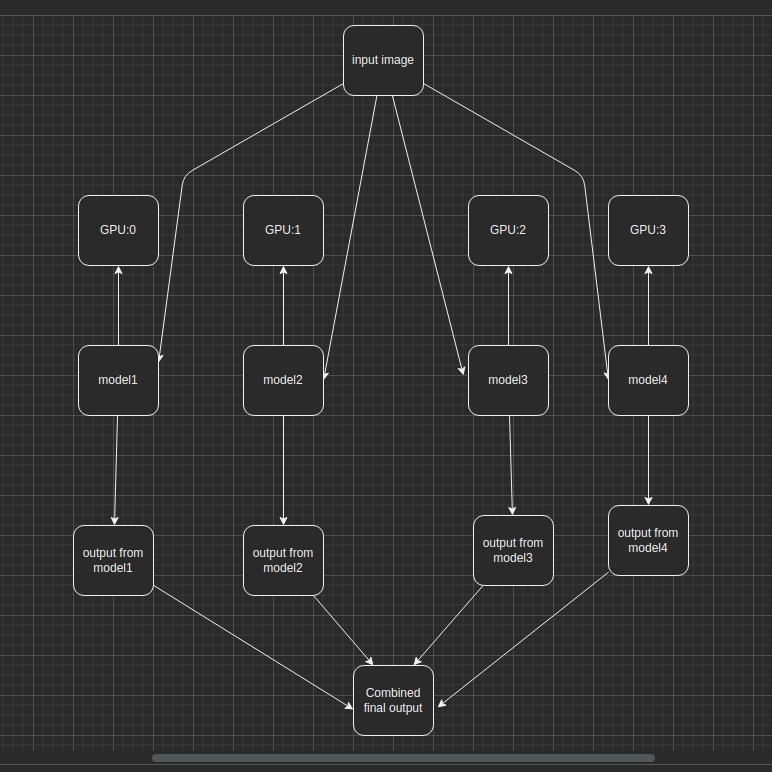

I have built four models, and all four take the same input data for prediction. Finally, the four prediction results should be combined to form a single output.

I'm using a p3.8xlarge instance, which has 4 GPUs and 32 vCPUs.

Is it possible to attach 4 different models to 4 different GPUs?

Something like this:

```python
model1 = model1.cuda(0)  # GPU id 0
model2 = model2.cuda(1)  # GPU id 1
model3 = model3.cuda(2)  # GPU id 2
model4 = model4.cuda(3)  # GPU id 3
```
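A minimal sketch of this placement, assuming small hypothetical stand-ins for the four models (`make_model` is illustrative only) and simple averaging as the combination step. Each model lives on its own device, the input is copied to each device, and the outputs are gathered on the CPU. Because CUDA kernel launches are asynchronous, the plain loop can largely queue work on all four GPUs before the first `.cpu()` call waits for results. The sketch falls back to the CPU when fewer than four GPUs are visible, so it also runs on a machine without GPUs.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for each trained model (same input and output shapes).
def make_model() -> nn.Module:
    return nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4))

# One GPU per model when available; otherwise fall back to CPU so the sketch still runs.
n_gpus = torch.cuda.device_count()
devices = [torch.device(f"cuda:{i}") if i < n_gpus else torch.device("cpu") for i in range(4)]

models = [make_model().to(dev).eval() for dev in devices]

@torch.no_grad()
def predict_all(x: torch.Tensor) -> torch.Tensor:
    # Launch one forward pass per device; CUDA kernel launches return immediately,
    # so the GPUs can compute concurrently while this loop continues.
    outputs = [m(x.to(dev, non_blocking=True)) for m, dev in zip(models, devices)]
    # Gathering on the CPU is where we wait for every device to finish.
    # Averaging is an assumption; substitute whatever combination your task needs.
    return torch.stack([o.cpu() for o in outputs]).mean(dim=0)

x = torch.randn(8, 16)
y = predict_all(x)
print(y.shape)  # torch.Size([8, 4])
```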

And how can I call these four models in parallel for prediction, i.e. pass the same input to all four models at the same time and collect their predictions?

Something like the flow diagram below.
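If a plain loop does not overlap the models enough (for example, because a model does CPU-side work or synchronizes internally), one common alternative is one Python thread per model. This sketch assumes the same kind of hypothetical stand-in models and an averaging combination rule; `make_model`, `run_one` and `predict_all` are illustrative names, not an existing API.

```python
import torch
import torch.nn as nn
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for each trained model.
def make_model() -> nn.Module:
    return nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 4))

# One GPU per model when available; otherwise fall back to CPU.
n_gpus = torch.cuda.device_count()
devices = [torch.device(f"cuda:{i}") if i < n_gpus else torch.device("cpu") for i in range(4)]
models = [make_model().to(dev).eval() for dev in devices]

def run_one(model: nn.Module, device: torch.device, x: torch.Tensor) -> torch.Tensor:
    # Grad mode is thread-local, so no_grad() is entered inside each worker thread.
    with torch.no_grad():
        out = model(x.to(device))
    return out.cpu()  # waits for this model's device only; other threads keep running

# One worker per model; many PyTorch ops release the GIL, so the threads can overlap.
executor = ThreadPoolExecutor(max_workers=len(models))

def predict_all(x: torch.Tensor) -> torch.Tensor:
    futures = [executor.submit(run_one, m, d, x) for m, d in zip(models, devices)]
    preds = [f.result() for f in futures]  # results come back in model order
    return torch.stack(preds).mean(dim=0)  # assumed combination rule: average
```

Usage is `y = predict_all(batch)`. Separate processes (e.g. via `torch.multiprocessing`) sidestep the GIL entirely, at the cost of moving inputs and outputs between processes.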