It’s taking me almost 8-9 hours to install PyTorch 1.10 with CUDA 11.3. Is that normal, or is something wrong? I am using a GeForce RTX 3090.

Something seems to be wrong.

Installing the pip wheels or conda binaries should take seconds to minutes depending on your internet connection, while building from source could take between 15 and 120 minutes on x86 depending on your workstation, the selected GPU architectures, etc., and potentially much longer on embedded devices if you build natively on them.

Could you describe your install approach and what kind of output you are seeing?

@ptrblck is there a way to avoid having pytorch install the CUDA runtime if I have everything installed on the system already, but still use pre-compiled binaries?

The sizes involved here are a bit insane to me: 1GB for the pytorch conda package, almost 1GB for the cuda conda package, and ~2GB for the pytorch pip wheels.

Why do you force the CUDA package requirement on the CUDA-enabled pytorch conda package?

I’d like to use pytorch in CI, but given the sizes involved here (and the time needed to compile from source), I’m not sure I want to use pytorch at all anymore.

The binaries ship with the CUDA runtime for ease of use, as users often struggle to install a local CUDA toolkit together with other libraries such as cuDNN and NCCL.

To use the GPU on your system in PyTorch you would thus only need to install the correct NVIDIA driver and one of the binary packages.

Building from source would use your local setup and would of course avoid having to download the binaries.
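To see what a given binary actually ships with, a small sketch like the following can help. It assumes PyTorch is importable in the current environment, and the helper name cuda_build_info is hypothetical. torch.version.cuda is None for CPU-only builds, while torch.cuda.is_available() additionally requires a working NVIDIA driver and a visible GPU.

```python
import importlib.util


def cuda_build_info():
    """Report the CUDA/cuDNN versions bundled with the installed PyTorch binary.

    Returns None if PyTorch isn't installed in this environment.
    """
    if importlib.util.find_spec("torch") is None:
        return None
    import torch

    return {
        "torch": torch.__version__,
        # CUDA runtime the binary was built with and ships (None for CPU-only builds)
        "cuda_runtime": torch.version.cuda,
        # cuDNN version shipped with the binary (None if cuDNN is unavailable)
        "cudnn": torch.backends.cudnn.version(),
        # True only if a working NVIDIA driver and a visible GPU were found
        "gpu_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    print(cuda_build_info())
```

None of these values depend on a locally installed CUDA toolkit, which is why only the driver is needed alongside the binary.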

I would be more interested in knowing whether we need cudatoolkit=11.1 in:

conda install -y pytorch torchvision torchaudio cudatoolkit=11.1 -c pytorch -c nvidia

if CUDA is already installed.

Yes, it’s needed, since the binaries ship with their own libraries and will not use your locally installed CUDA toolkit unless you build PyTorch from source or a custom CUDA extension.

- Nvidia-smi: CUDA Version: 11.2

- PyTorch install: CUDA 11.3 or 11.6?

Local machine

nvidia-smi

Tue Aug 30 11:38:20 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 462.09 Driver Version: 462.09 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name TCC/WDDM | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 RTX A3000 Lapto… WDDM | 00000000:01:00.0 Off | N/A |

| N/A 51C P0 29W / N/A | 128MiB / 6144MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

PyTorch install

- The "Start Locally" page on pytorch.org currently offers CUDA 11.3 and 11.6.

- Since the command nvidia-smi run on my local machine indicates CUDA Version 11.2, should I choose CUDA 11.3 or CUDA 11.6 when downloading PyTorch?

Thanks a lot for your time and any help…

I would recommend using the latest one, i.e. 11.6 in your case.
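A rough way to reason about this choice: the "CUDA Version" in the nvidia-smi header is the newest CUDA version the installed driver supports, not an installed toolkit. The sketch below parses that header and applies a simplified heuristic, assuming CUDA minor version compatibility within a major release from CUDA 11 on (e.g. an 11.6 runtime on a driver reporting 11.2) and that newer drivers run older runtimes. Both helper names are hypothetical, and features that need a newer driver can still fail under minor version compatibility.

```python
import re

# Matches the header row of nvidia-smi, e.g.
# "| NVIDIA-SMI 462.09 Driver Version: 462.09 CUDA Version: 11.2 |"
HEADER_RE = re.compile(r"Driver Version:\s*([\d.]+)\s+CUDA Version:\s*(\d+)\.(\d+)")


def driver_cuda_version(smi_output):
    """Return (driver_version, (major, minor)) parsed from nvidia-smi output, or None."""
    match = HEADER_RE.search(smi_output)
    if match is None:
        return None
    return match.group(1), (int(match.group(2)), int(match.group(3)))


def runtime_likely_supported(driver_cuda, runtime_cuda):
    """Simplified heuristic, not an official compatibility rule."""
    if driver_cuda[0] != runtime_cuda[0]:
        # A driver for a newer major release also runs older runtimes.
        return driver_cuda[0] > runtime_cuda[0]
    # Same major release: minor version compatibility only exists from CUDA 11 on.
    return runtime_cuda[0] >= 11 or driver_cuda[1] >= runtime_cuda[1]


if __name__ == "__main__":
    header = "| NVIDIA-SMI 462.09 Driver Version: 462.09 CUDA Version: 11.2 |"
    driver, cuda = driver_cuda_version(header)
    for runtime in [(11, 3), (11, 6)]:
        print(driver, cuda, runtime, runtime_likely_supported(cuda, runtime))
```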

I appreciate all your patient and elaborate answers about the CUDA prerequisites. Sorry, one more quick question:

If I install the CUDA toolkit, compile some CUDA extensions, and then uninstall the CUDA toolkit again, do the CUDA extensions still work? I am not sure whether they link dynamically against the CUDA toolkit.

I’m asking because I am using a Docker image that does not include CUDA, and I don’t have permission to update this image.

I think it depends on your workflow and how you’ve built the CUDA extension.

I.e. if you’ve created a standalone library via an extension, I would assume it should work without the full CUDA toolkit (but might need all dynamically linked libs, which you could check via ldd).

On the other hand, if you are JIT compiling the extension you would need the CUDA toolkit in your environment.
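To check the dynamically linked libraries mentioned above, one option is to run ldd on the compiled extension and look for entries reported as "not found". The sketch below wraps that for Linux; the helper names are hypothetical. PyTorch libraries such as libc10.so may also show up as not found when inspected outside Python, since they are typically resolved only after import torch has loaded them, so focus on the CUDA libraries in the result.

```python
import subprocess


def missing_libs(ldd_output):
    """Return the shared libraries that ldd reports as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        line = line.strip()
        # Unresolved entries look like: "libcudart.so.11.0 => not found"
        if line.endswith("=> not found"):
            missing.append(line.split("=>", 1)[0].strip())
    return missing


def check_extension(so_path):
    """Run ldd on a compiled extension (Linux only) and return unresolved libraries."""
    result = subprocess.run(["ldd", so_path], capture_output=True, text=True, check=True)
    return missing_libs(result.stdout)
```

Calling check_extension on the built .so inside the target Docker image (the path depends on your build) shows which libraries would have to be provided once the toolkit is gone.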

What’s the current status? It seems that when I run pip install on a machine with a GPU, it installs the full CUDA version, and otherwise it installs a much smaller one without CUDA. This happens even in Docker, which makes it difficult to ensure consistent builds.

Is there any flag or environment variable to force it?

That’s not correct, as pip install torch will install the PyTorch binary together with all needed CUDA libraries from PyPI. This workflow allows us to create a small PyTorch binary, which can be hosted on PyPI, and to use the CUDA libraries from PyPI, which can also be shared with other Python packages.

Before this workflow was enabled all CUDA libraries were packaged into the large PyTorch wheel, which had a size of >1.5GB and was thus hosted on a custom mirror (not PyPI due to size limitations).

Yes, I was not clear. I noticed that at some point they were baked into the torch package. By "CUDA version" I meant the version that lists all those nvidia-* packages (nvidia-cuda-runtime-cu11 etc.) as requirements. So is there a flag to either force or prevent this?

I guess I can always manually append them to my pip install, but I’m not sure I wouldn’t miss something that way.

I’m still unsure what the issue is. The CUDA dependencies were always installed but were baked into the wheel before thus creating the large binary of >1.5GB.

If you don’t want to install the pip wheels with any CUDA dependencies, you could install the CPU-only wheels as given in the install instructions.

This workflow is also the same as before and for the current release the command would be:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu

on Linux systems.
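To see exactly which nvidia-* packages an installed torch wheel declares, and under which environment markers, the package metadata can be queried with importlib.metadata instead of guessing. This is a sketch; the helper names are hypothetical, and the exact requirement strings and markers depend on the torch release and platform.

```python
from importlib.metadata import PackageNotFoundError, requires


def nvidia_requirements(requirements):
    """Return (requirement, environment_marker) pairs for nvidia-* dependencies."""
    pairs = []
    for req in requirements or []:
        spec, _, marker = req.partition(";")
        if spec.strip().lower().startswith("nvidia-"):
            pairs.append((spec.strip(), marker.strip()))
    return pairs


def installed_torch_nvidia_requirements():
    """Query the metadata of the installed torch distribution, if any."""
    try:
        return nvidia_requirements(requires("torch"))
    except PackageNotFoundError:
        return []


if __name__ == "__main__":
    for spec, marker in installed_torch_nvidia_requirements():
        # An empty marker means the dependency applies on every platform
        print(spec, "|", marker or "(no marker)")
```

The markers explain why the same pip install torch command can pull in the CUDA packages on one platform and skip them on another.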

I have a Dockerfile with a line:

RUN pip install torch==1.13.1 torchvision torchaudio

(I’ll try 2.0 later)

When my colleague, who has a GPU, builds this image, we get a proper image that we can use for training.

When I build this image on my Mac, I only get the base torch package, without those CUDA packages.

Check which packages are found and installed, since macOS doesn’t support CUDA and pip would most likely install either the CPU-only binary or the MPS one (I’m not using a Mac, so I don’t know how the package selection works there).

Ok, I think I see the problem now. I was confused because, in my case, pip always runs inside Docker with a regular Ubuntu 22.04. What I didn’t consider is that Docker automatically pulls a different ubuntu:22.04 image depending on the host architecture. Since I have an M1 Mac with an ARM chip, I got the aarch64 one, and the torch installation only pulls in the CUDA dependencies on x86_64.

So it all boils down to Intel vs. M1 rather than GPU vs. no GPU.

In the end, I solved it by adding a platform flag at the beginning of my Dockerfile:

FROM --platform=linux/amd64 ubuntu:22.04

The build is somewhat slower than with the native ARM image, since the amd64 image runs under emulation, but there is no other choice if you later want to deploy this image on GPU VMs.
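To confirm which architecture pip actually sees during the build, a small check like this can be run inside the container (e.g. in a RUN step before the pip install). It is a heuristic that assumes, as observed in this thread, that the CUDA dependencies are only declared for Linux on x86_64; the function name is hypothetical. Under --platform=linux/amd64 the emulated container should report x86_64.

```python
import platform


def pulls_cuda_wheels(system=None, machine=None):
    """Heuristic: would pip resolve torch's nvidia-* dependencies on this platform?

    Assumes the dependencies are declared only for Linux on x86_64; check the
    actual wheel metadata for the torch release you install.
    """
    system = system or platform.system()
    machine = machine or platform.machine()
    return system == "Linux" and machine == "x86_64"


if __name__ == "__main__":
    print(platform.system(), platform.machine(), pulls_cuda_wheels())
```

Failing the build early when this returns False avoids silently producing an image without the CUDA libraries.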

Hi all, I am not sure if this thread is still active, but I have one question:


I installed PyTorch with:

conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

I tried this:

import torch

print(torch.cuda.get_device_name(0))

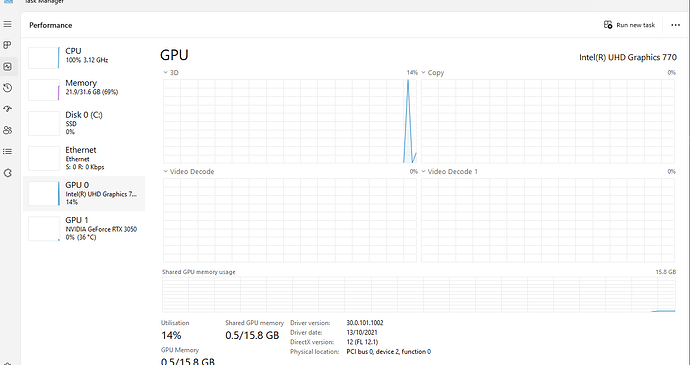

It shows: NVIDIA GeForce RTX 3050

Does this mean the GPU is ready to use? And do I still need to install CUDA to use the GPU?

- How can I download CUDA? Is there any other dependency or package that needs to be installed?

I’m asking because even though PyTorch sees the GPU, I don’t actually see it being used when I run deep learning code. Also, which CUDA toolkit should I download?

Yes, you should be able to use your GPU, as PyTorch is already able to communicate with it. Run a quick smoke test via x = torch.randn(1).cuda() and make sure print(x) shows a CUDA tensor (i.e. device='cuda:0').
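A slightly extended version of that smoke test is sketched below, assuming PyTorch is installed (gpu_smoke_test is a hypothetical helper). It also covers a common reason for not seeing any GPU utilization: the model and its inputs have to be moved to the GPU explicitly, otherwise everything runs on the CPU even though the GPU is detected.

```python
import importlib.util


def gpu_smoke_test():
    """Return None if PyTorch is missing, False if no GPU is visible, else True
    if a tensor, a module's parameters, and its output all live on the GPU."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch

    if not torch.cuda.is_available():
        return False
    device = torch.device("cuda")

    x = torch.randn(1).cuda()
    print(x)  # should print something like tensor([...], device='cuda:0')

    # Models and inputs stay on the CPU unless they are moved explicitly.
    model = torch.nn.Linear(8, 2).to(device)
    inputs = torch.randn(4, 8, device=device)
    out = model(inputs)
    print(torch.cuda.get_device_name(0), out.device)

    return x.is_cuda and out.is_cuda and next(model.parameters()).is_cuda


if __name__ == "__main__":
    print(gpu_smoke_test())
```

The same pattern (.to(device) on the model and on every input batch) applies to real training code; while it runs, nvidia-smi in another terminal should then list the Python process.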

No, as explained before: