Hello. I need to pad a tensor in the forward pass of a function, but I can’t find a function on the docs website that pads a tensor…

I’m assuming you’re padding a tensor rather than a Variable because you don’t need its gradients. If so, couldn’t you wrap the tensor in a Variable, pad it, and then turn it back into a tensor with `.data`?
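
A minimal sketch of that suggestion, assuming PyTorch 0.4 or later. In those versions `Variable` has been merged into `Tensor`, so the wrap/unwrap round trip still runs but is effectively a no-op; the pad tuple `(1, 1, 2, 2)` is just an illustrative choice:

```python
import torch
import torch.nn.functional as F
from torch.autograd import Variable

t = torch.randn(1, 3, 6, 8)  # plain tensor, no gradients needed

# Wrap in a Variable, pad it, then unwrap with .data.
# Since 0.4, Variable(t) simply returns a Tensor, so this still works.
padded = F.pad(Variable(t), (1, 1, 2, 2), 'constant', 0).data

print(padded.shape)  # torch.Size([1, 3, 10, 10])
```

On 0.4+ you can skip the wrapping entirely and call `F.pad(t, ...)` on the tensor directly; the result is the same.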

You could use `F.pad` from `torch.nn.functional`:

```python
import torch
import torch.nn.functional as F

a = torch.randn(1, 3, 6, 8)
p2d = (1, 1, 2, 2)  # pad last dim by (1, 1) and 2nd to last by (2, 2)
F.pad(a, p2d, 'constant', 0)
# >> [torch.FloatTensor of size 1x3x10x10]
```
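
The pad tuple is read in pairs starting from the last dimension and moving backwards: `(left, right)` for the last dim, then `(top, bottom)` for the one before it, and so on. Here is a small sketch using a hypothetical helper `padded_shape` (not part of PyTorch) that predicts the output shape and checks it against `F.pad`, including an asymmetric case:

```python
import torch
import torch.nn.functional as F

def padded_shape(shape, pad):
    """Hypothetical helper: output shape of F.pad for a given pad tuple.

    pad is consumed in (before, after) pairs, starting from the LAST dim.
    """
    out = list(shape)
    for i in range(len(pad) // 2):
        dim = len(shape) - 1 - i  # last dim first, then moving left
        out[dim] += pad[2 * i] + pad[2 * i + 1]
    return tuple(out)

a = torch.randn(1, 3, 6, 8)
p2d = (1, 1, 2, 2)

expected = padded_shape(a.shape, p2d)
actual = F.pad(a, p2d, 'constant', 0).shape
print(expected, tuple(actual))  # (1, 3, 10, 10) (1, 3, 10, 10)

# Asymmetric: 3 on the left of the last dim, 1 at the bottom of the 2nd-to-last
p_asym = (3, 0, 0, 1)
print(padded_shape(a.shape, p_asym), tuple(F.pad(a, p_asym).shape))  # (1, 3, 7, 11) (1, 3, 7, 11)
```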

Which PyTorch version are you using?

In the latest stable version (0.4.0), the operation returns a torch.FloatTensor:

```python
import torch
import torch.nn.functional as F

a = torch.randn(1, 3, 6, 8)
p2d = (1, 1, 2, 2)  # pad last dim by (1, 1) and 2nd to last by (2, 2)
b = F.pad(a, p2d, 'constant', 0)
print(b.type())  # torch.FloatTensor
```
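
To see which behaviour your installation gives, a quick sketch that prints the installed version alongside the result's type. On 0.4 and later, `b` is a plain `torch.Tensor`, and for a CPU float32 input `b.type()` reports `torch.FloatTensor`:

```python
import torch
import torch.nn.functional as F

print(torch.__version__)  # the installed PyTorch version string

a = torch.randn(1, 3, 6, 8)
b = F.pad(a, (1, 1, 2, 2), 'constant', 0)

print(b.type())                     # torch.FloatTensor for a CPU float32 input
print(isinstance(b, torch.Tensor))  # True on 0.4+
print(b.requires_grad)              # False: a was created without requires_grad
```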

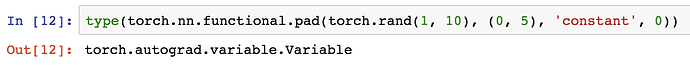

It seems that before 0.4, the output of the `torch.nn.functional` functions is a Variable…