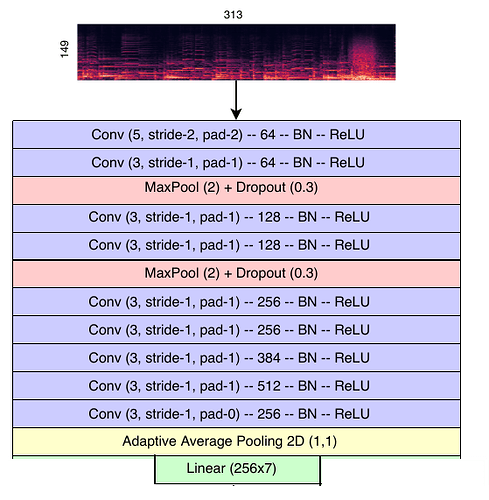

I’m in desperate need of help. I’m very new to PyTorch. What I’ve done is follow the 60-Minute Blitz tutorial and then started trying to implement a VGG-style network described described in this figure:

Here’s my code (also shared as a PasteBin link):

```python
import torch


class Net(torch.nn.Module):

    def __init__(self, n_features):
        super(Net, self).__init__()

        self.conv1 = torch.nn.Conv2d(in_channels=1, out_channels=64, kernel_size=5, stride=2, padding=2)
        self.bn1 = torch.nn.BatchNorm2d(num_features=64)
        self.relu1 = torch.nn.ReLU()
        self.conv2 = torch.nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1)
        self.bn2 = torch.nn.BatchNorm2d(num_features=64)
        self.relu2 = torch.nn.ReLU()
        self.mp1 = torch.nn.MaxPool2d(kernel_size=2)
        self.do1 = torch.nn.Dropout2d(p=0.3)

        self.conv3 = torch.nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1)
        self.bn3 = torch.nn.BatchNorm2d(num_features=128)
        self.relu3 = torch.nn.ReLU()
        self.conv4 = torch.nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1)
        self.bn4 = torch.nn.BatchNorm2d(num_features=128)
        self.relu4 = torch.nn.ReLU()
        self.mp2 = torch.nn.MaxPool2d(kernel_size=2)
        self.do2 = torch.nn.Dropout2d(p=0.3)

        self.conv5 = torch.nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1)
        self.bn5 = torch.nn.BatchNorm2d(num_features=256)
        self.relu5 = torch.nn.ReLU()
        self.conv6 = torch.nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1)
        self.bn6 = torch.nn.BatchNorm2d(num_features=256)
        self.relu6 = torch.nn.ReLU()
        self.conv7 = torch.nn.Conv2d(in_channels=256, out_channels=384, kernel_size=3, stride=1, padding=1)
        self.bn7 = torch.nn.BatchNorm2d(num_features=384)
        self.relu7 = torch.nn.ReLU()
        self.conv8 = torch.nn.Conv2d(in_channels=384, out_channels=512, kernel_size=3, stride=1, padding=1)
        self.bn8 = torch.nn.BatchNorm2d(num_features=512)
        self.relu8 = torch.nn.ReLU()
        self.conv9 = torch.nn.Conv2d(in_channels=512, out_channels=256, kernel_size=3, stride=1, padding=0)
        self.bn9 = torch.nn.BatchNorm2d(num_features=256)
        self.relu9 = torch.nn.ReLU()

        self.aap1 = torch.nn.AdaptiveAvgPool2d(output_size=(1, 1))
        self.fc1 = torch.nn.Linear(in_features=256, out_features=n_features)

    def forward(self, x):
        # Two convolutional layers (all conv layers followed by batch normalization and ReLU activation)
        x = self.relu1(self.bn1(self.conv1(x)))
        x = self.relu2(self.bn2(self.conv2(x)))
        # Max pooling and dropout
        x = self.mp1(x)
        x = self.do1(x)

        # Two conv layers
        x = self.relu3(self.bn3(self.conv3(x)))
        x = self.relu4(self.bn4(self.conv4(x)))
        # Max pooling and dropout
        x = self.mp2(x)
        x = self.do2(x)

        # Five conv layers
        x = self.relu5(self.bn5(self.conv5(x)))
        x = self.relu6(self.bn6(self.conv6(x)))
        x = self.relu7(self.bn7(self.conv7(x)))
        x = self.relu8(self.bn8(self.conv8(x)))
        x = self.relu9(self.bn9(self.conv9(x)))

        # Global average pooling down to 1x1 per channel
        x = self.aap1(x)

        # Fully connected layer
        x = x.view(-1, self.num_flat_features(x))  # view all channels as a single vector for the fc layer
        x = self.fc1(x)
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features
```
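When comparing a definition like this against a figure, it can help to work out the spatial size after every layer with the standard output-size formula for `Conv2d`/`MaxPool2d` (dilation 1, floor rounding): `out = (in + 2*padding - kernel) // stride + 1`. Below is a small pure-Python sketch that applies it to the kernel/stride/padding values from the code above. The 64×64 input size is a hypothetical placeholder, since the actual input size isn’t stated here, and `conv_out`/`trace_sizes` are just illustrative helper names.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of Conv2d or MaxPool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


# (label, kernel_size, stride, padding) for every layer in forward() that can change
# the spatial size. BatchNorm, ReLU and Dropout2d never change it, so they are omitted.
SPATIAL_LAYERS = [
    ("conv1", 5, 2, 2),
    ("conv2", 3, 1, 1),
    ("max pool 1", 2, 2, 0),  # MaxPool2d(kernel_size=2): stride defaults to kernel_size
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("max pool 2", 2, 2, 0),
    ("conv5", 3, 1, 1),
    ("conv6", 3, 1, 1),
    ("conv7", 3, 1, 1),
    ("conv8", 3, 1, 1),
    ("conv9", 3, 1, 0),  # padding=0, so this layer shrinks the map by 2
]


def trace_sizes(size):
    """Return (label, spatial size) after each layer for a square input of side `size`."""
    sizes = []
    for label, kernel, stride, padding in SPATIAL_LAYERS:
        size = conv_out(size, kernel, stride, padding)
        sizes.append((label, size))
    return sizes


for label, size in trace_sizes(64):  # 64x64 is a hypothetical input size
    print(f"{label:<11} {size}x{size}")
```

With a 64×64 input this gives 32×32 after `conv1`, 16×16 and 8×8 after the two max pools, and 6×6 after `conv9`; `aap1` then averages that to 1×1, so `fc1` sees 256 features. A 16×16 input, by contrast, would shrink to 0×0 at `conv9`. Lining these numbers (plus the channel counts) up against the figure layer by layer is a quick way to spot a mismatched stride or padding.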

For some reason the network isn’t learning anything. I just want to know if the network I’ve defined in my code is the same as the model described in the figure, so I can rule it out as the source of error.
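To rule the model definition in or out, one generic option is to push a dummy tensor through the network and record every layer’s output shape with forward hooks (`torch.nn.Module.register_forward_hook`). The sketch below is an assumption-laden illustration, not a fix: `trace_shapes` is a hypothetical helper name, and the tiny `demo` model and the `(2, 1, 64, 64)` input shape are made-up placeholders rather than anything taken from the figure.

```python
import torch


def trace_shapes(model: torch.nn.Module, input_shape):
    """Run a dummy input through `model` and record each leaf module's output shape."""
    records = []
    handles = []
    for name, module in model.named_modules():
        # Hook only leaf modules (no children), e.g. Conv2d, BatchNorm2d, ReLU, Linear.
        if len(list(module.children())) == 0:
            def hook(mod, inputs, output, name=name):
                records.append((name, type(mod).__name__, tuple(output.shape)))
            handles.append(module.register_forward_hook(hook))
    was_training = model.training
    model.eval()  # disable dropout and use BatchNorm running stats for this pass
    try:
        with torch.no_grad():
            model(torch.zeros(*input_shape))
    finally:
        for handle in handles:
            handle.remove()  # leave the model without leftover hooks
        model.train(was_training)
    return records


# Hypothetical toy model, only to show the output format.
demo = torch.nn.Sequential(
    torch.nn.Conv2d(1, 64, kernel_size=5, stride=2, padding=2),
    torch.nn.BatchNorm2d(64),
    torch.nn.ReLU(),
    torch.nn.MaxPool2d(kernel_size=2),
    torch.nn.AdaptiveAvgPool2d((1, 1)),
    torch.nn.Flatten(),
    torch.nn.Linear(64, 10),
)

for name, kind, shape in trace_shapes(demo, (2, 1, 64, 64)):
    print(f"{name:>3} {kind:<18} {shape}")
```

Because hooks fire on every call, a module object that `forward` calls twice shows up twice in the list, which makes reused layers easy to spot. The same call works on `Net`, e.g. `trace_shapes(Net(n_features=10), (1, 1, 64, 64))`, where `n_features=10` and the 64×64 input are again placeholders.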