I have an image, and I create four rotated versions of it using:

    def rotate4dtensor(x):
        x90 = x.transpose(2, 3)
        x180 = x.flip(2)
        x270 = x.transpose(2, 3).flip(3)
        return [x, x90, x180, x270]
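As a side note, `x.transpose(2, 3)` and `x.flip(2)` on their own are reflections rather than rotations; only `x.transpose(2, 3).flip(3)` is a true quarter turn. Below is a minimal sketch of the same helper built on `torch.rot90`, assuming an `(N, C, H, W)` tensor. The name `rotate4dtensor_rot90` is hypothetical and is not part of the training code above.

```python
import torch


def rotate4dtensor_rot90(x):
    """Return [x, 1 turn, 2 turns, 3 turns] for an (N, C, H, W) tensor.

    Hypothetical variant of rotate4dtensor. Each quarter turn is applied
    in the (H, W) plane, in the direction torch.rot90 defines
    (from dim 2 towards dim 3).
    """
    return [torch.rot90(x, k, dims=(2, 3)) for k in range(4)]


# Small square example so every rotation keeps the same shape.
x = torch.arange(2 * 3 * 4 * 4, dtype=torch.float32).reshape(2, 3, 4, 4)
rotated = rotate4dtensor_rot90(x)
print([tuple(r.shape) for r in rotated])
```

For non-square images the one-turn and three-turn outputs swap H and W, so stacking the four results only works when H equals W.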

During training, I call the function like this:

    target = target.cuda()
    input = input.cuda()
    input = torch.autograd.Variable(input)
    target_var = torch.autograd.Variable(target)
    output = model(input)
    loss = criterion(output, target_var)

    # vectorized version
    losses_rot = []

    # rotation loss for supervised
    sup_rot = rotate4dtensor(input)
    tgts = []
    sup_rot4 = torch.stack(sup_rot)
    out = model(sup_rot4, is_rotation=True)

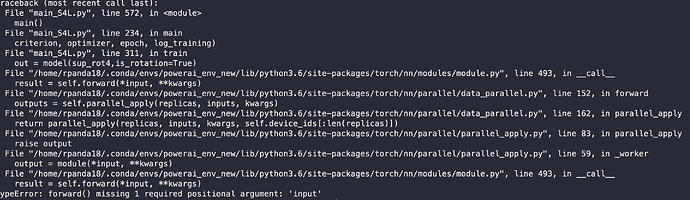

It throws the error in the screenshot when the number of GPUs is 3, 5, or 6, but works fine with 1, 2, or 4. Why?
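For reference, here is a CPU-only sketch of the shapes involved. It assumes the model is wrapped in `nn.DataParallel` (not shown in the snippet above), which splits its input along dimension 0 in a way similar to `torch.chunk`. `split_sizes` is a hypothetical helper used only for illustration. Since `torch.stack` puts the four rotations in a new leading dimension of size 4, this may be why the GPU count matters:

```python
import torch


def split_sizes(batch, n_gpus):
    """Sizes of the pieces torch.chunk yields along dim 0 (hypothetical helper)."""
    return [c.shape[0] for c in torch.chunk(batch, n_gpus, dim=0)]


x = torch.zeros(8, 3, 32, 32)            # stand-in for one (N, C, H, W) batch
stacked = torch.stack([x, x, x, x])      # 5-D tensor: (4, N, C, H, W)
concatenated = torch.cat([x, x, x, x])   # 4-D tensor: (4 * N, C, H, W)

print(tuple(stacked.shape), tuple(concatenated.shape))

# How a leading dimension of size 4 is divided for each GPU count.
for n_gpus in (1, 2, 3, 4, 5, 6):
    print(n_gpus, split_sizes(stacked, n_gpus))
```

If that assumption holds, a leading dimension of 4 yields one piece per GPU only for 1, 2, or 4 GPUs, which lines up with the counts that work. Using `torch.cat` instead of `torch.stack` keeps the input 4-D with a batch of `4 * N`, though whether that suits the `is_rotation=True` path depends on the model.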