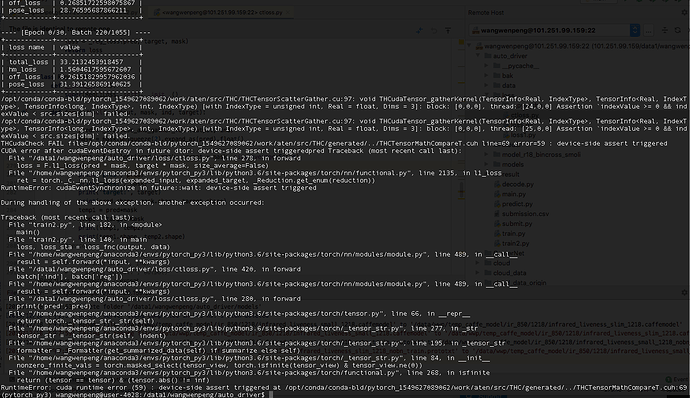

It suddenly shows this error. I have checked the shapes of the arguments passed to `l1_loss`. Can anyone help? @ptrblck

My code is as follows:

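    import torch.nn as nn
    import torch.nn.functional as F

    # _tranpose_and_gather_feat is defined elsewhere in the project.

    class RegL1Loss(nn.Module):
        def __init__(self):
            super(RegL1Loss, self).__init__()

        def forward(self, output, mask, ind, target):
            try:
                pred = _tranpose_and_gather_feat(output, ind)
                mask = mask.unsqueeze(2).expand_as(pred).float()
                # reduction='sum' is the current spelling of the deprecated size_average=False
                loss = F.l1_loss(pred * mask, target * mask, reduction='sum')
            except Exception:
                # Debug output for the failing batch
                print('pred', pred)
                print('mask:', mask)
                print('target:', target)
                print(pred.shape, mask.shape, target.shape)
                temp1 = pred * mask
                temp2 = target * mask
                print(temp1, temp2)
                print(temp1.shape, temp2.shape)
                # Re-raise so that `loss` is never used while undefined
                raise
            # except:
            #     print('output shape:', output.shape, 'ind shape:', ind.shape, 'mask shape:', mask.shape)
            #     print('ind:', ind)
            #     print('output:', output)
            #     assert 1==2
            # Normalize by the number of unmasked elements
            loss = loss / (mask.sum() + 1e-4)
            return loss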
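
A minimal sketch of input checks that could be run on CPU before the loss call. It assumes `_tranpose_and_gather_feat` reshapes `output` from `(B, C, H, W)` to `(B, H*W, C)` and gathers along dimension 1 with `ind` of shape `(B, K)`. That behaviour is an assumption, since the helper is not shown above. `transpose_and_gather_feat` and `check_reg_l1_inputs` are hypothetical stand-ins written for illustration, and the tensor sizes are made up.

```python
import torch
import torch.nn.functional as F


def transpose_and_gather_feat(feat, ind):
    # Hypothetical stand-in for the helper used in forward():
    # (B, C, H, W) -> (B, H*W, C), then pick K rows per sample via ind (B, K).
    feat = feat.permute(0, 2, 3, 1).contiguous()
    feat = feat.view(feat.size(0), -1, feat.size(3))
    index = ind.unsqueeze(2).expand(ind.size(0), ind.size(1), feat.size(2))
    return feat.gather(1, index)


def check_reg_l1_inputs(output, mask, ind, target):
    # Shape, dtype and index-range checks under the assumed layout.
    b, c, h, w = output.shape
    k = ind.shape[1]
    assert ind.dtype == torch.int64, f"ind dtype is {ind.dtype}"
    assert ind.shape == (b, k), f"ind shape is {tuple(ind.shape)}"
    assert mask.shape == (b, k), f"mask shape is {tuple(mask.shape)}"
    assert target.shape == (b, k, c), f"target shape is {tuple(target.shape)}"
    lo, hi = ind.min().item(), ind.max().item()
    assert 0 <= lo and hi < h * w, f"ind range [{lo}, {hi}] exceeds H*W={h * w}"


# Made-up example tensors, kept on CPU so that errors surface immediately.
torch.manual_seed(0)
output = torch.randn(2, 2, 4, 4)          # (B, C, H, W)
ind = torch.randint(0, 16, (2, 3))        # (B, K), values in [0, H*W)
mask = torch.tensor([[1, 1, 0], [1, 0, 0]])
target = torch.randn(2, 3, 2)             # (B, K, C)

check_reg_l1_inputs(output, mask, ind, target)
pred = transpose_and_gather_feat(output, ind)
m = mask.unsqueeze(2).expand_as(pred).float()
loss = F.l1_loss(pred * m, target * m, reduction='sum') / (m.sum() + 1e-4)
print(pred.shape, loss.item())
```

Running the same batch on CPU tends to give a clearer message than a GPU run, where an out-of-range index may only surface later as an unrelated-looking error.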